Sentinel VoLTE includes the following features to support XCAP.

See also the XCAP Query Examples.

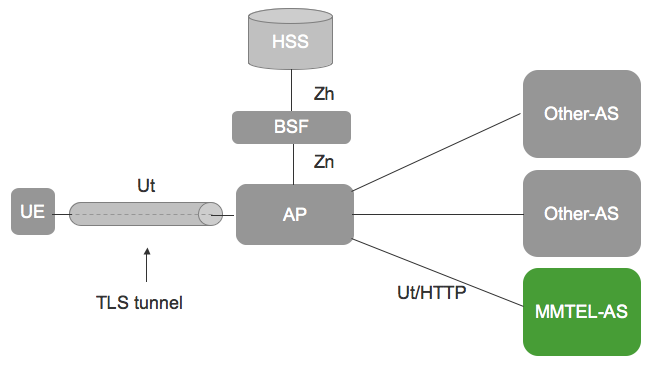

XCAP architecture within the IMS

The following diagram illustrates Sentinel VoLTE’s XCAP architecture within the IMS:

XCAP’s use within IMS is described in 3GPP TS 33.222.

Sentinel VoLTE and XCAP

Sentinel VoLTE supports the use of XCAP over HTTP. In the diagram above, Sentinel VoLTE is shown as the MMTEL-AS and Other-AS, not as the AP (Authentication Proxy) in the IMS architecture.

Sentinel VoLTE’s XCAP server support requires that Ut/HTTP requests pass through a separate Authentication Proxy (not provided by the Sentinel VoLTE product) before they reach the XCAP server. OpenCloud provides an implementation of the Authentication Proxy (Ut interface) in its Sentinel Authentication Gateway product.

HTTP URIs and XCAP

Sentinel VoLTE’s XCAP server runs alongside other HTTP servers, so it does not run on the root URI of the server; instead, it runs on a path relative to the root URI.

For example, if the XCAP server is running on xcap.server.net on port 8080, the base XCAP URL is:

http://xcap.server.net:8080/rem/sentinel/xcap
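The relationship between host, port, and mount path can be sketched in a few lines of Python. This is only an illustration: the helper name `xcap_base_url` is hypothetical, and the `/rem/sentinel/xcap` path is copied from the example above rather than read from any Sentinel configuration.

```python
from urllib.parse import urlunsplit

# Path under which Sentinel VoLTE's XCAP server is mounted, per the example above.
# Hypothetical constant: a real deployment may expose it differently.
XCAP_BASE_PATH = "/rem/sentinel/xcap"


def xcap_base_url(host: str, port: int, scheme: str = "http") -> str:
    """Return the base XCAP URL for an XCAP server mounted below the root URI."""
    # urlunsplit takes (scheme, netloc, path, query, fragment).
    return urlunsplit((scheme, f"{host}:{port}", XCAP_BASE_PATH, "", ""))


print(xcap_base_url("xcap.server.net", 8080))
# prints http://xcap.server.net:8080/rem/sentinel/xcap
```

The point of the sketch is that the XCAP root is the base path, not `/`, so every XCAP document URI a client uses must be resolved relative to it.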

The IMS XCAP standards place the XCAP root URI at the root of the host, so requests may need URI rewriting before they reach Sentinel VoLTE’s XCAP server.

This rewriting could be done by the AP, or by an HTTP proxy or web server (such as Apache HTTP Server) placed between the AP and Sentinel VoLTE’s XCAP server.
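The rewriting step can be sketched as a pure function that a proxy might apply to each incoming request URI. This is a minimal sketch under stated assumptions: the target host and port (`xcap.server.net:8080`), the use of plain HTTP on the internal hop, and the name `rewrite_xcap_uri` are all hypothetical. The example URI uses `simservs.ngn.etsi.org`, the application usage commonly used for MMTEL supplementary services, with an invented user.

```python
from urllib.parse import urlsplit, urlunsplit

# Assumptions for illustration only: where Sentinel VoLTE's XCAP server listens,
# and that the internal hop from the proxy uses plain HTTP.
SENTINEL_XCAP_NETLOC = "xcap.server.net:8080"
SENTINEL_XCAP_BASE_PATH = "/rem/sentinel/xcap"


def rewrite_xcap_uri(incoming_uri: str) -> str:
    """Map a root-based XCAP URI (as used by IMS clients) onto Sentinel's base path."""
    parts = urlsplit(incoming_uri)
    # For an absolute URI the path is either empty or starts with "/".
    path = parts.path or "/"
    # Prefix the mount path; the document and node selectors are kept as-is.
    new_path = SENTINEL_XCAP_BASE_PATH + path
    # The query (e.g. XCAP namespace bindings) is preserved unchanged.
    return urlunsplit(("http", SENTINEL_XCAP_NETLOC, new_path, parts.query, ""))


print(rewrite_xcap_uri(
    "https://xcap.operator.example/simservs.ngn.etsi.org/users/"
    "sip:alice@operator.example/simservs.xml"
))
# prints http://xcap.server.net:8080/rem/sentinel/xcap/simservs.ngn.etsi.org/users/sip:alice@operator.example/simservs.xml
```

In practice, an HTTP proxy such as Apache HTTP Server would usually express the same prefix mapping declaratively (for example with mod_proxy’s `ProxyPass` directive) rather than in code.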

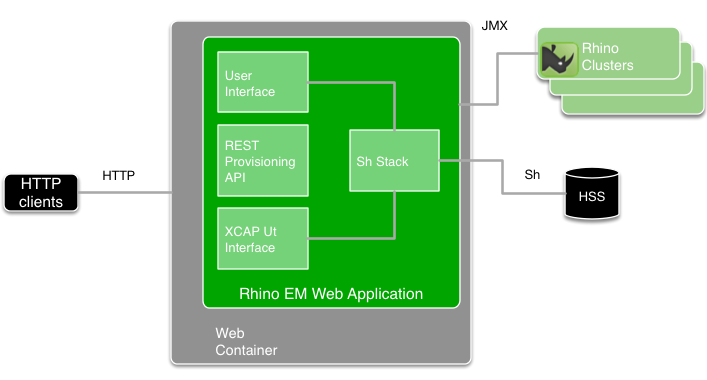

Integration with Rhino Element Manager and Sentinel

The Sentinel XCAP server is a layer on top of the existing provisioning services and runs alongside the machine-facing Sentinel REST API. XCAP is defined using REST principles, and the XCAP server uses much of the same technology as the Sentinel REST API. It can be co-deployed with the Sentinel REST Provisioning API and the human-facing web user interface, as in the following diagram:

Integrated components

The diagram above includes these components:

- The HTTP clients can be:
  - a web browser
  - a provisioning application
  - an XCAP client (such as XCAP-enabled user equipment connecting through an aggregation proxy).
- The HTTP servlet container configuration specifies the IP interfaces and port numbers on which the REM web application runs. Therefore, changing the port that the REM application runs on requires restarting the HTTP servlet container.
- The REM web application is packaged as a web archive (WAR) and can be deployed into various HTTP servlet containers. OpenCloud tests the application in the Apache Tomcat and Jetty HTTP servlet containers.
- The three HTTP-triggered components (web user interface, REST Provisioning API, and XCAP server) are served from the same port. The HTTP request URI determines which component processes a given request.
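Selecting a component by request URI on a shared port amounts to longest-prefix matching on the path. The Python sketch below illustrates that idea only. The XCAP prefix comes from the example earlier on this page; the REST API prefix (`/rem/sentinel/api`), the web UI prefix (`/rem`), the component labels, and `select_component` are hypothetical placeholders, not REM’s actual mappings.

```python
from typing import Optional

# Hypothetical prefix table: only the XCAP prefix comes from this page; the
# REST API and web UI prefixes are illustrative placeholders.
COMPONENT_PREFIXES = {
    "/rem/sentinel/xcap": "xcap-server",
    "/rem/sentinel/api": "rest-provisioning-api",
    "/rem": "web-user-interface",
}


def select_component(request_path: str) -> Optional[str]:
    """Return the component whose prefix is the longest match on a path-segment boundary."""
    best, best_len = None, -1
    for prefix, component in COMPONENT_PREFIXES.items():
        # Match whole segments only, so "/rem/sentinel/xcapx" is not treated as XCAP.
        matches = request_path == prefix or request_path.startswith(prefix + "/")
        if matches and len(prefix) > best_len:
            best, best_len = component, len(prefix)
    return best


print(select_component("/rem/sentinel/xcap/simservs.ngn.etsi.org/users/sip:alice@operator.example/simservs.xml"))
# prints xcap-server
```

Longest-prefix matching lets a broad catch-all (here the web UI) coexist with more specific mounts on the same port without ordering rules.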

Each of the components has its own security configuration.

Diameter Sh stacks and Rhino clusters

Each REM application includes one or more instances of the Diameter Sh stack. Each instance is scoped to the Rhino cluster it is associated with. The Rhino cluster is selected when the REM user selects a “Rhino instance” to log into.

Each instance of the Diameter Sh stack has a Diameter peer configuration, including Realm tables for multiple Destination Realms. In other words, a single Diameter stack instance can be configured to connect to more than one distinct HSS.
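A realm table can be pictured as a mapping from Destination-Realm to the peers able to serve that realm, which is how one stack instance can reach several HSSs. The Python sketch below is a conceptual model only: `ShStackConfig`, `peers_for`, and all realm and host names are hypothetical, and it does not reflect the actual configuration format of the Rhino Diameter stack.

```python
from __future__ import annotations

from dataclasses import dataclass, field


@dataclass
class ShStackConfig:
    """Hypothetical model of one Diameter Sh stack instance's peer configuration."""

    origin_realm: str
    # Realm table: Destination-Realm -> ordered list of candidate peers (HSS hosts).
    realm_table: dict[str, list[str]] = field(default_factory=dict)

    def peers_for(self, destination_realm: str) -> list[str]:
        """Return the peers that can serve requests for the given Destination-Realm."""
        peers = self.realm_table.get(destination_realm)
        if not peers:
            raise LookupError(f"no route for Destination-Realm {destination_realm!r}")
        return peers


# One stack instance routing to two distinct HSSs, selected by Destination-Realm.
stack = ShStackConfig(
    origin_realm="rem.example.net",
    realm_table={
        "hss-a.example.net": ["hss1.hss-a.example.net", "hss2.hss-a.example.net"],
        "hss-b.example.net": ["hss1.hss-b.example.net"],
    },
)
print(stack.peers_for("hss-b.example.net"))  # prints ['hss1.hss-b.example.net']
```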

Each instance of the Diameter Sh stack is shared by both the XCAP server and parts of the web user interface.
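The sharing described above can be modelled as a registry that hands out one Sh stack instance per Rhino cluster, so the XCAP server and the web user interface obtain the same instance for the same cluster. This is a hypothetical sketch: `ShStack`, `ShStackRegistry`, and the cluster name are invented for illustration, and how the XCAP server determines its cluster is not described on this page, so the sketch simply takes the cluster name as given.

```python
import threading


class ShStack:
    """Hypothetical stand-in for one Diameter Sh stack instance."""

    def __init__(self, cluster: str) -> None:
        self.cluster = cluster


class ShStackRegistry:
    """Hands out exactly one Sh stack instance per Rhino cluster (hypothetical)."""

    def __init__(self) -> None:
        self._stacks = {}  # cluster name -> ShStack
        # A servlet container serves requests concurrently, so guard creation.
        self._lock = threading.Lock()

    def stack_for(self, cluster: str) -> ShStack:
        with self._lock:
            if cluster not in self._stacks:
                self._stacks[cluster] = ShStack(cluster)
            return self._stacks[cluster]


registry = ShStackRegistry()
# The XCAP server and the web UI ask for the same cluster and get the same instance.
xcap_side = registry.stack_for("cluster-1")
web_ui_side = registry.stack_for("cluster-1")
print(xcap_side is web_ui_side)  # prints True
```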

Each Rhino cluster has connectivity to various systems. (That connectivity is not shown in the diagram above.)