Architecture

orca is a command-line tool used to distribute upgrade/patching tasks to multiple hosts, such as all hosts in a Rhino cluster, and it implements the cluster migration workflow.

It consists of:

- the orca script, which is run from a Linux management host (for example a Linux workstation or one of the cluster’s REM nodes)
- several sub-scripts and tools which are invoked by orca (never invoked directly by the user):
  - common modules
  - workflow modules
  - slee-data-migration
  - slee-data-transformation
  - slee-patch-runner

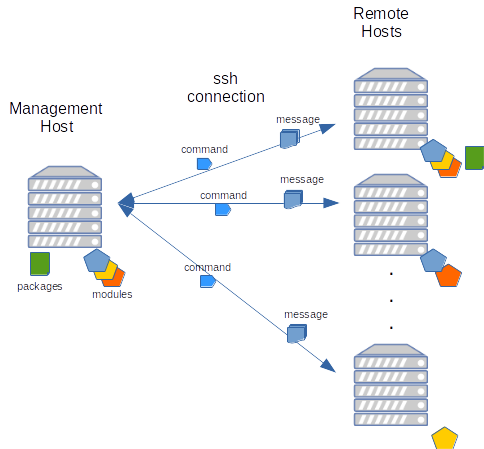

orca requires ssh connections to the remote hosts. It copies the required modules and tools to the hosts and triggers the command from the management host; the transferred modules then run on the remote hosts.

Control is done over the ssh connection, and orca stores logs on the management host.

The picture above shows how orca interacts with the tools.

Upon a valid command orca will transfer the required module to the remote host.

Some commands include packages that will be used in the remote host, like a minor upgrade package or patch package.

The packages are transferred automatically, and then kept in the install path in the remote host.

When the command is executing, the module running in the remote host will send messages back to the main process in the management host.

All messages are persisted in the log files in the management host for debug purposes.

Some messages are shown in the console for the user, others are used internally by orca.

Running orca


Standardized path structure

When orca operates on Rhino installations, it depends on the installation on every host being set up according to a standardized path structure, which is documented here.

It can also be used to migrate an existing Rhino installation to this standardized path structure (with temporary loss of service); see the standardize-paths command details below.

Do not attempt to run any other

Limitation of one node per host


The command-line syntax for invoking orca is

orca --hosts <host1,host2,…> [--skip-verify-connections] [--remote-home-dir DIR] <command> [args…]

where

- host1,host2,… is a comma-separated list of all hosts on which the <command> is to be performed. This will normally be all hosts in the cluster, but sometimes you may want to use a selected host or set of hosts. For example, you may want to do some operation in batches when the cluster is large. Some commands, such as upgrade-rem, can operate on different types of hosts, which may not be running Rhino.
- the --skip-verify-connections option, or -k for short, instructs orca not to test connectivity to the hosts before running a command. The default is to test connectivity with every command, which reduces the risk of a command being only partially completed due to a network issue.
- the --remote-home-dir or -r option is used to optionally specify a home path other than /home/sentinel on the remote Rhino hosts. It should not be given when operating on other types of host, such as REM nodes.
- command is the command to run: one of status, prepare, prepare-new-rhino, migrate, rollback, cleanup, apply-patch, revert-patch, minor-upgrade, major-upgrade, upgrade-rem, standardize-paths, import-feature-scripts, rhino-only-upgrade or run.
- args is one or more command-specific arguments.

For further information on command and args see the documentation for each command below.
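When orca is driven from a script, the syntax above can be assembled programmatically. The following is a minimal sketch only: the helper name build_orca_argv and its parameters are hypothetical and are not part of orca; only the option names come from the syntax described above.

```python
import shlex


def build_orca_argv(hosts, command, args=(), skip_verify=False,
                    remote_home_dir=None, orca="./orca"):
    """Assemble an orca command line as an argv list (hypothetical helper).

    Global options go before the command; command-specific arguments go after it.
    """
    if not hosts:
        raise ValueError("at least one host is required")
    argv = [orca, "--hosts", ",".join(hosts)]
    if skip_verify:
        argv.append("--skip-verify-connections")
    if remote_home_dir is not None:
        # Only meaningful for Rhino hosts, per the option description above.
        argv += ["--remote-home-dir", remote_home_dir]
    argv.append(command)
    argv += list(args)
    return argv


# Print the command line instead of running it.
print(shlex.join(build_orca_argv(["host1", "host2"], "status", skip_verify=True)))
```

Returning a list rather than a single string avoids quoting problems if the list is later passed to subprocess.run.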

orca writes output to the terminal and also to log files on the hosts.

Once the command is complete, orca may also copy additional log files off the hosts and store them locally under the log directory.

Running a migrate, rollback, apply-patch or revert-patch command will typically take between 5 and 10 minutes per node, depending on the timeouts set to allow time for active calls to drain.

At the end of many commands, orca will list which commands can be run given the current node/cluster status, for example:

Available actions:
  - prepare
  - cleanup --clusters
  - rollback

Always use

Arguments common to multiple commands

Some arguments are common to multiple orca commands. They must always be specified after the command.

SLEE drain timeout

Rhino will not shut down until all activities (traffic) on the node have concluded. To achieve this, the operator must redirect traffic away from the node before starting a procedure such as a migration or upgrade.

The argument --stop-timeout N controls how long orca will wait for active calls to drain and for the SLEE to stop gracefully.

The value is specified in seconds, or 0 for no timeout (wait forever). If calls have not drained in the specified time, and thus the SLEE has not stopped, the node will be forcibly killed.

This option applies to the following commands:

- migrate
- rollback
- apply-patch
- revert-patch
- minor-upgrade
- major-upgrade
- rhino-only-upgrade

It is optional, with a default value of 120 seconds.
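As an illustration of the rules above, a wrapper script might validate the timeout before passing it on. This is a sketch under stated assumptions: stop_timeout_args is a hypothetical helper, not an orca function; only the --stop-timeout option, its meaning and the list of commands come from this section.

```python
# Commands that accept --stop-timeout, as listed above.
STOP_TIMEOUT_COMMANDS = {
    "migrate", "rollback", "apply-patch", "revert-patch",
    "minor-upgrade", "major-upgrade", "rhino-only-upgrade",
}


def stop_timeout_args(command, seconds=None):
    """Return the arguments to place after the command name (hypothetical helper)."""
    if seconds is None:
        return []  # omit the option; orca then uses its default of 120 seconds
    if command not in STOP_TIMEOUT_COMMANDS:
        raise ValueError(f"--stop-timeout does not apply to {command!r}")
    if seconds < 0:
        raise ValueError("timeout must be 0 (wait forever) or a positive number of seconds")
    return ["--stop-timeout", str(seconds)]


print(stop_timeout_args("migrate", 300))
```

Because common arguments must follow the command, the returned list is meant to be appended after the command name, never before it.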

Dual-stage operations

The --pause, --continue and --no-pause options control behaviour during long operations which apply to multiple hosts, where the user may want the operation to pause after the first host to run validation tests.

They apply to the following commands:

- apply-patch
- revert-patch
- minor-upgrade
- major-upgrade
- rhino-only-upgrade

--pause is used to stop orca after the first host, --continue is used when re-running the command for the remaining hosts, and --no-pause means that orca will patch/upgrade all hosts in one go. These options are mutually exclusive and exactly one must be specified when using the above-listed commands.
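The "exactly one" rule can be checked before a command is submitted. The sketch below is illustrative only: check_stage_flags is a hypothetical helper in a wrapper script and says nothing about how orca itself validates its arguments.

```python
# Commands for which exactly one staging option is required, as listed above.
DUAL_STAGE_COMMANDS = {
    "apply-patch", "revert-patch", "minor-upgrade",
    "major-upgrade", "rhino-only-upgrade",
}
STAGE_FLAGS = ("--pause", "--continue", "--no-pause")


def check_stage_flags(command, args):
    """Return the single staging flag found in args, or None if the command needs none."""
    if command not in DUAL_STAGE_COMMANDS:
        return None
    present = [flag for flag in STAGE_FLAGS if flag in args]
    if len(present) != 1:
        raise ValueError(
            f"{command} needs exactly one of {', '.join(STAGE_FLAGS)}; got {present or 'none'}"
        )
    return present[0]


print(check_stage_flags("apply-patch", ["patch.zip", "--pause"]))
```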

REM installation information

- The --backup-dir option informs orca where it can locate, or should store, REM backups created during upgrades.
- The --remote-tomcat-home option informs orca where the REM Tomcat installation is located on the host (though orca will try to autodetect this based on environment variables, running processes and searching the home directory). It corresponds to the CATALINA_HOME environment variable.
- In complex Tomcat setups you may also need to specify the --remote-tomcat-base option which corresponds to the CATALINA_BASE environment variable.

These options apply to the following commands:

- status, when used on a host with REM installed
- upgrade-rem
- rollback-rem
- cleanup-rem

All these options are optional and any number of them can be specified at once. When exactly one of --remote-tomcat-home and --remote-tomcat-base is specified, the other defaults to the same value.
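The defaulting rule for the two Tomcat options can be written out as a small function. This is a sketch for illustration; resolve_tomcat_dirs is a hypothetical name, and returning None for both values simply stands in for "leave it to orca's autodetection".

```python
def resolve_tomcat_dirs(home=None, base=None):
    """Apply the documented defaulting between --remote-tomcat-home and --remote-tomcat-base."""
    if home is None and base is None:
        return None, None  # neither given: nothing to default, autodetection applies
    if home is None:
        home = base  # only the base was given, so the home defaults to it
    if base is None:
        base = home  # only the home was given, so the base defaults to it
    return home, base


print(resolve_tomcat_dirs(home="/opt/tomcat"))
```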

orca commands

Be sure you are familiar with the concepts and patching process overview described in Cluster migration workflow.

status

The status command prints the status of the nodes on the specified hosts:

- which cluster directories are present
- which cluster directory is live
- the SLEE state of the live node
- a list of export (backup) directories present on the node, if any.

It will also output some global status information, which at present consists of a list of nodes that use per-node service activation state.

The status command will display much of its information even if Rhino is not running, though there may be additional information it can include when it can contact a running Rhino.

standardize-paths

The standardize-paths command renames and reconfigures an existing Rhino installation so it conforms to the standard path structure described here.

This command requires three arguments:

- --product <product>, where <product> is the name of the product installed in the Rhino installation. Specify the name as a single word in lowercase, e.g. volte or ipsmgw.
- --version <version>, where <version> is the version of the product installed in the Rhino installation. Specify the version as a set of numbers separated by dots, e.g. 2.7.0.10.
- --sourcedir <sourcedir>, where <sourcedir> is the path to the existing Rhino installation, relative to the rhino user’s home directory.

Note that the standardize-paths command can only perform filesystem-level manipulation.

In particular, it cannot

- change the user under whose home directory Rhino is installed (this should be the rhino user)
- change the name of the database (this should be in the form rhino_<cluster ID>)
- change any init.d, systemctl or similar scripts that start Rhino automatically on boot, because editing these requires root privileges.
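The format requirements for the three standardize-paths arguments lend themselves to a pre-flight check. The sketch below is an assumption-laden illustration: the helper name is hypothetical, and reading "single word in lowercase" as "lowercase letters and digits, starting with a letter" is an interpretation, not something the documentation states.

```python
import re

# Assumption: a "single word in lowercase" means lowercase letters and digits only.
_PRODUCT = re.compile(r"[a-z][a-z0-9]*")
# Numbers separated by dots, e.g. 2.7.0.10.
_VERSION = re.compile(r"\d+(\.\d+)*")


def standardize_paths_args(product, version, sourcedir):
    """Validate and return the arguments for standardize-paths (hypothetical helper)."""
    if not _PRODUCT.fullmatch(product):
        raise ValueError(f"product must be a single lowercase word: {product!r}")
    if not _VERSION.fullmatch(version):
        raise ValueError(f"version must be dot-separated numbers: {version!r}")
    if sourcedir.startswith("/"):
        raise ValueError("sourcedir must be relative to the rhino user's home directory")
    return ["--product", product, "--version", version, "--sourcedir", sourcedir]


# "old-rhino" is a made-up directory name used only for this example.
print(standardize_paths_args("volte", "2.7.0.10", "old-rhino"))
```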

prepare

The prepare command prepares for a migration by creating one node in the uplevel cluster.

It can take three arguments:

- The --copy-db argument will copy the management database of the live cluster to the new cluster. This means that the new cluster will contain the same deployments as the live cluster.
- The --init-db argument will initialize an empty management database in the new cluster. This will allow a different product version to be installed in the new cluster.
- The -n/--new-version argument takes a string that will be used as the version in the name of the new cluster, e.g. "2.7.0.6".

Note that the --copy-db and --init-db arguments cannot be used together.

- The new cluster will have the next sequential ID to the current live cluster. For example, if the current live cluster has cluster ID 100, the new one will have cluster ID 101.
- The configuration of the new cluster is adjusted automatically; there is no need to manually change any configuration files.
- When preparing the first set of nodes in the uplevel cluster, use the --copy-db or --init-db option, which will cause orca to also prepare the new cluster’s database.
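The constraints on the prepare arguments can be summarised in a few lines. This is an illustrative sketch: prepare_args and next_cluster_id are hypothetical helpers written for this example; only the option names, the mutual exclusion and the sequential cluster ID rule come from the text above.

```python
def prepare_args(new_version=None, copy_db=False, init_db=False):
    """Build the argument list for prepare, rejecting --copy-db together with --init-db."""
    if copy_db and init_db:
        raise ValueError("--copy-db and --init-db cannot be used together")
    args = []
    if copy_db:
        args.append("--copy-db")
    if init_db:
        args.append("--init-db")
    if new_version is not None:
        args += ["--new-version", new_version]
    return args


def next_cluster_id(live_cluster_id):
    """The new cluster takes the next sequential ID after the live cluster."""
    return live_cluster_id + 1


print(prepare_args(new_version="2.7.0.6", copy_db=True), next_cluster_id(100))
```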

prepare-new-rhino

The prepare-new-rhino command does the same as the prepare command, except that it clones the current cluster to a new cluster that uses a newly specified Rhino.

The arguments are:

- The -n/--new-version argument takes a string that will be used as the version in the name of the new cluster, e.g. "2.7.0.6". The version number here is for the Sentinel product and not the Rhino version.
- The -r/--rhino-package argument takes the Rhino install package to use, e.g. "rhino-install-2.6.1.2.tar"
- The -o/--installer-overrides argument takes a properties file used by the rhino-install.sh script.


Use --installer-overrides with care. If specified it will override the existing properties used by the current running system.

For more details about rhino installation see Install Unattended


migrate

The migrate command runs a migration from a downlevel to an uplevel cluster.

You must have first prepared the uplevel cluster using the prepare command.

To perform the migration, orca will

- if the --special-first-host option was passed to the command, export the current live node configuration (as a backup)
- stop the live node, and wait to ensure all sockets are closed cleanly
- edit the rhino symlink to point at the uplevel cluster
- start Rhino in the uplevel cluster (if the --special-first-host option is used, then this Rhino node will be explicitly made into a primary node; use this if and only if the uplevel cluster is empty or has no nodes currently running)

rollback

The rollback command will move a node back from the uplevel cluster to the downlevel cluster - the reverse of migrate.

If there is more than one old cluster, the most recent (highest ID number) is assumed to be the target downlevel cluster.

The process is:

- stop the live node, and wait to ensure all sockets are closed cleanly
- edit the rhino symlink to point at the downlevel cluster
- start Rhino in the downlevel cluster (if the --special-first-host option is used, then this Rhino node will be explicitly made into a primary node - use this if and only if the downlevel cluster is empty or has no nodes currently running).

The usage of --special-first-host or -f can change depending on the cluster states.

If there are 2 clusters active (50 and 51 for example) and the user wants to roll back a node from cluster 51, don’t use the --special-first-host option. The reason is that cluster 50 already has a primary member.

If the only active cluster is cluster 51 and the user wants to roll back one node or all the nodes, then include the --special-first-host option, because cluster 50 is inactive and the first node migrated has to be set as part of the primary group before other nodes join the cluster.
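The decision described above reduces to one question: does the target cluster already have running nodes? A minimal sketch follows; the function name and the shape of the running_nodes mapping (cluster ID to number of running nodes) are assumptions made for this example, and in practice the counts would come from inspecting the output of the status command.

```python
def needs_special_first_host(target_cluster_id, running_nodes):
    """Return True when the target cluster has no running nodes.

    running_nodes maps cluster ID -> number of nodes currently running there
    (a hypothetical structure for this sketch).
    """
    return running_nodes.get(target_cluster_id, 0) == 0


# Clusters 50 and 51 both active: cluster 50 already has a primary member.
print(needs_special_first_host(50, {50: 2, 51: 1}))
# Only cluster 51 active: the first node rolled back to 50 must become primary.
print(needs_special_first_host(50, {51: 3}))
```

The same check applies to migrate, with the uplevel cluster as the target.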

cleanup

The cleanup command will delete a set of specified cluster or export (backup) directories on the specified nodes.

It takes one or two arguments:

- --clusters <id1,id2,…> and/or
- --exports <id1,id2,…>, where id1,id2,… is a comma-separated list of cluster IDs.

For example, cleanup --clusters 102 --exports 103,104 will delete the cluster directory numbered 102 and the export directories corresponding to clusters 103 and 104.

This command will reject any attempt to remove the live cluster directory.

In general this command should only be used to delete cluster directories:

- once any patching or upgrading is fully completed, and the uplevel version has passed all acceptance tests and been accepted as live configuration
- if it is determined that the patch or upgrade is faulty, and rollback to the downlevel version has been fully completed on all nodes.

When cleaning up a cluster directory, the corresponding cluster database is also deleted.
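Because cleaning up a cluster directory also deletes its database, a wrapper script may want to check the request before invoking orca. The following sketch is illustrative: cleanup_args is a hypothetical helper, and the live cluster ID is assumed to have been obtained beforehand (for example from the status command). orca performs its own rejection of the live cluster regardless.

```python
def cleanup_args(live_cluster_id, clusters=(), exports=()):
    """Build the arguments for cleanup, refusing to target the live cluster directory."""
    if not clusters and not exports:
        raise ValueError("specify --clusters and/or --exports")
    if live_cluster_id in clusters:
        raise ValueError(f"cluster {live_cluster_id} is live and must not be removed")
    args = []
    if clusters:
        args += ["--clusters", ",".join(str(c) for c in clusters)]
    if exports:
        args += ["--exports", ",".join(str(e) for e in exports)]
    return args


# 105 is a made-up live cluster ID for this example.
print(cleanup_args(105, clusters=[102], exports=[103, 104]))
```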

apply-patch

The apply-patch command will perform a prepare and migrate to apply a given patch to the specified nodes in a single step.

It requires one argument: <file>, where <file> is the path (full or relative path) to the patch .zip file.

Specifically, the apply-patch command does the following:

- on the first host in the specified list of hosts, prepare the uplevel cluster and migrate to it using the same processes as in the prepare and migrate commands
- on the other hosts, prepare the uplevel cluster
- copy the patch to the first host
- apply the patch to the first host (using the apply-patch.sh script provided in the patch .zip file)
- once the first host has successfully recovered, migrate the other hosts. They will pick up the patch automatically as a consequence of being in the same new Rhino cluster.

revert-patch

The revert-patch command operates identically to the apply-patch command, but reverts the patch instead of applying it.

Like apply-patch, it takes a single <file> argument.

Since apply-patch does a migration, it would be most common to revert a patch using the rollback command.

However, a rollback will lose any configuration changes made after applying the patch, whereas this command performs a second migration and hence preserves configuration changes.

minor-upgrade

The minor-upgrade command will perform a prepare creating a new cluster, clear the management database for the new cluster, install the new software version and migrate nodes in a single step.

It requires the following arguments:

- <the packages path>, a path that contains the compressed product sdk with the offline repositories
- <install properties file>, the install properties file for the product

To run a custom package during the installation, specify either or both in the packages.cfg:

- post_install_package to specify a custom package to be applied after the new software is installed but before the configuration is restored
- post_configure_package to specify the custom package to be applied after all configuration and data migration is done on the first node, but before the other nodes are migrated

The workflow is as follows:

- validate the specified install.properties, and check it is not empty
- check the connectivity to the hosts
- prepare a new cluster. If a new Rhino and/or Java is specified, install them as part of the process.
- clean the new cluster database
- create a rhino export from the current installation
- persist RA configuration and profile table definitions (their table names and profile specifications)
- migrate the node as explained in Cluster migration workflow
- import licenses from the old cluster into the new cluster
- copy the upgrade pack to the first node with the specified install.properties
- install the new version of the product into the new cluster using the product’s installer
- if post install package is present, copy the custom package to the first node and run the install executable from that package
- recreate profile tables in the new cluster as needed, to match the set of profile tables from the previous cluster
- restore the rhino configuration from the customer export (access, logging, SNMP, object pools, etc)
- restore the RA configuration
- restore the profiles from the customer export (maintain the current customer configuration)
- if post configure package is present, copy the post-configuration custom package to the first node and run the install executable from that package
- migrate other nodes as explained in Cluster migration workflow

A command example is:

./orca --hosts host1,host2,host3 minor-upgrade packages $HOME/install/install.properties

major-upgrade

The major-upgrade command will perform a prepare creating a new cluster, clear the management database for the new cluster, install the new software version and migrate nodes in a single step.

It requires the following arguments:

- <the packages path>, a path that contains the compressed product sdk with the offline repositories
- <install properties file>, the install properties file for the product
- optional --skip-new-rhino, indicates not to install a new Rhino present as part of the upgrade package
- optional --installer-overrides, takes a properties file used by the rhino-install.sh script
- optional --license, a license file to install with the new Rhino. If no license is specified, orca will check for a license in the upgrade package, and if no license is present there it will check whether the currently installed license is supported for the new Rhino version.
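The license lookup order described for --license can be pictured as a simple fallback chain. This is a sketch only: choose_license and its parameters are hypothetical, and raising an error when no usable license is found is an assumption, since the text above does not say what orca does in that case.

```python
def choose_license(specified=None, packaged=None, installed_supported=False):
    """Pick a license source in the documented order.

    specified: path given with --license, if any
    packaged: path of a license found in the upgrade package, if any
    installed_supported: whether the installed license supports the new Rhino
    """
    if specified is not None:
        return "specified", specified
    if packaged is not None:
        return "package", packaged
    if installed_supported:
        return "installed", None
    # Assumption: with no usable license the upgrade cannot proceed.
    raise LookupError("no license available for the new Rhino version")


print(choose_license(packaged="packages/rhino.license"))
```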


Use --installer-overrides with care. If specified it will override the existing properties used by the current running system.

For more details about rhino installation see Install Unattended


To run a custom package during the installation, specify either or both in the packages.cfg:

- post_install_package to specify a custom package to be applied after the new software is installed but before the configuration is restored
- post_configure_package to specify a custom package to be applied after all configuration and data migration is done on the first node, but before the other nodes are migrated

The workflow is as follows:

- validate the specified install.properties, and check it is not empty
- check that the packages defined in packages.cfg exist: sdk, rhino, java, post install, post configure
- check the connectivity to the hosts
- prepare a new cluster. If a new Rhino and/or Java is specified, install them as part of the process.
- clean the new cluster database
- create a rhino export from the current installation
- persist RA configuration and profile table definitions (their table names and profile specifications)
- migrate the node as explained in Cluster migration workflow
- import licenses from the old cluster into the new cluster
- copy the upgrade pack to the first node with the specified install.properties
- install the new version of the product into the new cluster using the product’s installer
- generate an export from the new version
- apply data transformation rules on the downlevel export
- recreate profile tables in the new cluster as needed, to match the set of profile tables from the previous cluster
- if post install package is present, copy the custom package to the first node and run the install executable from that package
- restore the RA configuration after transformation
- restore the profiles from the transformed export (maintain the current customer configuration)
- restore the rhino configuration from the customer export (access, logging, SNMP, object pools, etc)
- if post configure package is present, copy the post-configuration custom package to the first node and run the install executable from that package
- do a 3-way merge for Feature Scripts
- manually import the Feature Scripts after checking they are correct; see Feature Scripts conflicts and resolution
- migrate other nodes as explained in Cluster migration workflow

A command example is:

./orca --hosts host1,host2,host3 major-upgrade packages $HOME/install/install.properties

upgrade-rem

The upgrade-rem command upgrades Rhino Element Manager hosts to new versions.

Like the other commands, it takes a list of hosts which the upgrade should be performed on, but these hosts are likely to be specific to Rhino Element Manager, and not actually running Rhino itself.

The command can be used to update both the main REM package, and also plugin modules.

A command example is:

./orca --hosts remhost1,remhost2 upgrade-rem packages

The information about which plugins to upgrade is taken from the packages.cfg file.

As part of this command orca generates a backup. This is stored in the backup directory, which (if not overridden by the --backup-dir option) defaults to ~/rem-backup on the REM host. The backup takes the form of a directory named <timestamp>#<number>, where <timestamp> is the time the backup was created in the form YYYYMMDD-HHMMSS, and <number> is a unique integer (starting at 1). For example:

20180901-114400#2

indicates a backup created at 11:44am on 1st September 2018, labelled as backup number 2. The backup number can be used to refer to the backup in the rollback-rem and cleanup-rem commands described below.
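The backup naming scheme is regular enough to parse in a script, for instance when listing the contents of the backup directory. The sketch below assumes nothing beyond the <timestamp>#<number> format described above; parse_backup_name is a hypothetical helper.

```python
import re
from datetime import datetime

# <timestamp>#<number>, with the timestamp in the form YYYYMMDD-HHMMSS.
_BACKUP_NAME = re.compile(r"(\d{8}-\d{6})#(\d+)")


def parse_backup_name(name):
    """Split a REM backup directory name into (creation time, backup number)."""
    match = _BACKUP_NAME.fullmatch(name)
    if match is None:
        raise ValueError(f"not a REM backup directory name: {name!r}")
    created = datetime.strptime(match.group(1), "%Y%m%d-%H%M%S")
    return created, int(match.group(2))


created, number = parse_backup_name("20180901-114400#2")
print(created.isoformat(), number)
```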

The backup contains a copy of:

- the rem_home directory (which contains the plugins)
- the rem.war web application archive
- the rem-rmi.jar file

rollback-rem

The rollback-rem command reverts a REM installation to a previous backup, by stopping Tomcat, copying the files in the backup into place, and restarting Tomcat. Its syntax is

./orca --hosts remhost1 rollback-rem [--target N]

The --target parameter is optional and specifies the number of the backup (that appears in the backup’s name after the # symbol) to roll back to. If not specified, orca defaults to the highest-numbered backup, which is likely to be the most recent one.

Because REM is not clustered in the same way as Rhino nodes are, installations and backups may differ between REM nodes.

As such, to avoid unexpected results, the --target parameter can only be used if exactly one host is specified.
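The target selection rules for rollback-rem can be modelled as follows. This is an illustrative sketch, not orca's implementation: pick_rollback_target is a hypothetical helper that takes the backup directory names found on a host and applies the default and the single-host restriction described above.

```python
def pick_rollback_target(backup_names, hosts, target=None):
    """Return the backup number to roll back to.

    backup_names: directory names of the form <timestamp>#<number>
    hosts: the hosts the command is run against
    target: the value of --target, or None to use the default
    """
    numbers = sorted(int(name.rsplit("#", 1)[1]) for name in backup_names)
    if not numbers:
        raise ValueError("no backups available")
    if target is None:
        return numbers[-1]  # default: the highest-numbered backup
    if len(hosts) != 1:
        raise ValueError("--target can only be used with exactly one host")
    if target not in numbers:
        raise ValueError(f"no backup numbered {target}")
    return target


backups = ["20180901-114400#2", "20180815-093000#1"]
print(pick_rollback_target(backups, ["remhost1"]))
```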

cleanup-rem

The cleanup-rem command deletes unwanted REM backup directories from a host. Its syntax is

./orca --hosts remhost1 cleanup-rem --backups N[,N,N...]

There is one mandatory parameter, --backups, which specifies the backup(s) to clean up by their number(s) (that appear in the backups' names after the # symbol), comma-separated without any spaces.

Because REM is not clustered in the same way as Rhino nodes are, installations and backups may differ between REM nodes.

As such, to avoid unexpected results, the cleanup-rem command can only be used on one host at a time.

run

The run command allows the user to run a custom command on each host. It takes one mandatory argument <command>, where <command> is the command to run on each host (if it contains spaces or other characters that may be interpreted by the shell, be sure to quote it).

It also takes one optional argument --source <file>, where <file> is a script or file to upload before executing the command.

(Files specified in this way are uploaded to a temporary directory, and so the command may need to move it to its correct location.)
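When run is invoked from a script rather than typed at a shell, the quoting concern can be avoided by passing the remote command as a single list element. A minimal sketch, assuming a hypothetical helper named run_argv; only the run command and its --source option come from the description above.

```python
import shlex


def run_argv(hosts, remote_command, source=None, orca="./orca"):
    """Build an argv list for the run command, keeping the remote command as one argument."""
    argv = [orca, "--hosts", ",".join(hosts), "run", remote_command]
    if source is not None:
        argv += ["--source", source]
    return argv


argv = run_argv(["VM1", "VM2"], "ls -lrt /home/rhino")
# shlex.join shows how the same call would need to be quoted at a shell prompt.
print(shlex.join(argv))
```

Passing this list to subprocess.run without shell=True delivers the remote command to orca unchanged, whatever characters it contains.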

For example:

./orca --hosts VM1,VM2 run "ls -lrt /home/rhino"

./orca --hosts VM1,VM2 run "mv file.zip /home/rhino; cd /home/rhino; unzip file.zip" --source /path/to/file.zip

Note on symmetric activation state for upgrades

Symmetric activation state mode must be enabled before starting a major or minor upgrade. This means that all services will be forced to have the same state across all nodes in the cluster. This ensures that all services start correctly on all nodes after the upgrade process completes. If your cluster normally operates with symmetric activation state mode disabled, it will need to be manually disabled after the upgrade and related maintenance operations are complete.

See Activation State in the Rhino documentation.

Note on Rhino 2.6.x export

The format of Rhino exports changed between Rhino 2.5.x and Rhino 2.6.x.

More specifically, Rhino 2.6.x exports now include data for each SLEE namespace and include SAS bundle configuration.

orca restores configuration only for the default namespace.

Sentinel products are expected to have just the default namespace; if there is more than one namespace orca will raise an error.

orca does not restore the SAS configuration when doing a minor upgrade.

The new product version being installed as part of the minor upgrade includes all the necessary SAS bundles.

For Rhino documentation about namespaces see Namespaces.

For Rhino SAS configuration see MetaView Service Assurance Server (SAS) Tracing

Troubleshooting

See Troubleshooting.