What is the SGC TCAP Stack?

The SGC TCAP Stack is a Java component that is embedded in the CGIN Unified RA and the IN Scenario Pack to provide OCSS7 support. The TCAP stack communicates with the SGC via a proprietary protocol.

For a general description of the TCAP Stack Interface defined by the CGIN Unified RA, please see Inside the CGIN Connectivity Pack.

TCAP Stack and SGC Stack cooperation

After the CGIN Unified RA is activated, the SGC TCAP stack uses one of two procedures to establish communication with the SGC stack:

- The newer, and recommended, ocss7.sgcs registration process, introduced in release 1.1.0.
- The legacy ocss7.urlList registration process, supported by releases up to and including 2.1.0.

'ocss7.sgcs' Connection Method

This connection method:

- automatically load balances all traffic between all available SGCs; and
- introduces dialog failover when an SGC becomes unavailable for any reason.

When properly configured, all TCAP stacks in the Rhino cluster will be connected to all available SGCs in the OCSS7 cluster simultaneously, with every connection servicing traffic. This is sometimes referred to as the Meshed Connection Manager because of the fully meshed network topology it uses.

TCAP Stack registration process

After the CGIN Unified RA is activated, the SGC TCAP stack connects to all configured SGC(s). For each connection, the following steps take place:

- The TCAP stack performs a basic handshake with the SGC over the connection, negotiating the data protocol version to be used. If the SGC does not support the requested protocol version, it responds with a handshake failure message and closes the connection. If it does support the requested version, it responds with a handshake success message.
- The TCAP stack sends an extended handshake request to the SGC. This handshake includes a unique TCAP stack identifier as well as any extended features supported by the TCAP stack. At the time of writing, the supported features are ANSI_SCCP and PREFIX_MIGRATION (see ANSI SCCP and Prefix Migration for details).
- The SGC assigns a TCAP local transaction ID prefix to this connection.
- The SGC creates local state to manage the data transfer with the TCAP stack, and updates the cluster state with information about the newly connected TCAP stack.
- The SGC sends an extended handshake response to the TCAP stack, including the assigned local transaction prefix and indicating any extended features supported by the SGC.
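The registration steps above leave prefix bookkeeping to the SGC. A minimal Java sketch of that bookkeeping follows; the class PrefixAllocator, its sequential allocation, and its fixed capacity are hypothetical illustrations, not the OCSS7 implementation. Running out of free prefixes would presumably surface as a rejected handshake.

```java
import java.util.HashMap;
import java.util.Map;
import java.util.Optional;

// Hypothetical SGC-side registry handing out one distinct local transaction ID
// prefix per TCAP stack connection. Sequential allocation and the capacity
// limit are illustrative assumptions, not OCSS7 behavior.
final class PrefixAllocator {
    private final int capacity;
    private final Map<Integer, String> owners = new HashMap<>(); // prefix -> TCAP stack id
    private int next = 0;

    PrefixAllocator(int capacity) {
        this.capacity = capacity;
    }

    /** Assigns a free prefix to a new connection; empty means the handshake should fail. */
    synchronized Optional<Integer> assign(String tcapStackId) {
        for (int i = 0; i < capacity; i++) {
            int candidate = (next + i) % capacity;
            if (!owners.containsKey(candidate)) {
                owners.put(candidate, tcapStackId);
                next = (candidate + 1) % capacity;
                return Optional.of(candidate);
            }
        }
        return Optional.empty();
    }

    /** Frees a prefix once nothing uses it any more. */
    synchronized void release(int prefix) {
        owners.remove(prefix);
    }

    /** Which TCAP stack (by its unique identifier) a prefix belongs to, if any. */
    synchronized Optional<String> ownerOf(int prefix) {
        return Optional.ofNullable(owners.get(prefix));
    }
}
```

Because each connection gets its own prefix, the prefix carried in a transaction ID is enough to identify the connection a dialog belongs to, which is what Prefix Migration relies on.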

At this point, the TCAP stack is operational and can both send and receive TCAP traffic.

Load balancing TCAP stack data

Dialogs are load balanced across all active connections between the SGCs and the TCAP stacks. An SGC that receives traffic from the SS7 network will round-robin new dialogs between all TCAP stacks that have an active connection to it. Similarly, each TCAP stack will round-robin new outgoing dialogs between the SGCs it is connected to.
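Round-robin selection of this kind can be sketched in Java as below. RoundRobinSelector is a hypothetical name, and the code only illustrates the technique the text names; it is not taken from OCSS7. Only new dialogs are balanced: an existing dialog stays on the connection it started on.

```java
import java.util.List;
import java.util.concurrent.CopyOnWriteArrayList;
import java.util.concurrent.atomic.AtomicInteger;

// Hypothetical round-robin selector over currently active connections. The same
// idea applies on both sides: a TCAP stack choosing an SGC for a new outgoing
// dialog, and an SGC choosing a TCAP stack for a new incoming dialog.
final class RoundRobinSelector<C> {
    private final List<C> active = new CopyOnWriteArrayList<>();
    private final AtomicInteger counter = new AtomicInteger();

    void connectionUp(C connection) {
        active.add(connection);
    }

    void connectionDown(C connection) {
        active.remove(connection);
    }

    /** Returns the connection for the next new dialog, or null if none is active. */
    C nextForNewDialog() {
        List<C> snapshot = List.copyOf(active); // stable view while picking
        if (snapshot.isEmpty()) {
            return null;
        }
        // floorMod keeps the index valid even after the counter overflows
        int index = Math.floorMod(counter.getAndIncrement(), snapshot.size());
        return snapshot.get(index);
    }
}
```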

If a configured connection is down for any reason the TCAP stack will attempt to re-establish it at regular intervals until successful.
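A fixed-interval retry loop like the one described can be sketched with the standard java.util.concurrent scheduler. Reconnector and its attempt callback are hypothetical, and any interval passed in is an arbitrary example value rather than an OCSS7 default.

```java
import java.util.concurrent.CompletableFuture;
import java.util.concurrent.Executors;
import java.util.concurrent.ScheduledExecutorService;
import java.util.concurrent.TimeUnit;
import java.util.function.BooleanSupplier;

// Hypothetical retry loop: one connection attempt per interval until one succeeds.
final class Reconnector {
    private final ScheduledExecutorService scheduler =
            Executors.newSingleThreadScheduledExecutor();

    /** Returns a future that completes when the attempt first returns true. */
    CompletableFuture<Void> start(BooleanSupplier attempt, long intervalMs) {
        CompletableFuture<Void> connected = new CompletableFuture<>();
        schedule(attempt, intervalMs, 0, connected); // first attempt immediately
        return connected;
    }

    private void schedule(BooleanSupplier attempt, long intervalMs, long delayMs,
                          CompletableFuture<Void> connected) {
        scheduler.schedule(() -> {
            boolean ok;
            try {
                ok = attempt.getAsBoolean();
            } catch (RuntimeException e) {
                ok = false; // a failed attempt is simply retried
            }
            if (ok) {
                connected.complete(null);
            } else if (!connected.isDone()) { // stop if the caller cancelled
                schedule(attempt, intervalMs, intervalMs, connected);
            }
        }, delayMs, TimeUnit.MILLISECONDS);
    }

    void shutdown() {
        scheduler.shutdownNow();
    }
}
```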

In some OA&M or failure situations an SGC may have no TCAP stack connections. When this happens the SGC will route traffic to the other SGCs in the cluster for forwarding to the TCAP stacks. Traffic will remain balanced across the TCAP stacks.

If a new SGC with a new connection address needs to be added to the cluster, then the TCAP stack configuration will need to be adjusted.

This adjustment can be done either before or after the SGC is installed and configured, and the cluster will behave as described above.

Updates to the TCAP stack ocss7.sgcs list can be made while the system is active and processing traffic. Existing connections are not affected unless they are removed from the list, and the TCAP stack will attempt to establish any newly configured connections.
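Conceptually, applying an updated ocss7.sgcs list amounts to a set difference between the old and new entries. The sketch below uses a hypothetical class, SgcListReconciler, and treats each entry as an opaque string because the exact ocss7.sgcs entry format is not reproduced here.

```java
import java.util.Arrays;
import java.util.LinkedHashSet;
import java.util.Set;
import java.util.stream.Collectors;

// Hypothetical reconciliation of a reconfigured SGC list with live connections.
final class SgcListReconciler {
    static final class Plan {
        final Set<String> toClose; // removed from the list: close these
        final Set<String> toOpen;  // newly listed: try to connect to these

        Plan(Set<String> toClose, Set<String> toOpen) {
            this.toClose = toClose;
            this.toOpen = toOpen;
        }
    }

    /** Splits a comma-separated property value into trimmed, non-empty entries. */
    static Set<String> parse(String commaSeparated) {
        return Arrays.stream(commaSeparated.split(","))
                .map(String::trim)
                .filter(s -> !s.isEmpty())
                .collect(Collectors.toCollection(LinkedHashSet::new));
    }

    static Plan reconcile(Set<String> current, Set<String> configured) {
        Set<String> toClose = new LinkedHashSet<>(current);
        toClose.removeAll(configured);
        Set<String> toOpen = new LinkedHashSet<>(configured);
        toOpen.removeAll(current);
        return new Plan(toClose, toOpen); // entries in both sets are left untouched
    }
}
```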

Prefix Migration

This feature provides dialog failover for in-progress dialogs when an SGC becomes unavailable to a TCAP stack for some reason.

This feature does not provide failover between Rhino nodes when a Rhino node becomes unavailable for some reason. Failover between Rhino nodes is not available in CGIN 1.5.4 with any TCAP stack because CGIN itself does not provide replication facilities.

As detailed in TCAP Stack registration process, each connection to an SGC is allocated a TCAP local transaction ID prefix. Under normal operating conditions, each TCAP dialog continues to use the same connection between a TCAP stack and an SGC for its lifetime, with the connection identified by the prefix the connection was assigned. If, however, an SGC becomes unavailable, the Prefix Migration feature allows the prefixes for all affected connections to be distributed to the other SGCs in the cluster and attached to suitable existing TCAP stack connections (if available). Once prefix migration has completed, existing dialogs will continue via an existing connection to a different SGC.

For example, TCAP stack A is connected to SGCs 1 and 2. It has a different prefix for each connection: A1 and A2 respectively. If SGC 2 terminates unexpectedly, the prefix associated with A2 will be migrated to connection A1. All traffic received by the SGCs for that prefix will now be sent to the TCAP stack via connection A1, which is hosted on SGC 1.

It is important to note that prefixes migrate between SGCs, not TCAP stacks. For prefix migration to work there must be an existing connection between the original TCAP stack and at least one substitute SGC which is capable of accepting a new migrated prefix. An SGC may not be capable of accepting a prefix migration because of configuration and resource limitations (see below). Prefixes which cannot be allocated to a replacement SGC will be dropped and their in-flight dialogs will be lost.
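The migration rule above can be modeled as a prefix-to-connection routing table. In this hypothetical Java sketch, a prefix from the failed SGC moves only to a live connection belonging to the same TCAP stack on another SGC that still has room; otherwise it is dropped. The per-SGC limit merely stands in for configuration and resource limits such as sgc.tcap.maxMigratedPrefixes, whose exact semantics are not modeled.

```java
import java.util.ArrayList;
import java.util.HashMap;
import java.util.List;
import java.util.Map;

// Hypothetical model of prefix migration; not the OCSS7 implementation.
final class PrefixMigration {
    /** One connection between a TCAP stack and an SGC. */
    static final class Connection {
        final String tcapStack;
        final String sgc;

        Connection(String tcapStack, String sgc) {
            this.tcapStack = tcapStack;
            this.sgc = sgc;
        }
    }

    private final Map<String, Connection> routes = new HashMap<>(); // prefix -> connection
    private final Map<String, Integer> migratedPerSgc = new HashMap<>();
    private final int maxMigratedPerSgc; // stand-in for an SGC's capacity limit

    PrefixMigration(int maxMigratedPerSgc) {
        this.maxMigratedPerSgc = maxMigratedPerSgc;
    }

    void register(String prefix, Connection connection) {
        routes.put(prefix, connection);
    }

    Connection routeFor(String prefix) {
        return routes.get(prefix);
    }

    /** Re-homes the failed SGC's prefixes; returns the prefixes that had to be dropped. */
    List<String> sgcFailed(String failedSgc, List<Connection> liveConnections) {
        List<String> dropped = new ArrayList<>();
        migratedPerSgc.remove(failedSgc);
        for (String prefix : new ArrayList<>(routes.keySet())) {
            Connection old = routes.get(prefix);
            if (!old.sgc.equals(failedSgc)) {
                continue;
            }
            Connection target = null;
            for (Connection c : liveConnections) {
                boolean sameStack = c.tcapStack.equals(old.tcapStack); // prefixes never change TCAP stack
                boolean otherSgc = !c.sgc.equals(failedSgc);
                boolean hasRoom = migratedPerSgc.getOrDefault(c.sgc, 0) < maxMigratedPerSgc;
                if (sameStack && otherSgc && hasRoom) {
                    target = c;
                    break;
                }
            }
            if (target == null) {
                routes.remove(prefix); // in-flight dialogs on this prefix are lost
                dropped.add(prefix);
            } else {
                routes.put(prefix, target);
                migratedPerSgc.merge(target.sgc, 1, Integer::sum);
            }
        }
        return dropped;
    }
}
```

Applied to the A1/A2 example, a failure of SGC 2 re-routes A2's prefix onto connection A1, while a TCAP stack connected only to SGC 2 has nowhere to go and loses its prefix.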

Each active prefix in an SGC has an associated resource overhead, so migrated prefixes are only used for existing dialogs. New dialogs will not be created on a migrated prefix. Once the last dialog on a migrated prefix ends, that prefix and its associated resources are released.
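The release rule can be expressed as a per-prefix count of in-progress dialogs. MigratedPrefixTracker below is a hypothetical sketch; it deliberately offers no way to start a dialog on a tracked prefix.

```java
import java.util.HashMap;
import java.util.Map;

// Hypothetical lifecycle of a migrated prefix: it carries only the dialogs that
// existed at migration time and is released when the last of them ends.
final class MigratedPrefixTracker {
    private final Map<String, Integer> openDialogs = new HashMap<>(); // prefix -> dialogs left

    /** Records a migrated prefix with its in-progress dialog count. */
    void markMigrated(String prefix, int existingDialogs) {
        if (existingDialogs > 0) {
            openDialogs.put(prefix, existingDialogs);
        } // with no dialogs left there is nothing to hold on to
    }

    /** Call when a dialog on a migrated prefix ends; true means the prefix was released. */
    boolean dialogEnded(String prefix) {
        Integer left = openDialogs.computeIfPresent(prefix, (p, n) -> n - 1);
        if (left != null && left <= 0) {
            openDialogs.remove(prefix); // frees the prefix and its resources
            return true;
        }
        return false;
    }

    boolean isHeld(String prefix) {
        return openDialogs.containsKey(prefix);
    }
}
```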

Please note that prefix migration support requires that the SGC’s heap is configured appropriately. Please see the sgc.tcap.maxMigratedPrefixes configuration property documentation in Static SGC instance configuration for information on the amount of heap required to support prefix migration.

Failure recovery can never be perfect: network messages being processed at the time of failure will be lost, so some dialog failures must be expected.

Legacy 'ocss7.urlList' Connection Method

This is the method used by the TCAP stack and SGCs prior to release 1.1.0. It remains available to ensure backwards compatibility between TCAP stacks and the SGC. It is strongly recommended that all new installations use the newer ocss7.sgcs method instead, as it provides failover capabilities and automatic load balancing without the need for manual intervention.

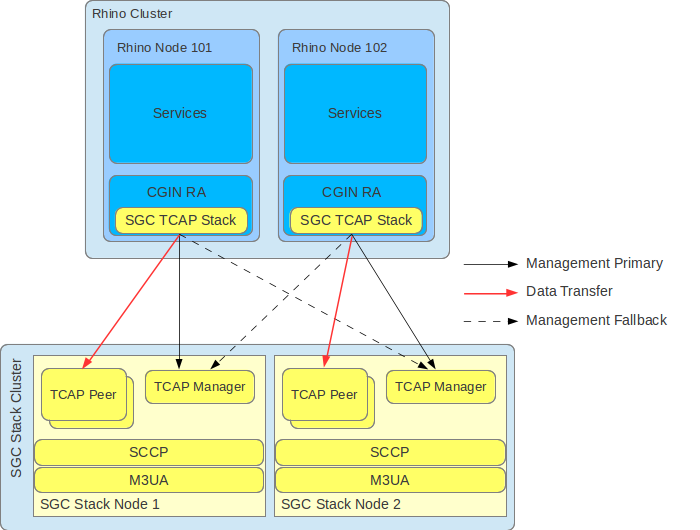

The diagram above is a high-level overview concentrating on the components directly involved in TCAP Stack and SGC Stack cooperation; descriptions of those components follow. SGC Stack components are colored yellow; components colored blue (and similar) are provided by the Rhino platform or are part of the Operating System environment.

- TCAP Manager is the software component that the TCAP Stack queries initially, to register within the SGC Stack and establish a data transfer connection.
- TCAP Peer is the software component within the SGC Stack Node that manages the data transfer connection with the TCAP Stack.

The TCAP Manager listen address is configured as part of configuring the SGC Node. For details, please see the stack-http-address and stack-http-port attributes in General Configuration.

TCAP Stack registration process

After the CGIN Unified RA is activated, the SGC TCAP Stack goes through a two-phase process to establish communication with the SGC Stack:

- Connecting to a TCAP Manager, which assigns the SGC Node where the data transfer connection should be established.
- Connecting to the assigned SGC Stack Node, to register within the SGC Cluster and establish the data transfer connection.

Detailed Stack registration steps

Phase 1

- The TCAP Stack connects to the first active TCAP Manager from a user-provided list of TCAP Manager addresses (corresponding to the nodes of the SGC Stack Cluster).
- After the connection with the TCAP Manager is established, the TCAP Stack sends a request containing information about the SSN represented by the TCAP Stack.
- The TCAP Manager selects an SGC Node within the cluster that will be responsible for managing the data transfer connection with the TCAP Stack.
- The address where the selected SGC Node listens for data transfer connections is sent back as part of the response to the TCAP Stack.
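Phase 1 amounts to walking the configured TCAP Manager list in order and stopping at the first manager that answers. In this hypothetical Java sketch, LegacyPhaseOne and its query callback stand in for the real request/response exchange, whose wire format is not described here.

```java
import java.util.List;
import java.util.Optional;
import java.util.function.BiFunction;

// Hypothetical Phase 1 of the legacy registration process.
final class LegacyPhaseOne {
    /**
     * @param managers TCAP Manager addresses, in configured (ocss7.urlList) order
     * @param ssn      the SSN represented by this TCAP Stack
     * @param query    asks one manager for a data transfer address; empty if unreachable
     */
    static Optional<String> findDataAddress(List<String> managers, int ssn,
                                            BiFunction<String, Integer, Optional<String>> query) {
        for (String manager : managers) {
            Optional<String> address = query.apply(manager, ssn);
            if (address.isPresent()) {
                return address; // first active manager wins
            }
        }
        return Optional.empty(); // no manager reachable: the caller retries later
    }
}
```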

Phase 2

- The TCAP Stack connects to the selected SGC Node and begins registration by sending a handshake message containing its SSN and the version of the data transfer protocol that it supports (this is a custom protocol used to exchange TCAP traffic between the TCAP Stack and the SGC Node; both v1.0.0 and v2.0.0 are supported in this release of OCSS7).
- The SGC Node assigns a TCAP local transaction prefix to the TCAP Stack.
- The SGC Node creates a TCAP Peer responsible for managing the data transfer connection with the TCAP Stack, and updates the cluster state with information about the newly connected TCAP Stack.
- The TCAP local transaction prefix is sent to the TCAP Stack as part of a successful handshake response.

At this stage, the TCAP Stack is operational and can both originate and receive TCAP traffic.

How the TCAP Manager selects an SGC Node

The goal of the node-selection process is to load balance the number of connected TCAP Stacks between SGC Nodes within an SGC Cluster. The TCAP Manager does that by following a very simple algorithm:

When Phase 2 registration fails…

The TCAP Stack can fail to register with the SGC Node. Possible reasons include:

To see why registration fails, check the log file.

Data transfer connection failover

After the TCAP Stack detects a data transfer connection failure, it repeats the registration process by attempting to establish a connection to one of the configured TCAP Managers. Connection attempts are made in the order in which the TCAP Manager addresses are defined in the ocss7.urlList property. This process does not differ from the standard TCAP Stack registration process, as the TCAP Manager selects one of the available SGC Nodes. For proper failover behaviour, the TCAP Stack should be configured with the TCAP Manager addresses of all nodes within the SGC Cluster.

Rebalancing TCAP Stack data transfer connections

When an SGC Node joins a running SGC Cluster (for example after a planned outage or failure), the already established TCAP Stack data transfer connections are NOT affected. That is, existing TCAP Stack data transfer connections are NOT rebalanced between SGC Nodes.

Example rebalancing procedure

This example uses a basic production deployment of an SGC Cluster, composed of two SGC Nodes cooperating with the CGIN Unified RA, deployed within a two-node Rhino cluster (as depicted above).

Imagine the following scenario:

- Due to a hardware failure, SGC Stack Node 2 was not operational. This resulted in both instances of the CGIN Unified RA being connected to SGC Stack Node 1.
- The hardware failure has been fixed, and SGC Stack Node 2 is again fully operational and part of the cluster.
- To rebalance the data transfer connections of the CGIN Unified RA entity running within the Rhino cluster, that entity must be deactivated and activated again on one of the Rhino nodes. (Deactivation is a graceful procedure where the CGIN Unified RA waits until all dialogs that it services are finished; at the same time, all new dialogs are directed to the CGIN Unified RA running within the other node.)

You would do the following:

- Make sure that the current traffic level is low enough to be handled by a CGIN Unified RA on a single Rhino node.
- Deactivate the CGIN Unified RA entity on one of the Rhino nodes.
- Wait for the deactivated CGIN Unified RA entity to become STOPPED.
- Activate the previously deactivated CGIN Unified RA entity.

Per-node activation state

For details of managing per-node activation state in a Rhino Cluster, please see Per-Node Activation State in the Rhino Administration and Deployment Guide.

TCAP Stack configuration

General description and configuration of the CGIN RA is detailed in the CGIN RA Installation and Administration Guide. Below are configuration properties specific to the SGC TCAP Stack.

| Parameter | Usage and description | Active | Values | Default |
|---|---|---|---|---|
| | Short name of the TCAP stack to use. | ✘ | for the SGC TCAP Stack, this value must be | |
| | Maximum number of threads used by the scheduler. Number of threads used to trigger timeout events. | ✘ | in the range | |
| | Number of events that may be scheduled on a single scheduler thread. If this value is set too low, then some invoke and activity timers may not fire, potentially leading to hung dialogs. The higher this value is, the higher the memory requirements of the TCAP stack. | ✘ | in the range | |
| | Maximum number of inbound messages and timeout events that may be waiting to be processed. | ✘ | in the range | |
| | Maximum number of opened transactions (dialogs). | ✘ | in the range | |
| | Number of threads used by the worker group to process timeout events and inbound messages. | ✘ | in the range | |
| | Maximum number of tasks in one worker queue. | ✘ | in the range | |
| | Maximum number of outbound messages in the sender queue. | ✘ | in the range (if less than the default value, the default value is silently used) | |
| ocss7.sgcs | Comma-separated list of SGCs to connect to. Only one of | ✔ * | comma-separated list in the format | unset |
| ocss7.urlList | Comma-separated list of URLs used to connect to legacy TCAP Manager(s). Only one of | ✔ * | comma-separated list in the format | unset |
| | Wait interval in milliseconds between subsequent connection attempts for the | ✔ | in the range; not related to single URLs on the list (but the whole list) | |
| | SSN number used when the | ✘ | in the range | |
| ocss7.heartbeatEnabled | v1.0.0 protocol variant only: enables or disables the OCSS7 to SGC connection heartbeat. N.B. if the heartbeat is enabled on the SGC, it must also be enabled here. v2.0.0 protocol variant: the heartbeat is always enabled. | ✘ | | |
| ocss7.heartbeatPeriod | The period between heartbeat sends in seconds. Heartbeat timeout is also a function of this value, as described in Data Connection Heartbeat Mechanism. The value configured here must be smaller than the value of | ✘ | | |

* If a TCAP data transfer connection is established, changing this property has no effect until the data transfer connection fails and the TCAP Stack repeats the registration process.

ANSI SCCP

From SGC version 2.1.0.x and CGIN version 1.5.4.3, the SGC and OCSS7 TCAP stack support both ITU and ANSI SCCP.

SCCP Variant

The TCAP stack’s choice of SCCP variant is configured via the local-sccp-address CGIN configuration property. A value of C7 indicates ITU SCCP, and a value of A7 indicates ANSI SCCP.

To configure the SGC, please see SCCP variant configuration.

The TCAP stack and the SGC must be configured for the same SCCP variant. Furthermore, use of ANSI SCCP requires the ocss7.sgcs connection method, as the legacy ocss7.urlList method does not support the advanced feature negotiation required to configure ANSI SCCP successfully.
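The two rules above can be captured in a small check. SccpVariantCheck is hypothetical; it maps the local-sccp-address type C7 to ITU and A7 to ANSI as described above, and also rejects ANSI when the legacy ocss7.urlList method is in use.

```java
// Hypothetical configuration check; not part of CGIN or the SGC.
final class SccpVariantCheck {
    enum Variant { ITU, ANSI }

    /** C7 indicates ITU SCCP; A7 indicates ANSI SCCP. */
    static Variant fromAddressType(String type) {
        switch (type) {
            case "C7": return Variant.ITU;
            case "A7": return Variant.ANSI;
            default: throw new IllegalArgumentException("unknown address type: " + type);
        }
    }

    static boolean supported(Variant sgcVariant, String tcapAddressType, boolean usesSgcsMethod) {
        Variant tcapVariant = fromAddressType(tcapAddressType);
        if (tcapVariant != sgcVariant) {
            return false; // both sides must use the same SCCP variant
        }
        return tcapVariant == Variant.ITU || usesSgcsMethod; // ANSI needs ocss7.sgcs
    }
}
```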

The table below shows the supported configurations:

| SGC sccp-variant | TCAP Stack local-sccp-address Type C7 | TCAP Stack local-sccp-address Type A7 |
|---|---|---|
| ITU | ✔ | ✘ |
| ANSI | ✘ | ✔ |

ANSI Point Codes

ANSI SCCP point code configuration recommendations

Care must be taken when configuring ANSI SCCP point codes via the simple integer format supported by CGIN and the SGC, because CGIN parses this value according to ANSI SCCP encoding standards, whereas the SGC parses it according to ANSI MTP/M3UA standards. The two standards differ in the order in which they process the network, cluster and member fields. Both CGIN and the SGC support specification of ANSI SCCP point codes in
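To show why the field order matters, the hypothetical Java sketch below decodes one 24-bit integer under two opposite octet orders. It does not claim which order CGIN or the SGC actually uses; it only demonstrates that the same number can name two different network-cluster-member triples.

```java
// Illustration only: one 24-bit value, two assumed octet orders. Which order
// CGIN and the SGC each apply is NOT asserted here.
final class AnsiPointCode {
    final int network;
    final int cluster;
    final int member;

    AnsiPointCode(int network, int cluster, int member) {
        this.network = network;
        this.cluster = cluster;
        this.member = member;
    }

    /** Parser assuming the network field is the most significant octet. */
    static AnsiPointCode networkFirst(int value) {
        return new AnsiPointCode((value >> 16) & 0xFF, (value >> 8) & 0xFF, value & 0xFF);
    }

    /** Parser assuming the member field is the most significant octet. */
    static AnsiPointCode memberFirst(int value) {
        return new AnsiPointCode(value & 0xFF, (value >> 8) & 0xFF, (value >> 16) & 0xFF);
    }

    @Override
    public String toString() {
        return network + "-" + cluster + "-" + member;
    }
}
```

With the value 0x010203, the first parser yields 1-2-3 and the second 3-2-1, which is why the integer form needs care when two components interpret it differently.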

SGC-TCAP stack protocols

Version 2.1.0 of OCSS7 supports two proprietary data protocols: the original v1.0.0 protocol and the newer v2.0.0 protocol. The data protocol version is negotiated automatically and is not user configurable.

v2.0.0

This is a protobuf-based protocol, running over TCP/IP. It was introduced in OCSS7 1.1.0.

Handshake

v2.0.0 uses a two-stage handshake. The first stage is the original proprietary basic handshake, used to agree on a protocol version to be used for TCAP stack - SGC communications.

Once the basic handshake has successfully negotiated the v2.0.0 protocol, the TCAP stack and SGC both switch to using this protocol for further communications.

Next, the TCAP stack sends an extended handshake to the SGC. This handshake includes:

- A list of extended features supported by the TCAP stack. At present, the available extended features are ANSI_SCCP and PREFIX_MIGRATION.
- The heartbeat period configured in the TCAP stack (TCAP stack property ocss7.heartbeatPeriod).
- An identifier that uniquely identifies this TCAP stack (this identifier will be the same for all connections originating from this TCAP stack).

The SGC determines whether the TCAP stack connection can be accepted and, if it can, sends an extended handshake success response which includes:

- A list of extended features supported by the SGC. At present, the available extended features are ANSI_SCCP and PREFIX_MIGRATION.
- The prefix assigned to this connection, to be used to allocate transaction IDs for any new dialogs sent or received over this connection.

If the TCAP stack connection cannot be accepted, the SGC sends an extended handshake response which includes:

- The reason why the TCAP stack connection could not be accepted.
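The extended handshake exchange can be modeled as a request and a success-or-failure response. All class, field, and failure-reason names below are hypothetical, as is the assumption that a feature is used only when both sides advertise it.

```java
import java.util.EnumSet;
import java.util.Set;

// Hypothetical model of the v2.0.0 extended handshake; not the OCSS7 wire format.
final class ExtendedHandshake {
    enum Feature { ANSI_SCCP, PREFIX_MIGRATION }

    static final class Request {
        final Set<Feature> features;
        final long heartbeatPeriod; // copied from ocss7.heartbeatPeriod
        final String stackId;       // identical for every connection from one TCAP stack

        Request(Set<Feature> features, long heartbeatPeriod, String stackId) {
            this.features = features;
            this.heartbeatPeriod = heartbeatPeriod;
            this.stackId = stackId;
        }
    }

    static final class Response {
        final boolean success;
        final Set<Feature> sgcFeatures; // success only
        final Integer prefix;           // success only
        final String failureReason;     // failure only

        private Response(boolean success, Set<Feature> sgcFeatures, Integer prefix, String reason) {
            this.success = success;
            this.sgcFeatures = sgcFeatures;
            this.prefix = prefix;
            this.failureReason = reason;
        }
    }

    /** SGC side; freePrefix is null when no prefix can be allocated. */
    static Response handle(Request request, Set<Feature> sgcFeatures, Integer freePrefix) {
        if (request.stackId == null || request.stackId.isEmpty()) {
            return new Response(false, null, null, "missing TCAP stack identifier");
        }
        if (freePrefix == null) {
            return new Response(false, null, null, "no transaction ID prefix available");
        }
        return new Response(true, sgcFeatures, freePrefix, null);
    }

    /** Assumption: only features advertised by both sides are used on the connection. */
    static Set<Feature> agreed(Set<Feature> stackFeatures, Set<Feature> sgcFeatures) {
        Set<Feature> common = EnumSet.noneOf(Feature.class);
        common.addAll(stackFeatures);
        common.retainAll(sgcFeatures);
        return common;
    }
}
```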

Heartbeat

The data connection between the TCAP stack and SGC features a configurable heartbeat consisting of:

- A heartbeat request/response message pair
- TCAP stack side timeout detection
- SGC side timeout detection

The heartbeat period is configured in the TCAP stack by setting the TCAP stack ocss7.heartbeatPeriod to the desired value (in ms), and automatically communicated to the SGC by the TCAP stack during the extended handshake.

The TCAP stack will send a HeartbeatPing message at the configured interval. On receipt of a HeartbeatPing the SGC will send a HeartbeatPong.

If the SGC does not receive a HeartbeatPing message within 2 * ocss7.heartbeatPeriod ms of the last HeartbeatPing or the extended handshake completing, it will mark the heartbeat as having failed and close the connection to the TCAP stack.

If the TCAP stack does not receive a HeartbeatPong message before sending its next ping (i.e. within ocss7.heartbeatPeriod ms), it will mark the heartbeat as having failed and close its connection to the SGC.
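The two v2.0.0 timeout rules can be written as simple predicates. HeartbeatV2 is hypothetical; timestamps and the heartbeat period are plain longs in one shared unit, left open here.

```java
// Hypothetical predicates for the v2.0.0 heartbeat timeout rules described above.
final class HeartbeatV2 {
    /** SGC side: failed if no ping arrived within 2 * period of the last ping or handshake end. */
    static boolean sgcTimedOut(long now, long lastPingOrHandshake, long heartbeatPeriod) {
        return now - lastPingOrHandshake > 2 * heartbeatPeriod;
    }

    /** TCAP stack side, checked when the next ping is due: failed if the last ping got no pong. */
    static boolean stackTimedOut(long lastPingSent, long lastPongReceived) {
        return lastPongReceived < lastPingSent;
    }
}
```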

v1.0.0

This is the original proprietary protocol used by OCSS7 version 1.0.0.x and 1.0.1.x. OCSS7 1.1.0.x also supports this protocol version to ensure backwards compatibility with older clients/servers.

Handshake

This protocol uses a basic handshake to establish the connection between the TCAP stack and the SGC. This handshake includes a protocol version field, which will be set to v1.0.0. If the SGC cannot accept this connection (for example, because of an unsupported protocol version or insufficient available resources), it will send a handshake failure response.

If the SGC can accept this connection it will send a handshake success response which includes the prefix allocated for this connection.

Data Connection Heartbeat Mechanism

The data connection between the TCAP stack and SGC supports a heartbeat mechanism that can be used to detect failed connections in a timely manner. This mechanism consists of three components:

- A heartbeat request/response message pair
- TCAP stack side timeout detection
- SGC side timeout detection

The heartbeat request/response message pair is enabled by setting the TCAP stack ocss7.heartbeatEnabled property to true.

This configures the TCAP stack to send heartbeat requests. The SGC will respond to any heartbeat request with a heartbeat response message, regardless of SGC configuration. The frequency with which the heartbeat request message is sent (and therefore also the heartbeat response) is configured with the TCAP stack’s ocss7.heartbeatPeriod configuration parameter.

If heartbeats are enabled in the TCAP stack, then the TCAP stack will automatically perform timeout detection. If a heartbeat response message hasn’t been seen within a period of time equal to twice the heartbeat period (2 * ocss7.heartbeatPeriod), the connection will be marked as defective and closed, allowing the TCAP stack to select a new SGC data connection.

The SGC stack may also perform timeout detection; this is controlled by the SGC properties com.cts.ss7.commsp.heartbeatEnabled and com.cts.ss7.commsp.server.recvTimeout. When the heartbeatEnabled property is set to true the SGC will close a connection if a heartbeat request hasn’t been received from that TCAP stack in the last recvTimeout seconds.

It is important to note that if the SGC stack is configured to perform timeout detection then every TCAP stack connecting to it must also be configured to generate heartbeats. If a heartbeat-disabled TCAP stack connects to a heartbeat-enabled SGC the SGC will close the connection after recvTimeout seconds, resulting in an unstable TCAP stack to SGC connection.
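The misconfiguration described above lends itself to a simple pre-deployment check. HeartbeatV1Config is a hypothetical helper, not part of OCSS7; it also encodes the v1.0.0 TCAP-side rule that a connection is defective after 2 * ocss7.heartbeatPeriod without a heartbeat response.

```java
// Hypothetical v1.0.0 heartbeat helper; not part of OCSS7.
final class HeartbeatV1Config {
    /**
     * @param sgcHeartbeatEnabled   the SGC's com.cts.ss7.commsp.heartbeatEnabled
     * @param stackHeartbeatEnabled the TCAP stack's ocss7.heartbeatEnabled
     * @return a problem description, or null if the pairing is stable
     */
    static String check(boolean sgcHeartbeatEnabled, boolean stackHeartbeatEnabled) {
        if (sgcHeartbeatEnabled && !stackHeartbeatEnabled) {
            // the SGC expects pings that never come and drops the connection
            return "SGC will close the connection after recvTimeout seconds; enable ocss7.heartbeatEnabled";
        }
        return null; // an SGC without detection still answers pings
    }

    /** TCAP stack side: defective if no response within 2 * ocss7.heartbeatPeriod. */
    static boolean stackTimedOut(long now, long lastResponse, long heartbeatPeriod) {
        return now - lastResponse > 2 * heartbeatPeriod;
    }
}
```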