This manual is a guide for configuring and upgrading the TSN, ShCM, MAG, MMT CDMA, and SMO nodes as virtual machines on OpenStack or VMware vSphere.

- Notices

- Changelogs

- Introduction

- VM types

- Installation and upgrades

- Installation and upgrades overview

- Installation or upgrades on OpenStack

- Installation on OpenStack

- Automatic rolling upgrades and patches with SIMPL VM on OpenStack

- Rolling upgrades with SIMPL VM

- Rolling upgrades using CSAR EFIX patch with SIMPL VM

- Setting up for a rolling upgrade using CSAR EFIX patch

- Rolling CSAR EFIX patch TSN nodes on OpenStack

- Rolling CSAR EFIX patch ShCM nodes on OpenStack

- Rolling CSAR EFIX patch MAG nodes on OpenStack

- Rolling CSAR EFIX patch MMT CDMA nodes on OpenStack

- Rolling CSAR EFIX patch SMO nodes on OpenStack

- Post rolling upgrade using CSAR EFIX patch steps

- Installation or upgrades on VMware vSphere

- Installation on VMware vSphere

- Automatic rolling upgrades and patches with SIMPL VM on VMware vSphere

- Rolling upgrades with SIMPL VM

- Rolling upgrades using CSAR EFIX patch with SIMPL VM

- Setting up for a rolling upgrade using CSAR EFIX patch

- Rolling CSAR EFIX patch TSN nodes on VMware vSphere

- Rolling CSAR EFIX patch ShCM nodes on VMware vSphere

- Rolling CSAR EFIX patch MAG nodes on VMware vSphere

- Rolling CSAR EFIX patch MMT CDMA nodes on VMware vSphere

- Rolling CSAR EFIX patch SMO nodes on VMware vSphere

- Post rolling upgrade using CSAR EFIX patch steps

- Verify the state of the nodes and processes

- VM configuration

- Declarative Configuration

- rvtconfig

- Writing an SDF

- Bootstrap parameters

- Bootstrap and configuration

- REM, XCAP and BSF certificates

- SAS configuration

- Services and components

- Configuration YANG schema

- tsn-vm-pool.yang

- snmp-configuration.yang

- routing-configuration.yang

- system-configuration.yang

- traffic-type-configuration.yang

- common-configuration.yang

- shcm-service-configuration.yang

- shcm-vm-pool.yang

- sas-configuration.yang

- mag-vm-pool.yang

- bsf-configuration.yang

- naf-filter-configuration.yang

- home-network-configuration.yang

- number-analysis-configuration.yang

- mmt-cdma-vm-pool.yang

- sentinel-volte-configuration.yang

- hlr-configuration.yang

- icscf-configuration.yang

- smo-vm-pool.yang

- sgc-configuration.yang

- sentinel-ipsmgw-configuration.yang

- vm-types.yang

- Example configuration YAML files

- Example for tsn-vmpool-config.yaml

- Example for snmp-config.yaml

- Example for routing-config.yaml

- Example for system-config.yaml

- Example for shcm-vmpool-config.yaml

- Example for shcm-service-config.yaml

- Example for common-config.yaml

- Example for sas-config.yaml

- Example for mag-vmpool-config.yaml

- Example for bsf-config.yaml

- Example for naf-filter-config.yaml

- Example for home-network-config.yaml

- Example for number-analysis-config.yaml

- Example for mmt-cdma-vmpool-config.yaml

- Example for sentinel-volte-cdma-config.yaml

- Example for hlr-config.yaml

- Example for icscf-config.yaml

- Example for smo-vmpool-config.yaml

- Example for sgc-config.yaml

- Example for sentinel-ipsmgw-config.yaml

- Example for shcm-overrides.yaml

- Example for mag-overrides.yaml

- Example for mmt-cdma-overrides.yaml

- Example for smo-overrides.yaml

- Changing Cassandra data

- Connecting to MetaView Server

- SGC configuration tools

- Troubleshooting node installation

- Glossary

Notices

Copyright © 2014-2022 Metaswitch Networks. All rights reserved

This manual is issued on a controlled basis to a specific person on the understanding that no part of the Metaswitch Networks product code or documentation (including this manual) will be copied or distributed without prior agreement in writing from Metaswitch Networks.

Metaswitch Networks reserves the right to, without notice, modify or revise all or part of this document and/or change product features or specifications and shall not be responsible for any loss, cost, or damage, including consequential damage, caused by reliance on these materials.

Metaswitch and the Metaswitch logo are trademarks of Metaswitch Networks. Other brands and products referenced herein are the trademarks or registered trademarks of their respective holders.

Changelogs

4.0.0-36-1.0.0

-

rvtconfig has been updated so that it ignores specific files that may be in the rvt-config directory unnecessarily. (#386665)

-

Fully qualified table names in cqlsh queries and replaced prepared statements with parameterised simple statements. (#340635)

-

An error message is now output when incorrectly formatted override yaml files are inputted rather than a lengthy stack trace. (#381281)

-

Update MAG nginx config to add X-Ua-OpenSSL-Cipher-Suite header to XCAP server requests containing UE-nginx SSL connection cipher. (#340633)

-

Disabled reverse-DNS lookups for SSH logins on the VM. (#398999)

-

The

override.yamlfiles formmt-gsmandmmt-cdmanode types are now imcluded in thecompare-configandupload-configcomparisons. (#371373) -

The

--vm-version-sourceargument now takes the optionsdf-versionthat uses the version in the SDF for a given node. There is now a check that the inputted version matches the SDF version and an optional argument--skip-version-checkthat skips this check. (#380063)

4.0.0-34-1.0.0

-

Updated system package versions of

rsyncandopen-vm-toolsto address security vulnerabilities. -

Updated system package versions of

bpftool,kernel,perf,pythonandxzto address security vulnerabilities. -

Fixed an issue where VMs would send DNS queries for the

localhosthostname. (#206220) -

Fixed issue that meant

rvtconfig upload-configwould fail when running in an environment where the input device is not a TTY. When this case is detectedupload-configwill default to non-interactive confirmation-y. This preserves 4.0.0-26-1.0.0 (and earlier versions) in environments where an appropriate input device is not available. (#258542) -

Fixed an issue where scheduled tasks could incorrectly trigger on a reconfiguration of their schedules. (#167317)

-

Added

rvtconfig compare-configcommand and madervtconfig upload-configcheck config differences and request confirmation before upload. There is a new-fflag that can be used withupload-configto bypass the configuration comparison.-yflag can now be used withupload-configto provide non-interactive confirmation in the case that the comparison shows differences. (OPT-4517)

-

Added the rvt-gather_diags script to all node types. (#94043)

-

Increased bootstrap timeout from 5 to 15 minutes to allow time (10 minutes) to establish connectivity to NTP servers. (OPT-4917)

-

Make

rvtconfig validatenot fail if fields are present in the SDF it does not recognize. (OPT-4699) -

Added 3 new traffic schemes: "all signaling together except SIP", "all signaling together except HTTP", and "all traffic types separated". (#60997)

-

Fixed an issue where updated routing rules with the same target were not correctly applied. (#169195)

-

Scheduled tasks can now be configured to run more than once per day, week or month; and at different frequencies on different nodes. (OPT-4373)

-

Updated subnet validation to be done per-site rather than across the entire SDF deployment. (OPT-4412)

-

Fixed an issue where unwanted notification categories can be sent to SNMP targets. (OPT-4543)

-

Hardened linkerd by closing the prometheus stats port and changing the proxy port to listen on localhost only. (OPT-4840)

-

Added an optional node types field in the routing rules YAML configuration. This ensures the routing rule is only attempted to apply to VMs that are of the specified node types. (OPT-4079)

-

initconfwill not exit on invalid configuration. VM will be allowed to quiesce or upload new configuration. (OPT-4389) -

rvtconfignow only uploads a single group’s configuration to that group’s entry in CDS. This means that initconf no longer fails if some other node type has invalid configuration. (OPT-4392) -

Fixed a race condition that could result in the quiescence tasks failing to run. (OPT-4468)

-

The

rvtconfig upload-configcommand now displays leader seed information as part of the printed config version summary. (OPT-3962) -

Added

rvtconfig print-leader-seedcommand to display the current leader seed for a deployment and group. (OPT-3962) -

Enum types stored in CDS cross-level refactored to string types to enable backwards compatibility. (OPT-4072)

-

Updated system package versions of

bind,dhclient,dhcp,bpftool,libX11,linux-firmware,kernel,nspr,nss,openjdkandperfto address security vulnerabilities. (OPT-4332) -

Made

ip-address.ipfield optional during validation for non-RVT VNFCs. RVT and Custom VNFCs will still require the field. (OPT-4532) -

Fix SSH daemon configuration to reduce system log sizes due to error messages. (OPT-4538)

-

Allowed the primary user’s password to be configured in the product options in the SDF. (OPT-4448)

-

Updated system package version of

glib2to address security vulnerabilities. (OPT-4198) -

Updated NTP services to ensure the system time is set correctly on system boot. (OPT-4204)

-

Include deletion of leader-node state in rvtconfig delete-node-type, resolving an issue where the first node deployed after running that command wouldn’t deploy until the leader was re-deployed. (OPT-4213)

-

Rolled back SIMPL support to 6.6.3. (OPT-43176)

-

Disk and service monitor notification targets that use SNMPv3 are now configured correctly if both SNMPv2c and SNMPv3 are enabled. (OPT-4054)

-

Fixed issue where initconf would exit (and restart 15 minutes later) if it received a 400 response from the MDM. (OPT-4106)

-

The Sentinel GAA Cassandra keyspace is now created with a replication factor of 3. (OPT-4080)

-

snmptrapdis now enabled even if no targets are configured for system monitor notifications, in order to log any notifications that would have been sent. (OPT-4102) -

Fixed bug where the SNMPv3 user’s authentication and/or privacy keys could not be changed. (OPT-4102)

-

Making SNMPv3 queries to the VMs now requires encryption. (OPT-4102)

-

Fixed bug where system monitor notification traps would not be sent if SNMPv3 is enabled but v2c is not. Note that these traps are still sent as v2c only, even when v2c is not otherwise in use. (OPT-4102)

-

Removed support for the

signalingandsignaling2traffic type names. All traffic types should now be specified using the more granular names, such asss7. Refer to the pageTraffic types and traffic schemesin the Install Guide for a list of available traffic types. (OPT-3820) -

Ensured

ntpdis in slew mode, but always step the time on boot before Cassandra, Rhino and OCSS7 start. (OPT-4131, OPT-4143)

4.0.0-14-1.0.0

-

Changed the

rvtconfig delete-node-typecommand to also delete OID mappings as well as all virtual machine events for the specified version from cross-level group state. (OPT-3745) -

Fixed systemd units so that

systemddoes not restart Java applications after asystemctl kill. (OPT-3938) -

Added additional validation rules for traffic types in the SDF. (OPT-3834)

-

Increased the severity of SNMP alarms raised by the disk monitor. (OPT-3987)

-

Added

--cds-addressand--cds-addressesaliases for the-cparameter inrvtconfig. (OPT-3785)

4.0.0-13-1.0.0

-

Added support for separation of traffic types onto different network interfaces. (OPT-3818)

-

Improved the validation of SDF and YAML configuration files, and the errors reported when validation fails. (OPT-3656)

-

Added logging of the instance ID of the leader while waiting during initconf. (OPT-3558)

-

Do not use YAML anchors/aliases in the example SDFs. (OPT-3606)

-

Fixed a race condition that could cause initconf to hang indefinitely. (OPT-3742)

-

Improved error reporting in

rvtconfig. -

Updated SIMPL VM dependency to 6.6.1. (OPT-3857)

-

Adjusted linkerd OOM score so it will no longer be terminated by the OOM killer (OPT-3780)

-

Disabled all yum repositories. (OPT-3781)

-

Disabled the TLSv1 and TLSv1.1 algorithms for Java. (OPT-3781)

-

Changed initconf to treat the reload-resource-adaptors flag passed to rvtconfig as an intrinsic part of the configuration, when determining if the configuration has been updated. (OPT-3766)

-

Updated system package versions of

bind,bpftool,kernel,nettle,perfandscreento address security vulnerabilities. (OPT-3874) -

Added an option to

rvtconfig dump-configto dump the config to a specified directory. (OPT-3876) -

Fixed the confirmation prompt for

rvtconfig delete-node-typeandrvtconfig delete-deploymentcommands when run on the SIMPL VM. (OPT-3707) -

Corrected a regression and a race condition that prevented configuration being reapplied after a leader seed change. (OPT-3862)

4.0.0-9-1.0.0

-

All SDFs are now combined into a single SDF named

sdf-rvt.yaml. (OPT-2286) -

Added the ability to set certain OS-level (kernel) parameters via YAML configuration. (OPT-3403)

-

Updated to SIMPL 6.5.0. (OPT-3358, OPT-3545)

-

Make the default gateway optional for the clustering interface. (OPT-3417)

-

initconfwill no longer block startup of a configured VM if MDM is unavailable. (OPT-3206) -

Enforce a single secrets-private-key in the SDF. (OPT-3441)

-

Made the message logged when waiting for config be more detailed about which parameters are being used to determine which config to retrieve. (OPT-3418)

-

Removed image name from example SDFs, as this is derived automatically by SIMPL. (OPT-3485)

-

Make

systemctl statusoutput for containerised services not print benign errors. (OPT-3407) -

Added a command

delete-node-typeto facilitate re-deploying a node type after a failed deployment. (OPT-3406) -

Updated system package versions of

glibc,iwl1000-firmware,net-snmpandperlto address security vulnerabilities. (OPT-3620)

4.0.0-8-1.0.0

-

Fix bug (affecting 4.0.0-7-1.0.0 only) where rvtconfig was not reporting the public version string, but rather the internal build version (OPT-3268).

-

Update sudo package for CVE-2021-3156 vulnerability (OPT-3497)

-

Validate the product-options for each node type in the SDF. (OPT-3321)

-

Clustered MDM installations are now supported. Initconf will failover across multiple configured MDMs. (OPT-3181)

4.0.0-7-1.0.0

-

If YAML validation fails, print the filename where an error was found alongside the error. (OPT-3108)

-

Improved support for backwards compatibility with future CDS changes. (OPT-3274)

-

Change the

report-initconfscript to check for convergence since the last time config was received. (OPT-3341) -

Improved exception handling when CDS is not available. (OPT-3288)

-

Change rvtconfig upload-config and rvtconfig initial-configure to read the deployment ID from the SDFs and not a command line argument. (OPT-3111)

-

Publish imageless CSARs for all node types. (OPT-3410)

-

Added message to initconf.log explaining some Cassandra errors are expected. (OPT-3081)

-

Updated system package versions of

bpftool,dbus,kernel,nss,opensslandperfto address security vulnerabilities.

4.0.0-6-1.0.0

-

Updated to SIMPL 6.4.3. (OPT-3254)

-

When using a release version of

rvtconfig, the correctthis-rvtconfigversion is now used. (OPT-3268) -

All REM setup is now completed before restarting REM, to avoid unnecessary restarts. (OPT-3189)

-

Updated system package versions of

bind-*,curl,kernel,perfandpython-*to address security vulnerabilities. (OPT-3208) -

Added support for routing rules on the Signaling2 interface. (OPT-3191)

-

Configured routing rules are now ignored if a VM does not have that interface. (OPT-3191)

-

Added support for absolute paths in

rvtconfigCSAR container. (OPT-3077) -

The existing Rhino OIDs are now always imported for the current version. (OPT-3158)

-

Changed behaviour of

initconfto not restart resource adaptors by default, to avoid an unexpected outage. A restart can be requested using the--reload-resource-adaptorsparameter torvtconfig upload-config. (OPT-2906) -

Changed the SAS resource identifier to match the provided SAS resource bundles. (OPT-3322)

-

Added information about MDM and SIMPL to the documentation. (OPT-3074)

4.0.0-4-1.0.0

-

Added

list-configanddescribe-configoperations torvtconfigto list configurations already in CDS and describe the meaning of the specialthis-vmandthis-rvtconfigvalues. (OPT-3064) -

Renamed

rvtconfig initial-configuretorvtconfig upload-config, with the old command remaining as a synonym. (OPT-3064) -

Fixed

rvtconfig pre-upgrade-init-cdsto create a necessary table for upgrades from 3.1.0. (OPT-3048) -

Fixed crash due to missing Cassandra tables when using

rvtconfig pre-upgrade-init-cds. (OPT-3094) -

rvtconfig pre-upgrade-init-cdsandrvtconfig push-pre-upgrade-statenow supports absolute paths in arguments. (OPT-3094) -

Reduced timeout for DNS server failover. (OPT-2934)

-

Updated

rhino-node-idmax to 32767. (OPT-3153) -

Diagnostics at the top of

initconf.lognow include system version and CDS group ID. (OPT-3056) -

Random passwords for the Rhino client and server keystores are now generated and stored in CDS. (OPT-2636)

-

Updated to SIMPL 6.4.0. (OPT-3179)

-

Increased the healthcheck and decommision timeouts to 20 minutes and 15 minutes respectively. (OPT-3143)

-

Updated example SDFs to work with MDM 2.28.0, which is now the supported MDM version. (OPT-3028)

-

Added support to

report-initconffor handling rolled overinitconf-json.logfiles. The script can now read historic log files when building a report if necessary. (OPT-1440) -

Fixed potential data loss in Cassandra when doing an upgrade or rollback. (OPT-3004)

Introduction

This manual describes the configuration and upgrade of Rhino VoLTE TAS VMs.

Introduction to the Rhino VoLTE TAS product

The Rhino VoLTE TAS solution consists of a number of types of VMs that perform various IMS TAS functions. These nodes are deployed to an OpenStack or VMware vSphere host.

Most nodes' software is based on the Rhino Telecoms Application Server platform. Each VM type runs in a cluster for redundancy, and understands that it is part of the overall solution, so will configure itself with relevant settings from other VMs where appropriate.

Installation

Installation is the process of deploying VMs onto your host. The Rhino VoLTE TAS VMs must be installed using the SIMPL VM, which you will need to deploy manually first, using instructions from the SIMPL VM Documentation.

The SIMPL VM allows you to deploy VMs in an automated way. By writing a Solution Definition File (SDF), you describe to the SIMPL VM the number of VMs in your deployment and their properties such as hostnames and IP addresses. Software on the SIMPL VM then communicates with your VM host to create and power on the VMs.

The SIMPL VM deploys images from packages known as CSARs (Cloud Service Archives), which contain a VM image in the format the host would recognize, such as .ova for VMware vSphere, as well as ancillary tools and data files.

Your Metaswitch Customer Care Representative can provide you with links to CSARs suitable for your choice of appliance version and VM platform.

They can also assist you with writing the SDF.

See the Installation and upgrades overview page for detailed installation instructions.

Note that all nodes in a deployment must be configured before any of them will start to serve live traffic.

Upgrades

The Rhino VoLTE TAS nodes are designed to allow rolling upgrades with little or no service outage time. One at a time, each downlevel node is destroyed and replaced by an uplevel node. This is repeated until all nodes have been upgraded.

Configuration for the uplevel node is uploaded in advance. As nodes are recreated, they immediately pick up the uplevel configuration and resume service application.

If an upgrade goes wrong, rollback to the previous version is also supported.

As with installation, upgrades and rollbacks use the SIMPL VM.

See the Installation and upgrades overview page for detailed instructions on how to perform an upgrade.

CSAR EFIX patches

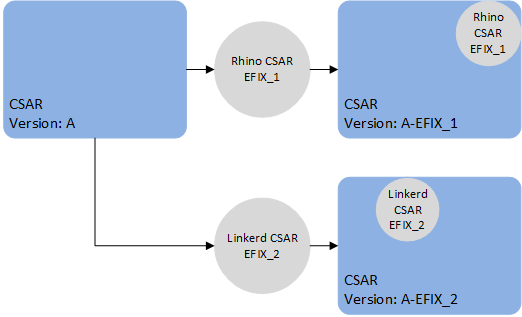

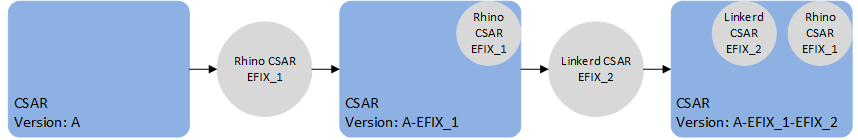

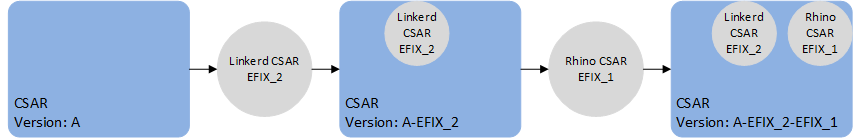

CSAR EFIX patches, also known as VM patches, are based on the SIMPL VM’s csar efix command. The command is used to combine a CSAR EFIX file (a tar file containing some metadata and files to update), and an existing unpacked CSAR on the SIMPL. This creates a new, patched CSAR on the SIMPL VM. It does not patch any VMs in-place, but instead patches the CSAR itself offline on the SIMPL VM. A normal rolling upgrade is then used to migrate to the patched version.

Once a CSAR has been patched, the newly created CSAR is entirely separate, with no linkage between them. Applying patch EFIX_1 to the original CSAR creates a new CSAR with the changes from patch EFIX_1.

In general:

-

Applying patch EFIX_2 to the original CSAR will yield a new CSAR without the changes from EFIX_1.

-

Applying EFIX_2 to the already patched CSAR will yield a new CSAR with the changes from both EFIX_1 and EFIX_2.

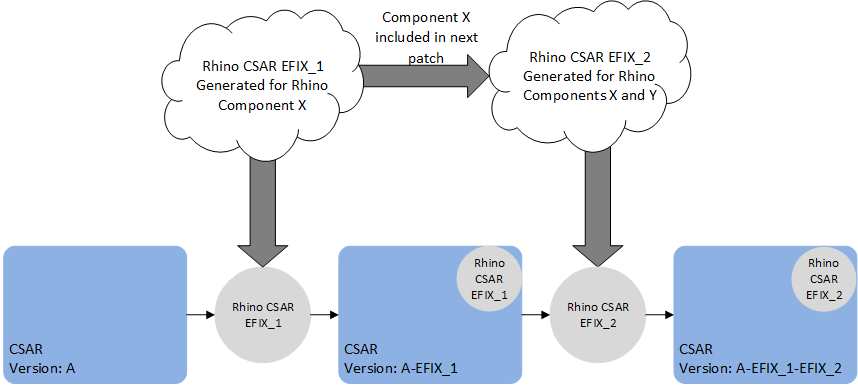

VM patches which target SLEE components (e.g. a service or feature change) contain the full deployment state of Rhino, including all SLEE components. As such, if applying multiple patches of this type, only the last such patch will take effect, because the last patch contains all the SLEE components. In other words, a patch to SLEE components should contain all the desired SLEE component changes, relative to the original release of the VM. For example, patch EFIX_1 contains a fix for the HTTP RA SLEE component X and patch EFIX_2 contains an fix for a SLEE Service component Y. When EFIX_2 is generated it will contain the component X and Y fixes for the VM.

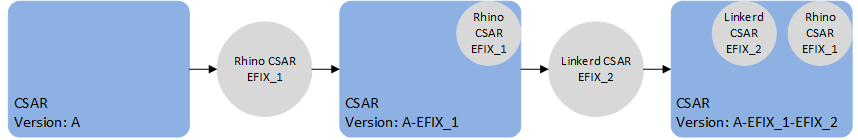

However, it is possible to apply a specific patch with a generic CSAR EFIX patch that only contains files to update. For example, patch EFIX_1 contains a specific patch that contains a fix for the HTTP RA SLEE component, and patch EFIX_2 contains an update to the linkerd config file. We can apply patch EFIX_1 to the original CSAR, then patch EFIX_2 to the patched CSAR.

We can also apply EFIX_2 first then EFIX_1.

| |

When a CSAR EFIX patch is applied, a new CSAR is created with the versions of the target CSAR and the CSAR EFIX version. |

Configuration

The configuration model is "declarative" - to change the configuration, you upload a complete set of files containing the entire configuration for all nodes, and the VMs will attempt to alter their configuration ("converge") to match. This allows for integration with GitOps (keeping configuration in a source control system), as well as ease of generating configuration via scripts.

Configuration is stored in a database called CDS, which is a set of tables in a Cassandra database. These tables contain version information, so that you can upload configuration in preparation for an upgrade without affecting the live system.

The TSN nodes provide the CDS database. The tables are created automatically when the TSN nodes start for the first time; no manual installation or configuration of Cassandra is required.

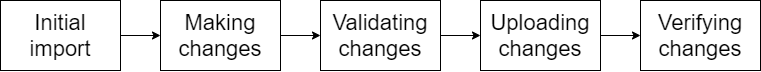

Configuration files are written in YAML format. Using the rvtconfig tool, their contents can be syntax-checked and verified for validity and self-consistency before uploading them to CDS.

See VM configuration for detailed information about writing configuration files and the (re)configuration process.

VM types

This page describes the different Rhino VoLTE TAS VM type(s) documented in this manual.

It also describes the ancillary nodes used to deploy and manage those VMs.

Node types

TSN

A TAS Storage Node (TSN) is a VM that runs two Cassandra databases and provides these databases' services to the other node types in a Rhino VoLTE TAS deployment. TSNs run in a cluster with between 3 and 30 nodes per cluster depending on deployment size; load-balancing is performed automatically.

ShCM

An Sh Cache Microservice node provides HTTP access to the HSS via Diameter Sh, as well as caching some of that data to reduce round trips to the HSS.

MAG

A Management and Authentication Gateway (MAG) node is a node that runs the XCAP server (part of Sentinel VoLTE), and Sentinel AGW, Metaswitch’s implementation of the 3GPP Generic Authentication Architecture (GAA) framework, consisting of the NAF Authentication Filter and BSF components. It also runs the Rhino Element Manager management and monitoring software. The BSF runs in Rhino, all the other components run in Apache Tomcat.

MMT CDMA

An MMTel (MMT) node is a VM that runs the Sentinel VoLTE application on Rhino. It provides both SCC and MMTel functionality. It is available in both a GSM and CDMA variant.

| |

This book documents the CDMA version of the MMT node. If you are installing a GSM deployment, please refer to the RVT VM Install Guide (GSM). |

VM sizes

Refer to the Flavors section for information on the VMs' sizing: number of vCPUs, RAM, and virtual disk.

Ancillary node types

The SIMPL VM

The SIMPL Virtual Appliance provides orchestration software to create, verify, configure, destroy and upgrade RVT instances. Following the initial deployment, you will only need the SIMPL VM to perform configuration changes, patching or upgrades - it is not required for normal operation of the RVT deployment.

Installation

SIMPL supports VM orchestration for numerous Metaswitch products, including MDM (see below). SIMPL is normally deployed as a single VM instance, though deployments involving a large number of products may require two or three SIMPL instances to hold all the VM images.

Virtual hardware requirements for the SIMPL VM can be found in the "VM specification" section of the SIMPL VM Documentation.

Metaswitch Deployment Manager (MDM)

Rhino VoLTE TAS deployments use Metaswitch Deployment Manager (MDM) to co-ordinate installation, upgrades, scale and healing (replacement of failed instances). MDM is a virtual appliance that provides state monitoring, DNS and NTP services to the deployment. It is deployed as a pool of at least three virtual machines, and can also manage other Metaswitch products that might be present in your deployment such as Service Assurance Server (SAS) and Clearwater. A single pool of VMs can manage all instances of compatible Metaswitch products you are using.

Upgrade

If you are upgrading from a deployment which already has MDM, ensure all MDM instances are upgraded before starting the upgrade of the RVT nodes. Your Customer Care Representative can provide guidance on upgrading MDM.

If you are upgrading from a deployment which does not have MDM, you must deploy MDM before upgrading any RVT nodes.

Minimum number of nodes required

For a production deployment, all the node types required are listed in the following table, along with the minimum number of nodes of each type. The exact number of nodes of each type required will depend on your projected traffic capacity and profile.

For a lab deployment, we recommend that you install all node types. However, it is possible to omit MMT, ShCM, SMO, or MAG nodes if those node types are not a concern for your lab testing.

| |

The TSNs must be included for all lab deployments, as they are required for successful configuration of other node types. |

| |

A single site can have a maximum of 7 SMO nodes. |

| Node type | Minimum nodes for production deployment | Recommended minimum nodes for lab deployment |

|---|---|---|

TSN |

3 per site |

3 for the whole deployment |

ShCM |

2 per site |

1 for the whole deployment |

MAG |

3 per site |

1 per site |

MMT CDMA |

3 per site |

1 per site |

SMO |

3 per site |

1 per site |

SIMPL |

1 for the whole deployment |

1 for the whole deployment |

MDM |

3 per site |

1 per site |

Flavors

Each node type has a set of specifications that defines RAM, storage, and CPU requirements for different deployment sizes, known as flavors. Refer to the pages of the individual node types for flavor specifications.

| |

The term |

TSN

The TSN nodes can be installed using the following flavors. This option has to be selected in the SDF. The selected option determines the values for RAM, hard disk space and virtual CPU count.

| |

The |

| Spec | Use case | Resources |

|---|---|---|

|

Lab trials and small-size production environments |

|

|

Mid-size production environments |

|

|

Large-size production environments |

|

ShCM

The ShCM nodes can be installed using the following flavors. This option has to be selected in the SDF. The selected option determines the values for RAM, hard disk space and virtual CPU count.

| Spec | Use case | Resources |

|---|---|---|

|

All deployments - this is the only supported deployment size |

|

MAG

The MAG nodes can be installed using the following flavors. This option has to be selected in the SDF. The selected option determines the values for RAM, hard disk space and virtual CPU count.

| |

The |

| Spec | Use case | Resources |

|---|---|---|

|

Lab and small-size production environments |

|

|

Mid and large-size production environments |

|

MMT CDMA

The MMT CDMA nodes can be installed using the following flavors. This option has to be selected in the SDF. The selected option determines the values for RAM, hard disk space and virtual CPU count.

| |

The |

| Spec | Use case | Resources |

|---|---|---|

|

Lab and small-size production environments |

|

|

Mid and large-size production environments |

|

SMO

The SMO nodes can be installed using the following flavors. This option has to be selected in the SDF. The selected option determines the values for RAM, hard disk space and virtual CPU count.

| |

The |

| Spec | Use case | Resources |

|---|---|---|

|

Lab and small-size production environments |

|

|

Mid and large-size production environments |

|

Installation and upgrades

- Installation and upgrades overview

- Installation or upgrades on OpenStack

- Installation on OpenStack

- Automatic rolling upgrades and patches with SIMPL VM on OpenStack

- Rolling upgrades with SIMPL VM

- Rolling upgrades using CSAR EFIX patch with SIMPL VM

- Setting up for a rolling upgrade using CSAR EFIX patch

- Rolling CSAR EFIX patch TSN nodes on OpenStack

- Rolling CSAR EFIX patch ShCM nodes on OpenStack

- Rolling CSAR EFIX patch MAG nodes on OpenStack

- Rolling CSAR EFIX patch MMT CDMA nodes on OpenStack

- Rolling CSAR EFIX patch SMO nodes on OpenStack

- Post rolling upgrade using CSAR EFIX patch steps

- Installation or upgrades on VMware vSphere

- Installation on VMware vSphere

- Automatic rolling upgrades and patches with SIMPL VM on VMware vSphere

- Rolling upgrades with SIMPL VM

- Rolling upgrades using CSAR EFIX patch with SIMPL VM

- Setting up for a rolling upgrade using CSAR EFIX patch

- Rolling CSAR EFIX patch TSN nodes on VMware vSphere

- Rolling CSAR EFIX patch ShCM nodes on VMware vSphere

- Rolling CSAR EFIX patch MAG nodes on VMware vSphere

- Rolling CSAR EFIX patch MMT CDMA nodes on VMware vSphere

- Rolling CSAR EFIX patch SMO nodes on VMware vSphere

- Post rolling upgrade using CSAR EFIX patch steps

Installation and upgrades overview

The steps below describe how to upgrade the nodes that make up your deployment. Select the steps that are appropriate for your VM host: OpenStack or VMware vSphere.

The supported versions for the platforms are listed below:

| Platform | Supported versions |

|---|---|

OpenStack |

Newton to Wallaby |

VMware vSphere |

6.7 and 7.0 |

Live migration of a node to a new VMware vSphere host or a new OpenStack compute node is not supported. To move such a node to a new host, remove it from the old host and add it again to the new host.

Preparing for an upgrade

| Task | More information |

|---|---|

Set up and/or verify your OpenStack or VMware vSphere deployment |

The installation procedures assume that you are upgrading VMs on an existing OpenStack or VMware vSphere host(s). Ensure the host(s) have sufficient vCPU, RAM and disk space capacity for the VMs. Note that for upgrades, you will temporarily need approximately one more VM’s worth of vCPU and RAM, and potentially more than double the disk space, than your existing deployment currently uses. You can later clean up older images to save disk space once you are happy that the upgrade was successful. Perform health checks on your host(s), such as checking for active alarms, to ensure they are in a suitable state to perform VM lifecycle operations. Ensure the VM host credentials that you will use in your SDF are valid and have sufficient permission to create/destroy VMs, power them on and off, change their properties, and access a VM’s terminal via the console. |

Prepare service configuration |

VM configuration information can be found at VM Configuration. |

Installation

The following table sets out the steps you need to take to install and commission your VM deployment.

Be sure you know the number of VMs you need in your deployment. At present it is not possible to change the size of your deployment after it has been created.

| Step | Task | Link |

|---|---|---|

Installation (on OpenStack) |

Prepare the SDF for the deployment |

|

Deploy SIMPL VM into OpenStack |

||

Prepare configuration files for the deployment |

||

Create the OpenStack flavors |

||

Install MDM |

||

Prepare SIMPL VM for deployment |

||

Deploy TSN nodes on OpenStack |

||

Deploy ShCM nodes on OpenStack |

||

Deploy MAG nodes on OpenStack |

||

Deploy MMT CDMA nodes on OpenStack |

||

Deploy SMO nodes on OpenStack |

||

Installation (on VMware vSphere) |

Prepare the SDF for the deployment |

|

Deploy SIMPL VM into VMware vSphere |

||

Prepare configuration files for the deployment |

||

Install MDM |

||

Prepare SIMPL VM for deployment |

||

Deploy TSN nodes on VMware vSphere |

||

Deploy ShCM nodes on VMware vSphere |

||

Deploy MAG nodes on VMware vSphere |

||

Deploy MMT CDMA nodes on VMware vSphere |

||

Deploy SMO nodes on VMware vSphere |

||

Verification |

Run some simple tests to verify that your VMs are working as expected |

Upgrades

The following table sets out the steps you need to execute a rolling upgrade of an existing VM deployment.

| Step | Task | Link |

|---|---|---|

Rolling upgrade (on OpenStack) |

Setting up for a rolling upgrade |

|

Rolling upgrade TSN nodes on OpenStack |

||

Rolling upgrade ShCM nodes on OpenStack |

||

Rolling upgrade MAG nodes on OpenStack |

||

Rolling upgrade MMT CDMA nodes on OpenStack |

||

Rolling upgrade SMO nodes on OpenStack |

||

Rolling upgrade (on OpenStack) |

Post rolling upgrade steps |

|

Rolling upgrade (on VMware vSphere) |

Setting up for a rolling upgrade |

|

Rolling upgrade TSN nodes on VMware vSphere |

||

Rolling upgrade ShCM nodes on VMware vSphere |

||

Rolling upgrade MAG nodes on VMware vSphere |

||

Rolling upgrade MMT CDMA nodes on VMware vSphere |

||

Rolling upgrade SMO nodes on VMware vSphere |

||

Rolling upgrade (on VMware vSphere) |

Post rolling upgrade steps |

|

Verification |

Run some simple tests to verify that your VMs are working as expected |

Patches

The following table sets out the steps you need to execute a patch of an existing VM deployment.

| Step | Task | Link |

|---|---|---|

Rolling upgrade using CSAR EFIX patch (on OpenStack) |

Setting up for a rolling upgrade using CSAR EFIX patch |

|

Rolling CSAR EFIX patch TSN nodes on OpenStack |

||

Rolling CSAR EFIX patch ShCM nodes on OpenStack |

||

Rolling CSAR EFIX patch MAG nodes on OpenStack |

||

Rolling CSAR EFIX patch MMT CDMA nodes on OpenStack |

||

Rolling CSAR EFIX patch SMO nodes on OpenStack |

||

Rolling upgrade using CSAR EFIX patch (on OpenStack) |

Post rolling upgrade using CSAR EFIX patch steps |

|

Rolling upgrade using CSAR EFIX patch (on VMware vSphere) |

Setting up for a rolling upgrade using CSAR EFIX patch |

|

Rolling CSAR EFIX patch TSN nodes on VMware vSphere |

||

Rolling CSAR EFIX patch ShCM nodes on VMware vSphere |

||

Rolling CSAR EFIX patch MAG nodes on VMware vSphere |

||

Rolling CSAR EFIX patch MMT CDMA nodes on VMware vSphere |

||

Rolling CSAR EFIX patch SMO nodes on VMware vSphere |

||

Rolling upgrade using CSAR EFIX patch (on VMware vSphere) |

Post rolling upgrade using CSAR EFIX patch steps |

|

Installation or upgrades on OpenStack

These pages describe how to install or upgrade the RVT nodes on OpenStack.

- Installation on OpenStack

- Automatic rolling upgrades and patches with SIMPL VM on OpenStack

- Rolling upgrades with SIMPL VM

- Rolling upgrades using CSAR EFIX patch with SIMPL VM

- Setting up for a rolling upgrade using CSAR EFIX patch

- Rolling CSAR EFIX patch TSN nodes on OpenStack

- Rolling CSAR EFIX patch ShCM nodes on OpenStack

- Rolling CSAR EFIX patch MAG nodes on OpenStack

- Rolling CSAR EFIX patch MMT CDMA nodes on OpenStack

- Rolling CSAR EFIX patch SMO nodes on OpenStack

- Post rolling upgrade using CSAR EFIX patch steps

Installation on OpenStack

These pages describe how to install the nodes on OpenStack.

Prepare the SDF for the deployment

Planning for the procedure

Background knowledge

This procedure assumes that:

-

you are installing into an existing OpenStack deployment

-

you are using an OpenStack version from Icehouse through to Train inclusive

-

you are thoroughly familiar with working with OpenStack machines and know how to set up tenants, users, roles, client environment scripts, and so on

(For more information, refer to the appropriate OpenStack installation guide for the version that you are using here.)

-

you have read the installation guidelines at Installation and upgrades overview and have everything you need to carry out the installation.

Reserve maintenance period

This procedure does not require a maintenance period. However, if you are integrating into a live network, we recommend that you implement measures to mitigate any unforeseen events.

Tools and access

This page references an external document: SIMPL VM Documentation. Ensure you have a copy available before proceeding.

Installation Questions

| Question | More information |

|---|---|

Do you have the correct CSARs? |

All virtual appliances use the naming convention - |

Do you have a list of the IP addresses that you intend to give to each node of each node type? |

Each node requires an IP address for each interface. You can find a list of the VM’s interfaces on the Network Interfaces page. |

Do you have DNS and NTP Server information? |

It is expected that the deployed nodes will integrate with the IMS Core NTP and DNS servers. |

Method of procedure

Step 1 - Extract the CSAR

This can either be done on your local Linux machine or on a SIMPL VM.

Option A - Running on a local machine

| |

If you plan to do all operations from your local Linux machine instead of SIMPL, Docker must be installed to run the rvtconfig tool in a later step. |

To extract the CSAR, run the command: unzip <path to CSAR> -d <new directory to extract CSAR to>.

Option B - Running on an existing SIMPL VM

For this step, the SIMPL VM does not need to be running on the Openstack deployment where the deployment takes place. It is sufficient to use a SIMPL VM on a lab system to prepare for a production deployment.

Transfer the CSAR onto the SIMPL VM and run csar unpack <path to CSAR>, where <path to CSAR> is the full path to the transferred CSAR.

This will unpack the CSAR to ~/.local/share/csar/.

Step 2 - Write the SDF

The Solution Definition File (SDF) contains all the information required to set up your cluster. It is therefore crucial to ensure all information in the SDF is correct before beginning the deployment. One SDF should be written per deployment.

It is recommended that the SDF is written before starting the deployment. The SDF must be named sdf-rvt.yaml.

See Writing an SDF for more detailed information.

| |

Each deployment needs a unique |

Example SDFs are included in every CSAR and can also be found at Example SDFs. We recommend that you start from a template SDF and edit it as desired instead of writing an SDF from scratch.

Deploy SIMPL VM into OpenStack

| |

Note that one SIMPL VM can be used to deploy multiple node types. Thus, this step only needs to be performed once for all node types. |

| |

The supported version of the SIMPL VM is |

Planning for the procedure

Background knowledge

This procedure assumes that:

-

you are installing into an existing OpenStack deployment

-

you are using a supported OpenStack version, as described in the 'OpenStack requirements' section of the SIMPL VM Documentation

-

you are thoroughly familiar with working with OpenStack machines and know how to set up tenants, users, roles, client environment scripts, and so on

(For more information, refer to the appropriate OpenStack installation guide for the version that you are using here.)

-

you know the IP networking information (IP address, subnet mask in CIDR notation, and default gateway) for the SIMPL VM.

Reserve maintenance period

This procedure does not require a maintenance period. However, if you are integrating into a live network, we recommend that you implement measures to mitigate any unforeseen events.

Tools and access

You must have:

-

access to a local computer with a network connection and browser access to the OpenStack Dashboard

-

administrative access to the OpenStack host machine

-

the OpenStack privileges required to deploy VMs from an image (see OpenStack documentation for specific details).

This page references an external document: the SIMPL VM Documentation. Ensure you have a copy available before proceeding.

Installation Questions

| Question | More information |

|---|---|

Do you have the correct SIMPL VM QCOW2? |

All SIMPL VM virtual appliances use the naming convention - |

Do you know the IP address that you intend to give to the SIMPL VM? |

The SIMPL VM requires one IP address, for management traffic. |

Have you created and do you know the names of the networks and security group for the nodes? |

The SIMPL VM requires a management network with an unrestricted security group. |

Method of procedure

Deploy and configure the SIMPL VM

Follow the SIMPL VM Documentation on how to deploy the SIMPL VM and set up the configuration.

Prepare configuration files for the deployment

To deploy nodes, you need to prepare configuration files that would be uploaded to the VMs.

Method of procedure

Step 1 - Create configuration YAML files

Create configuration YAML files relevant for your node type on the SIMPL VM. Store these files in the same directory as your prepared SDF.

See Example configuration YAML files for example configuration files.

Create the OpenStack flavors

About this task

This task creates the node flavor(s) that you will need when installing your deployment on OpenStack virtual machines.

| |

You must complete this procedure before you begin the installation of the first node on OpenStack, but will not need to carry it out again for subsequent node installations. |

Create your node flavor(s)

Detailed procedure

-

Run the following command to create the OpenStack flavor, replacing

<flavor name>with a name that will help you identify the flavor in future.nova flavor-create <flavor name> auto <ram_mb> <disk_gb> <vcpu_count>where:

-

<ram_mb>is the amount of RAM, in megabytes -

<disk_gb>is the amount of hard disk space, in gigabytes -

<vpu_count>is the number of virtual CPUs.Specify the parameters as pure numbers without units.

-

You can find the possible flavors in the Flavors section, and it is recommended to use the same flavor name as described there.

Some node types share flavors. If the same flavor is to be used for multiple node types, only create it once.

-

Make note of the flavor ID value provided in the command output because you will need it when installing your OpenStack deployment.

-

To check that the flavor you have just created has the correct values, run the command:

nova flavor-list -

If you need to remove an incorrectly-configured flavor (replacing <flavor name> with the name of the flavor), run the command:

nova flavor-delete <flavor name>

Install MDM

Before deploying any nodes, you will need to first install Metaswitch Deployment Manager (MDM).

Prerequisites

-

The MDM CSAR

-

A deployed and powered-on SIMPL virtual machine

-

The MDM deployment parameters (hostnames; management and signaling IP addresses)

-

Addresses for NTP, DNS and SNMP servers that the MDM instances will use

| |

The minimum supported version of MDM is |

Method of procedure

Your Customer Care Representative can provide guidance on using the SIMPL VM to deploy MDM. Follow the instructions in the SIMPL VM Documentation.

As part of the installation, you will add MDM to the Solution Definition File (SDF) with the following data:

-

certificates and keys

-

custom topology

Generation of certificates and keys

MDM requires the following certificates and keys. Refer to the MDM documentation for more details.

-

An SSH key pair (for logging into all instances in the deployment, including MDM, which does not allow SSH access using passwords)

-

A CA (certificate authority) certificate and private key (used for the server authentication side of mutual TLS)

-

A "static", also called "client", certificate and private key (used for the client authentication side of mutual TLS)

The CA private key is unused, but should be kept safe in order to generate a new static certificate and private key in the future. Add the other credentials to the SDF sdf-rvt.yaml as described in MDM service group.

Prepare SIMPL VM for deployment

Before deploying the VMs, the following files must be uploaded onto the SIMPL VM.

Upload the CSARs to the SIMPL VM

If not already done, transfer the CSARs onto the SIMPL VM. For each CSAR, run csar unpack <path to CSAR>, where <path to CSAR> is the full path to the transferred CSAR.

This will unpack the CSARs to ~/.local/share/csar/.

Upload the SDF to SIMPL VM

If the CSAR SDF was not created on the SIMPL VM, transfer the previously written CSAR SDF onto the SIMPL VM.

| |

Ensure that each version in the vnfcs section of the SDF matches each node type’s CSAR version. |

Deploy the nodes on OpenStack

To install all node types, refer to the following pages in the order below.

Deploy TSN nodes on OpenStack

Planning for the procedure

Background knowledge

This procedure assumes that:

-

you are installing into an existing OpenStack deployment

-

The OpenStack deployment must be set up with support for Heat templates.

-

-

you are using an OpenStack version from Icehouse through to Train inclusive

-

you are thoroughly familiar with working with OpenStack machines and know how to set up tenants, users, roles, client environment scripts, and so on.

(For more information, refer to the appropriate OpenStack installation guide for the version that you are using here.)

-

you have deployed a SIMPL VM, unpacked the CSAR, and prepared an SDF.

Method of procedure

| |

Refer to the SIMPL VM Documentation for details on the commands mentioned in the procedure. |

Step 1 - Check OpenStack quotas

The SIMPL VM creates one server group per VM, and one security group per interface on each VM. OpenStack sets limits on the number of server groups and security groups through quotas.

View the quota by running openstack quota show <project id> on OpenStack Controller node. This shows the maximum number of various resources.

You can view the existing server groups by running openstack server group list. Similarly, you can find the security groups by running openstack security group list

If the quota is too small to accommodate the new VMs that will be deployed, increase it by running

openstack quota set --<quota field to increase> <new quota value> <project ID>. For example:

openstack quota set --server-groups 100 125610b8bf424e61ad2aa5be27ad73bb

Step 2 - Deploy the OVA

Run csar deploy --vnf tsn --sdf <path to SDF>.

This will validate the SDF, and generate the heat template. After successful validation, this will upload the image, and deploy the number of TSN nodes specified in the SDF.

| |

Only one node type should be deployed at the same time. I.e. when deploying these TSN nodes, don’t deploy other node types at the same time in parallel. |

Step 3 - Validate TSN RVT configuration

Validate the configuration for the TSN nodes to ensure that each TSN node can properly self-configure.

To validate the configuration after creating the YAML files, run

rvtconfig validate -t tsn -i <yaml-config-file-directory>

on the SIMPL VM from the resources subdirectory of the TSN CSAR.

Step 4 - Upload TSN RVT configuration

Upload the configuration for the TSN nodes to the CDS. This will enable each TSN node to self-configure.

To upload configuration after creating the YAML files and validating them as described above, run

rvtconfig upload-config -c <tsn-mgmt-addresses> -t tsn -i <yaml-config-file-directory> (--vm-version-source this-rvtconfig | --vm-version <version>)

on the SIMPL VM from the resources subdirectory of the TSN CSAR.

See Example configuration YAML files for example configuration files.

An in-depth description of RVT YAML configuration can be found in the Rhino VoLTE TAS Configuration and Management Guide.

Backout procedure

To delete the deployed VMs, run csar delete --vnf tsn --sdf <path to SDF>.

You must also delete the MDM state for each VM. To do this, you must first SSH into one of the MDM VMs. Get the instance IDs by running: mdmhelper --deployment-id <deployment ID> instance list. Then for each TSN VM, run the following command:

curl -X DELETE -k \

--cert /etc/certs-agent/upload/mdm-cert.crt \

--cacert /etc/certs-agent/upload/mdm-cas.crt \

--key /etc/certs-agent/upload/mdm-key.key \

https://127.0.0.1:4000/api/v1/deployments/<deployment ID>/instances/<instance ID>Verify that the deletion worked by running mdmhelper --deployment-id <deployment ID> instance list again. You may now log out of the MDM VM.

Next Step

If you are upgrading a full set of VMs, go to Deploy ShCM nodes on OpenStack, otherwise follow the verification instructions here: Verify the state of the nodes and processes

Deploy ShCM nodes on OpenStack

Planning for the procedure

Background knowledge

This procedure assumes that:

-

you are installing into an existing OpenStack deployment

-

The OpenStack deployment must be set up with support for Heat templates.

-

-

you are using an OpenStack version from Icehouse through to Train inclusive

-

you are thoroughly familiar with working with OpenStack machines and know how to set up tenants, users, roles, client environment scripts, and so on.

(For more information, refer to the appropriate OpenStack installation guide for the version that you are using here.)

-

you have deployed a SIMPL VM, unpacked the CSAR, and prepared an SDF.

Method of procedure

| |

Refer to the SIMPL VM Documentation for details on the commands mentioned in the procedure. |

Step 1 - Check OpenStack quotas

The SIMPL VM creates one server group per VM, and one security group per interface on each VM. OpenStack sets limits on the number of server groups and security groups through quotas.

View the quota by running openstack quota show <project id> on OpenStack Controller node. This shows the maximum number of various resources.

You can view the existing server groups by running openstack server group list. Similarly, you can find the security groups by running openstack security group list

If the quota is too small to accommodate the new VMs that will be deployed, increase it by running

openstack quota set --<quota field to increase> <new quota value> <project ID>. For example:

openstack quota set --server-groups 100 125610b8bf424e61ad2aa5be27ad73bb

Step 2 - Validate ShCM RVT configuration

Validate the configuration for the ShCM nodes to ensure that each ShCM node can properly self-configure.

To validate the configuration after creating the YAML files, run

rvtconfig validate -t shcm -i <yaml-config-file-directory>

on the SIMPL VM from the resources subdirectory of the ShCM CSAR.

Step 3 - Upload ShCM RVT configuration

Upload the configuration for the ShCM nodes to the CDS. This will enable each ShCM node to self-configure when they are deployed in the next step.

To upload configuration after creating the YAML files and validating them as described above, run

rvtconfig upload-config -c <tsn-mgmt-addresses> -t shcm -i <yaml-config-file-directory> (--vm-version-source this-rvtconfig | --vm-version <version>)

on the SIMPL VM from the resources subdirectory of the ShCM CSAR.

See Example configuration YAML files for example configuration files.

An in-depth description of RVT YAML configuration can be found in the Rhino VoLTE TAS Configuration and Management Guide.

Step 4 - Deploy the OVA

Run csar deploy --vnf shcm --sdf <path to SDF>.

This will validate the SDF, and generate the heat template. After successful validation, this will upload the image, and deploy the number of ShCM nodes specified in the SDF.

| |

Only one node type should be deployed at the same time. I.e. when deploying these ShCM nodes, don’t deploy other node types at the same time in parallel. |

Backout procedure

To delete the deployed VMs, run csar delete --vnf shcm --sdf <path to SDF>.

You must also delete the MDM state for each VM. To do this, you must first SSH into one of the MDM VMs. Get the instance IDs by running: mdmhelper --deployment-id <deployment ID> instance list. Then for each ShCM VM, run the following command:

curl -X DELETE -k \

--cert /etc/certs-agent/upload/mdm-cert.crt \

--cacert /etc/certs-agent/upload/mdm-cas.crt \

--key /etc/certs-agent/upload/mdm-key.key \

https://127.0.0.1:4000/api/v1/deployments/<deployment ID>/instances/<instance ID>Verify that the deletion worked by running mdmhelper --deployment-id <deployment ID> instance list again. You may now log out of the MDM VM.

You must also delete state for this node type and version from the CDS prior to re-deploying the VMs. To delete the state, run rvtconfig delete-node-type --cassandra-contact-point <any TSN IP> --deployment-id <deployment ID>.

--site-id <site ID> --node-type shcm

(--vm-version-source [this-vm | this-rvtconfig] | --vm-version <vm_version>)

Next Step

If you are upgrading a full set of VMs, go to Deploy MAG nodes on OpenStack, otherwise follow the verification instructions here: Verify the state of the nodes and processes

Deploy MAG nodes on OpenStack

Planning for the procedure

Background knowledge

This procedure assumes that:

-

you are installing into an existing OpenStack deployment

-

The OpenStack deployment must be set up with support for Heat templates.

-

-

you are using an OpenStack version from Icehouse through to Train inclusive

-

you are thoroughly familiar with working with OpenStack machines and know how to set up tenants, users, roles, client environment scripts, and so on.

(For more information, refer to the appropriate OpenStack installation guide for the version that you are using here.)

-

you have deployed a SIMPL VM, unpacked the CSAR, and prepared an SDF.

Method of procedure

| |

Refer to the SIMPL VM Documentation for details on the commands mentioned in the procedure. |

Step 1 - Check OpenStack quotas

The SIMPL VM creates one server group per VM, and one security group per interface on each VM. OpenStack sets limits on the number of server groups and security groups through quotas.

View the quota by running openstack quota show <project id> on OpenStack Controller node. This shows the maximum number of various resources.

You can view the existing server groups by running openstack server group list. Similarly, you can find the security groups by running openstack security group list

If the quota is too small to accommodate the new VMs that will be deployed, increase it by running

openstack quota set --<quota field to increase> <new quota value> <project ID>. For example:

openstack quota set --server-groups 100 125610b8bf424e61ad2aa5be27ad73bb

Step 2 - Validate MAG RVT configuration

Validate the configuration for the MAG nodes to ensure that each MAG node can properly self-configure.

To validate the configuration after creating the YAML files, run

rvtconfig validate -t mag -i <yaml-config-file-directory>

on the SIMPL VM from the resources subdirectory of the MAG CSAR.

Step 3 - Upload MAG RVT configuration

Upload the configuration for the MAG nodes to the CDS. This will enable each MAG node to self-configure when they are deployed in the next step.

To upload configuration after creating the YAML files and validating them as described above, run

rvtconfig upload-config -c <tsn-mgmt-addresses> -t mag -i <yaml-config-file-directory> (--vm-version-source this-rvtconfig | --vm-version <version>)

on the SIMPL VM from the resources subdirectory of the MAG CSAR.

See Example configuration YAML files for example configuration files.

An in-depth description of RVT YAML configuration can be found in the Rhino VoLTE TAS Configuration and Management Guide.

Step 4 - Deploy the OVA

Run csar deploy --vnf mag --sdf <path to SDF>.

This will validate the SDF, and generate the heat template. After successful validation, this will upload the image, and deploy the number of MAG nodes specified in the SDF.

| |

Only one node type should be deployed at the same time. I.e. when deploying these MAG nodes, don’t deploy other node types at the same time in parallel. |

Backout procedure

To delete the deployed VMs, run csar delete --vnf mag --sdf <path to SDF>.

You must also delete the MDM state for each VM. To do this, you must first SSH into one of the MDM VMs. Get the instance IDs by running: mdmhelper --deployment-id <deployment ID> instance list. Then for each MAG VM, run the following command:

curl -X DELETE -k \

--cert /etc/certs-agent/upload/mdm-cert.crt \

--cacert /etc/certs-agent/upload/mdm-cas.crt \

--key /etc/certs-agent/upload/mdm-key.key \

https://127.0.0.1:4000/api/v1/deployments/<deployment ID>/instances/<instance ID>Verify that the deletion worked by running mdmhelper --deployment-id <deployment ID> instance list again. You may now log out of the MDM VM.

You must also delete state for this node type and version from the CDS prior to re-deploying the VMs. To delete the state, run rvtconfig delete-node-type --cassandra-contact-point <any TSN IP> --deployment-id <deployment ID>.

--site-id <site ID> --node-type mag

(--vm-version-source [this-vm | this-rvtconfig] | --vm-version <vm_version>)

Next Step

If you are upgrading a full set of VMs, go to Deploy MMT CDMA nodes on OpenStack, otherwise follow the verification instructions here: Verify the state of the nodes and processes

Deploy MMT CDMA nodes on OpenStack

Planning for the procedure

Background knowledge

This procedure assumes that:

-

you are installing into an existing OpenStack deployment

-

The OpenStack deployment must be set up with support for Heat templates.

-

-

you are using an OpenStack version from Icehouse through to Train inclusive

-

you are thoroughly familiar with working with OpenStack machines and know how to set up tenants, users, roles, client environment scripts, and so on.

(For more information, refer to the appropriate OpenStack installation guide for the version that you are using here.)

-

you have deployed a SIMPL VM, unpacked the CSAR, and prepared an SDF.

Method of procedure

| |

Refer to the SIMPL VM Documentation for details on the commands mentioned in the procedure. |

Step 1 - Check OpenStack quotas

The SIMPL VM creates one server group per VM, and one security group per interface on each VM. OpenStack sets limits on the number of server groups and security groups through quotas.

View the quota by running openstack quota show <project id> on OpenStack Controller node. This shows the maximum number of various resources.

You can view the existing server groups by running openstack server group list. Similarly, you can find the security groups by running openstack security group list

If the quota is too small to accommodate the new VMs that will be deployed, increase it by running

openstack quota set --<quota field to increase> <new quota value> <project ID>. For example:

openstack quota set --server-groups 100 125610b8bf424e61ad2aa5be27ad73bb

Step 2 - Validate MMT CDMA RVT configuration

Validate the configuration for the MMT CDMA nodes to ensure that each MMT CDMA node can properly self-configure.

To validate the configuration after creating the YAML files, run

rvtconfig validate -t mmt-cdma -i <yaml-config-file-directory>

on the SIMPL VM from the resources subdirectory of the MMT CDMA CSAR.

Step 3 - Upload MMT CDMA RVT configuration

Upload the configuration for the MMT CDMA nodes to the CDS. This will enable each MMT CDMA node to self-configure when they are deployed in the next step.

To upload configuration after creating the YAML files and validating them as described above, run

rvtconfig upload-config -c <tsn-mgmt-addresses> -t mmt-cdma -i <yaml-config-file-directory> (--vm-version-source this-rvtconfig | --vm-version <version>)

on the SIMPL VM from the resources subdirectory of the MMT CDMA CSAR.

See Example configuration YAML files for example configuration files.

An in-depth description of RVT YAML configuration can be found in the Rhino VoLTE TAS Configuration and Management Guide.

Step 4 - Deploy the OVA

Run csar deploy --vnf mmt-cdma --sdf <path to SDF>.

This will validate the SDF, and generate the heat template. After successful validation, this will upload the image, and deploy the number of MMT CDMA nodes specified in the SDF.

| |

Only one node type should be deployed at the same time. I.e. when deploying these MMT CDMA nodes, don’t deploy other node types at the same time in parallel. |

Backout procedure

To delete the deployed VMs, run csar delete --vnf mmt-cdma --sdf <path to SDF>.

You must also delete the MDM state for each VM. To do this, you must first SSH into one of the MDM VMs. Get the instance IDs by running: mdmhelper --deployment-id <deployment ID> instance list. Then for each MMT CDMA VM, run the following command:

curl -X DELETE -k \

--cert /etc/certs-agent/upload/mdm-cert.crt \

--cacert /etc/certs-agent/upload/mdm-cas.crt \

--key /etc/certs-agent/upload/mdm-key.key \

https://127.0.0.1:4000/api/v1/deployments/<deployment ID>/instances/<instance ID>Verify that the deletion worked by running mdmhelper --deployment-id <deployment ID> instance list again. You may now log out of the MDM VM.

You must also delete state for this node type and version from the CDS prior to re-deploying the VMs. To delete the state, run rvtconfig delete-node-type --cassandra-contact-point <any TSN IP> --deployment-id <deployment ID>.

--site-id <site ID> --node-type mmt-cdma

(--vm-version-source [this-vm | this-rvtconfig] | --vm-version <vm_version>)

Next Step

If you are upgrading a full set of VMs, go to Deploy SMO nodes on OpenStack, otherwise follow the verification instructions here: Verify the state of the nodes and processes

Deploy SMO nodes on OpenStack

Planning for the procedure

Background knowledge

This procedure assumes that:

-

you are installing into an existing OpenStack deployment

-

The OpenStack deployment must be set up with support for Heat templates.

-

-

you are using an OpenStack version from Icehouse through to Train inclusive

-

you are thoroughly familiar with working with OpenStack machines and know how to set up tenants, users, roles, client environment scripts, and so on.

(For more information, refer to the appropriate OpenStack installation guide for the version that you are using here.)

-

you have deployed a SIMPL VM, unpacked the CSAR, and prepared an SDF.

Method of procedure

| |

Refer to the SIMPL VM Documentation for details on the commands mentioned in the procedure. |

Step 1 - Check OpenStack quotas

The SIMPL VM creates one server group per VM, and one security group per interface on each VM. OpenStack sets limits on the number of server groups and security groups through quotas.

View the quota by running openstack quota show <project id> on OpenStack Controller node. This shows the maximum number of various resources.

You can view the existing server groups by running openstack server group list. Similarly, you can find the security groups by running openstack security group list

If the quota is too small to accommodate the new VMs that will be deployed, increase it by running

openstack quota set --<quota field to increase> <new quota value> <project ID>. For example:

openstack quota set --server-groups 100 125610b8bf424e61ad2aa5be27ad73bb

Step 2 - Validate SMO RVT configuration

Validate the configuration for the SMO nodes to ensure that each SMO node can properly self-configure.

To validate the configuration after creating the YAML files, run

rvtconfig validate -t smo -i <yaml-config-file-directory>

on the SIMPL VM from the resources subdirectory of the SMO CSAR.

Step 3 - Upload SMO RVT configuration

Upload the configuration for the SMO nodes to the CDS. This will enable each SMO node to self-configure when they are deployed in the next step.

To upload configuration after creating the YAML files and validating them as described above, run

rvtconfig upload-config -c <tsn-mgmt-addresses> -t smo -i <yaml-config-file-directory> (--vm-version-source this-rvtconfig | --vm-version <version>)

on the SIMPL VM from the resources subdirectory of the SMO CSAR.

See Example configuration YAML files for example configuration files.

An in-depth description of RVT YAML configuration can be found in the Rhino VoLTE TAS Configuration and Management Guide.

Step 4 - Deploy the OVA

Run csar deploy --vnf smo --sdf <path to SDF>.

This will validate the SDF, and generate the heat template. After successful validation, this will upload the image, and deploy the number of SMO nodes specified in the SDF.

| |

Only one node type should be deployed at the same time. I.e. when deploying these SMO nodes, don’t deploy other node types at the same time in parallel. |

Backout procedure

To delete the deployed VMs, run csar delete --vnf smo --sdf <path to SDF>.

You must also delete the MDM state for each VM. To do this, you must first SSH into one of the MDM VMs. Get the instance IDs by running: mdmhelper --deployment-id <deployment ID> instance list. Then for each SMO VM, run the following command:

curl -X DELETE -k \

--cert /etc/certs-agent/upload/mdm-cert.crt \

--cacert /etc/certs-agent/upload/mdm-cas.crt \

--key /etc/certs-agent/upload/mdm-key.key \

https://127.0.0.1:4000/api/v1/deployments/<deployment ID>/instances/<instance ID>Verify that the deletion worked by running mdmhelper --deployment-id <deployment ID> instance list again. You may now log out of the MDM VM.

You must also delete state for this node type and version from the CDS prior to re-deploying the VMs. To delete the state, run rvtconfig delete-node-type --cassandra-contact-point <any TSN IP> --deployment-id <deployment ID>.

--site-id <site ID> --node-type smo

(--vm-version-source [this-vm | this-rvtconfig] | --vm-version <vm_version>)

Next Step

Follow the verification instructions here: Verify the state of the nodes and processes

Automatic rolling upgrades and patches with SIMPL VM on OpenStack

This section provides information on Upgrades and CSAR EFIX patches.

Before running a rolling upgrade or patch, ensure that all node types in the deployment pass validation. See Verify the state of the nodes and processes for instructions on how to do this.

All uplevel CSARs or CSAR EFIX patches must be uploaded to SIMPL for all upgraded node types before installation. In addition, the uplevel SDF must contain the uplevel CSAR versions for all upgraded node types.

- Rolling upgrades with SIMPL VM

- Rolling upgrades using CSAR EFIX patch with SIMPL VM

- Setting up for a rolling upgrade using CSAR EFIX patch

- Rolling CSAR EFIX patch TSN nodes on OpenStack

- Rolling CSAR EFIX patch ShCM nodes on OpenStack

- Rolling CSAR EFIX patch MAG nodes on OpenStack

- Rolling CSAR EFIX patch MMT CDMA nodes on OpenStack

- Rolling CSAR EFIX patch SMO nodes on OpenStack

- Post rolling upgrade using CSAR EFIX patch steps

Rolling upgrades with SIMPL VM

To upgrade all node types, refer to the following pages in the order below.

Setting up for a rolling upgrade

Before running a rolling upgrade, some steps must be completed first.

Verify that Rhino has no duplicate OIDs

This can be done prior to the maintenance window. For each node type with Rhino, SSH into one of the VMs.

Run the following command:

last_seen=0; rhino-console listsnmpoidmappings | while read line;do array=($line); if [ "${array[0]}" == "$last_seen" ]; then

echo "Duplicate ${array[0]}"; fi; last_seen=${array[0]}; doneIf there are any duplicates, please contact your Metaswitch Customer Care representative.

Disable scheduled Rhino restarts

If you have configured scheduled Rhino restarts, then these should be disabled before running an upgrade. This can be done by commenting out the scheduled-rhino-restarts section in the VM pool YAML config files. An example is shown below.

virtual-machines:

- vm-id: vm01

rhino-node-id: 101

# scheduled-rhino-restarts:

# day-of-week: Saturday

# time-of-day: 03:00

- vm-id: vm02

rhino-node-id: 102

# scheduled-rhino-restarts:

# day-of-week: Saturday

# time-of-day: 04:00Then to update the VMs with the disabled scheduled restarts, use rvtconfig upload-config.

Verify that HTTPS certificates are valid

The HTTPS certificates on the VMs must be valid for more than 30 days, and must remain valid during the upgrade for the whole deployment. For example, your upgrade will fail if your certificate is valid for 32 days and it takes more than 1 day to upgrade all of the VMs for all node types.

Using your own certificates

If using your own generated certificates, check its expiry date using:

openssl x509 -in <certificate file> -enddate -nooutIf the certificates are expiring, you must first upload the new certificates using rvtconfig upload-config before upgrading.

Using VM generated certificates

If you did not provide certificates to the VMs, the VM will generate its own certificates which are valid for 5 years. So if the current VMs have been deployed less than 5 years ago then there is nothing further to do. If it has been over 5 years, then please contact your Metaswitch Customer Care representative.

Verify all VMs are healthy

All the VMs in the deployment need to be healthy. To check this, run the common health checks for the VMs by following: Verify the state of the nodes and processes. The per-node checks should also be run by following each page under: Per-node checks.

Collect diagnostics from all of the VMs

The diagnostics from all the VMs should be collected. To do this, follow instructions from RVT Diagnostics Gatherer. After generating the diagnostics, transfer it from the VMs to a local machine.

Disable TSN Housekeeping Tasks

Before upgrading TSN VMs, do the following on all downlevel TSN VMs:

sudo systemctl list-timersIf cassandra-repair-daily.timer is present, do the following:

sudo systemctl disable cassandra-repair-daily.timer

sudo systemctl stop cassandra-repair-daily.timerRun the following command to verify that the cassandra-repair-daily.timer is not present:

sudo systemctl list-timersDisable SNMP on SMO VMs if SNMPv3 is enabled

| |

Omitting this step on the SMO VMs when SNMPv3 is configured will result in Initconf failing to converge on the uplevel VMs. |

SNMP is only required to be disabled on the SMO VMs when:

-

Performing a rolling upgrade or rollback of the SMO; and

-

SNMPv3 is enabled (even if SGC notifications are disabled); and

-

the downlevel VM version is 4.0.0-23-1.0.0 or older; and

-

the uplevel VM version is 4.0.0-24-1.0.0 or newer.

The complete process for doing this is documented in Reconfiguring the SGC’s SNMP subsystem.

Download CDRs from all VMs

If your deployment is configured to generate CDRs on the Rhino VMs, these CDRs are stored on the local disk of the VMs and will be lost when the VMs are upgraded.

Therefore, if you need to keep a record of all CDRs generated the platform, you must download any CDRs not yet downloaded before the upgrade. Any CDRs not downloaded from a VM before that VM is upgraded will be permanently lost.

Upload the uplevel CSARs to the SIMPL VM

If not already done, transfer the uplevel CSARs onto the SIMPL VM. For each CSAR, run csar unpack <path to CSAR>, where <path to CSAR> is the full path to the transferred uplevel CSAR.

This will unpack the uplevel CSARs to ~/.local/share/csar/.

Upload the uplevel SDF to SIMPL VM

If the CSAR uplevel SDF was not created on the SIMPL VM, transfer the previously written CSAR uplevel SDF onto the SIMPL VM.

| |

Ensure that each version in the vnfcs section of the uplevel SDF matches each node type’s CSAR version. |

Upload uplevel RVT configuration

Upload the uplevel configuration for all of the node types to the CDS. This is required for the rolling upgrade to complete.

| |

As configuration is stored against a specific version, you need to re-upload, the uplevel configuration even if it is identical to the downlevel configuration. |

When performing a rolling upgrade some elements of the uplevel configuration must remain identical to those in the downlevel configuration. These elements (and the remedy if that configuration change was made and the cluster upgrade process started) are described in the following table:

Node Type |

Disallowed Configuration Change |

Remedy |

All |

The |