This guide describes how to deploy, manage and maintain the OCSS7 SGC component of Metaswitch’s SS7 Stack.

The SGC works in concert with CGIN to deliver SS7/SIGTRAN capability to Rhino. For information on configuring CGIN to use OCSS7, and other technical details and considerations about SGC to CGIN communication, please see Using the OCSS7 TCAP stack in the CGIN manual.

This document assumes a working knowledge of SS7 and SIGTRAN, as well as some familiarity with the administration of Linux systems and Java applications.

Topics

This document includes the following topics:

-

An overview of OCSS7, including features and architecture

-

A step-by-step setup guide from packages through a test dialog, ignoring production-grade details

-

SGC installation in detail, including production-grade features and tuning

-

Configuration options for startup and runtime operations

-

Monitoring OCSS7’s operational state

-

Driving OCSS7 from the command line

-

Upgrading the SGC and the CGIN TCAP stack

-

Appendix describing the configuration properties that may be set in the

-

Gathering information for support requests

-

The SGC versions that support online upgrade

-

Glossary of acronyms

Other documentation for OCSS7 can be found on the OCSS7 product page.

About OCSS7

What is OCSS7?

OCSS7 provides SS7 network connectivity to Metaswitch products, including the Rhino CGIN RA and the Metaswitch Scenario Simulator. It does this by implementing a set of SS7 and related SIGTRAN protocols. The current version supports M3UA, ITU-T SCCP, ANSI SCCP, ITU-T TCAP and ANSI TCAP, and can act as an M3UA Application Server connected either to an M3UA Signalling Gateway or to another Application Server as an IP Server Process.

Main features

OCSS7 offers the following main features:

-

Provides IN network connectivity to Metaswitch’s CGIN and Scenario Simulator

-

SIGTRAN M3UA communication with Signalling Gateways and/or IPSP peers

-

Cluster deployment — SGC may be deployed in a cluster where multiple SGC instances cooperate and represent a single Signalling Point Code.

Supported specifications

| Specification | Comment |
|---|---|
| RFC 4666 | M3UA (ASP and IPSP functionality) |
| ITU-T Q.711-Q.714 | ITU-T SCCP (excluding LUDT) |
| ATIS-1000112 (previously ANSI T1.112) | ANSI SCCP (excluding LUDT, INS and ISNI) |
| ITU-T Q.771-Q.774 | TCAP (constrained to features supported by the TCAP SPI interface provided by the CGIN RA) |
| ANSI T1.114-2000 and T1.114-1988 | ANSI TCAP (constrained to features supported by the TCAP SPI interface provided by the CGIN RA) |

For more details on the supported specifications, see the OCSS7 Compliance Matrix.

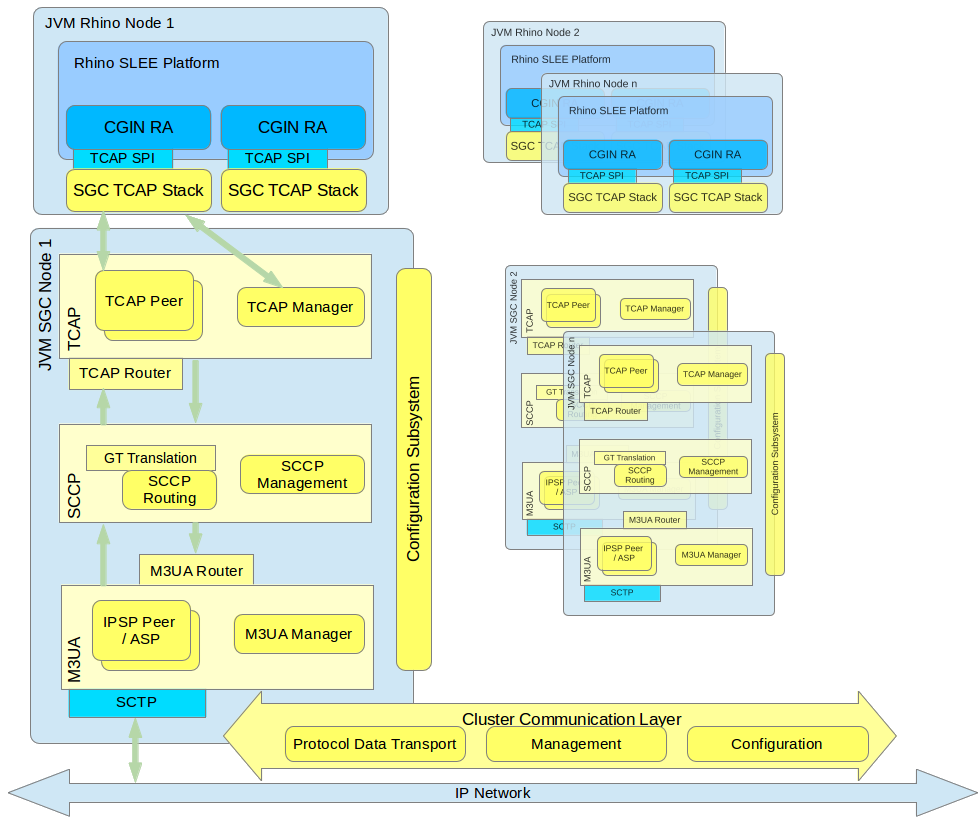

Architecture

OCSS7 is composed of two main user-visible components:

-

the Signalling Gateway Client (SGC) TCAP Stack (frontend), automatically deployed as part of the Rhino CGIN RA, and

-

the SGC (backend), deployed as one or more separate processes.

After initialization, the TCAP Stack (frontend) registers with a preconfigured SSN at the SGC instance, and allows the CGIN RA to accept and initiate TCAP dialogs. Multiple TCAP Stack (frontend) instances representing the same SSN can be connected to the SGC instance (backend); in this case all incoming traffic will be load balanced among them.
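To picture how incoming traffic might be spread across several TCAP stacks registered for the same SSN, the Python sketch below hands out messages round-robin. It is an illustration only. This guide does not say which balancing strategy the SGC uses, so round-robin, the `SsnLoadBalancer` class, and the stack identifiers are all hypothetical. The sketch also ignores any per-dialog state.

```python
from collections import defaultdict
from itertools import cycle


class SsnLoadBalancer:
    """Hypothetical model of choosing a registered TCAP stack for an incoming message.

    Assumption: round-robin per SSN. The real SGC strategy is not described here,
    and per-dialog state is ignored.
    """

    def __init__(self):
        self._stacks = defaultdict(list)  # SSN -> stack identifiers, in registration order
        self._rotation = {}               # SSN -> endless round-robin iterator

    def register(self, ssn, stack_id):
        self._stacks[ssn].append(stack_id)
        # Restart the rotation over a copy so the newly registered stack is included.
        self._rotation[ssn] = cycle(list(self._stacks[ssn]))

    def pick(self, ssn):
        if ssn not in self._rotation:
            raise LookupError(f"no TCAP stack registered for SSN {ssn}")
        return next(self._rotation[ssn])


if __name__ == "__main__":
    balancer = SsnLoadBalancer()
    balancer.register(101, "cgin-ra-node-1")  # hypothetical stack identifiers
    balancer.register(101, "cgin-ra-node-2")
    print([balancer.pick(101) for _ in range(4)])
```

With two stacks registered for SSN 101, successive picks alternate between them.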

The SGC provides IN connectivity for exactly one Point Code. Typically, the SGC is deployed as a cluster of at least two instances to meet standard carrier-grade, high-availability requirements. This manual primarily concerns itself with the OCSS7 SGC, as the TCAP stack component within CGIN RA is managed as part of CGIN.

Below is a high-level diagram followed by descriptions of the main components of the OCSS7 stack. The OCSS7 stack components are yellow. Components that are provided by the Rhino platform, CGIN, or the operating system environment are blue.

SGC Subsystems overview

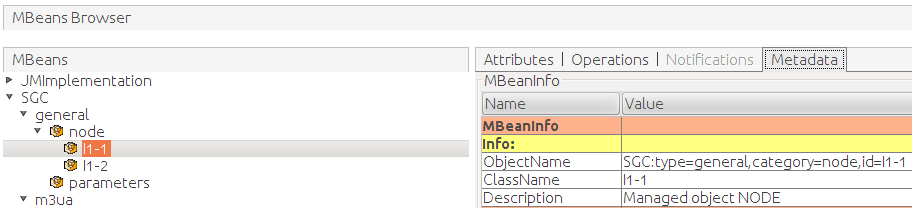

All SGC nodes in the cluster represent a single logical system with a single PC (SS7 Point Code). Each SGC cluster node instantiates all stack layers and subsystems, which coordinate with each other to provide a single logical view of the cluster.

The major SS7 SGC subsystems are:

-

Configuration Subsystem — plays a central role in managing the life cycle of all processing objects in the SGC Stack. The Configuration Subsystem is distributed, so an object on any cluster node may be managed from any other cluster node. Configuration is exposed through a set of JMX MBeans, which allow manipulation of the underlying configuration objects.

-

TCAP Layer — manages routing and load balancing of TCAP messages to appropriate Rhino CGIN RAs (through registered TCAP Stacks)

-

SCCP Layer — responsible for routing SCCP messages, GT translation, and managing internal and external subsystem availability states

-

M3UA Layer — establishes and manages the SG, IPSP connectivity, and AS state.

Internally, all layers use Cluster Communication subsystems to distribute management state and configuration data, to provide a single logical view of the entire cluster. Irrespective of which cluster node receives or originates an SS7 message, that message is transported to the appropriate cluster node for processing (by the Rhino CGIN RA) or for further sending using one of the nodes' established SCTP associations.

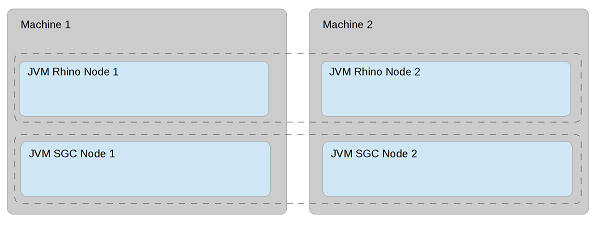

SGC Deployment model

The SGC cluster's physical deployment model is independent of the Rhino cluster deployment. SGC nodes can either be deployed on hosts separate from the Rhino nodes or share the same machines.

See also: Installing the SGC

Management tools

The SGC installation package provides a CLI management console that exposes a set of CRUD commands used to configure the SGC cluster and observe its runtime state. SGC cluster configuration and runtime state can be managed from any node, so there is no need to connect separately to each node. Because the SGC exposes a standard Java JMX management interface, users are not restricted to the provided tools and are free to create custom management clients to serve their particular needs.

The SGC also provides an SNMP agent, exposing SGC-gathered statistics and Alarm notifications (Traps) through the SNMP protocol.

The TCAP stack component installed as part of the Rhino CGIN RA is managed using the usual Rhino and CGIN RA management facilities.

Quick Start

This section provides a step-by-step walk-through of basic OCSS7 setup, from unpacking the software packages to running test traffic. The end result is a functional OCSS7 network suitable for basic testing. For production installations and installation reference material, see Installing the SGC.

Introduction

In this walk-through we will be:

-

setting up two OCSS7 clusters, each with a single SGC node, and

-

running one of the example IN scenarios through the network using the Metaswitch Scenario Simulator.

To complete this walk-through you will need:

-

the OCSS7 package, and

-

the IN Scenario Pack 3.1 or higher for the Scenario Simulator.

These instructions should be followed on a test system which:

-

runs Linux,

-

has SCTP support, and

-

is unlikely to be hampered by local firewall or other security restrictions.

Finally, you will need to make sure that the JAVA_HOME environment variable is set to the location of your Oracle Java JDK installation.

The Plan

We will set up two clusters, each with a single node, both running on our single test system. At the M3UA level:

-

cluster 1 will use Point Code 1

-

cluster 2 will use Point Code 2

-

there will be one Application Server (AS) with routing context 2

-

there will be one SCTP association between the two nodes

We will test the network using two Metaswitch Scenario Simulators:

-

Simulator 1 will:

-

connect to cluster 1

-

use SSN 101

-

use GT 1234


-

Simulator 2 will:

-

connect to cluster 2

-

use SSN 102

-

use GT 4321


Routing between the two simulators will be via Global Title translation.

Once we think that the network is operational we will test it by running one of the example scenarios shipped with the IN Scenario Pack for the Scenario Simulator.

SGC installation

Naming Conventions

The cluster naming convention in this example uses PC followed by the point code. For example, a cluster whose point code is 1 will have a name of PC1.

The node naming convention is the cluster name, followed by a hyphen and the node number within that SGC cluster. For example, PC1-1 or PC2-1. During this walk-through the number after the hyphen will always be 1, but the convention leaves room to expand if you wish to add more nodes after completing the walk-through.
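The naming convention is simple enough to capture in two small functions. The Python sketch below is a hypothetical helper, not something the SGC requires. It encodes the PC<point code> and PC<point code>-<node number> patterns described above and assumes that node numbers start at 1.

```python
def cluster_name(point_code: int) -> str:
    """Cluster name: 'PC' followed by the cluster's point code."""
    return f"PC{point_code}"


def node_name(point_code: int, node_number: int) -> str:
    """Node name: cluster name, a hyphen, then the node number within the cluster."""
    if node_number < 1:
        # Assumption of this sketch: node numbers start at 1.
        raise ValueError("node numbers start at 1 in this sketch")
    return f"{cluster_name(point_code)}-{node_number}"


if __name__ == "__main__":
    # The two nodes used in this walk-through, plus a possible second node for PC1.
    print(node_name(1, 1), node_name(2, 1), node_name(1, 2))
```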

Installation

We will now install two SGC clusters, each containing one node.

1. Create the root installation directory for the PC1 cluster and PC1-1 node:

mkdir -p PC1/PC1-1

2. Unpack the SGC archive file in the PC1/PC1-1 directory:

unzip ocss7-package-VERSION.zip

(replacing VERSION with the version of your OCSS7 package). This creates the distribution directory, ocss7-VERSION.

Example:

$ unzip ocss7-package-4.1.1.zip
Archive: ocss7-package-4.1.1.zip
creating: ocss7-4.1.1/
inflating: ocss7-4.1.1/CHANGELOG
inflating: ocss7-4.1.1/README
creating: ocss7-4.1.1/config/
creating: ocss7-4.1.1/doc/
creating: ocss7-4.1.1/license/
creating: ocss7-4.1.1/logs/
creating: ocss7-4.1.1/var/
inflating: ocss7-4.1.1/config/SGC.properties
inflating: ocss7-4.1.1/config/SGC_bundle.properties.sample
inflating: ocss7-4.1.1/config/log4j2.test.xml
inflating: ocss7-4.1.1/config/log4j2.xml
inflating: ocss7-4.1.1/config/sgcenv
inflating: ocss7-4.1.1/lib/LICENSE.apache-log4j-extras.txt
inflating: ocss7-4.1.1/lib/LICENSE.commons-cli.txt
inflating: ocss7-4.1.1/lib/LICENSE.commons-lang.txt
inflating: ocss7-4.1.1/lib/LICENSE.guava.txt
inflating: ocss7-4.1.1/lib/LICENSE.hazelcast.txt
inflating: ocss7-4.1.1/lib/LICENSE.istack-commons-runtime.html
inflating: ocss7-4.1.1/lib/LICENSE.jakarta.activation.html
inflating: ocss7-4.1.1/lib/LICENSE.jakarta.xml.bind-api.html
inflating: ocss7-4.1.1/lib/LICENSE.jaxb.html
inflating: ocss7-4.1.1/lib/LICENSE.jline3.txt
inflating: ocss7-4.1.1/lib/LICENSE.jsr305.txt
inflating: ocss7-4.1.1/lib/LICENSE.log4j2.txt
inflating: ocss7-4.1.1/lib/LICENSE.netty.txt
inflating: ocss7-4.1.1/lib/LICENSE.protobuf.txt
inflating: ocss7-4.1.1/lib/LICENSE.slf4j.txt
inflating: ocss7-4.1.1/lib/LICENSE.snmp4j.txt
inflating: ocss7-4.1.1/lib/LICENSE.velocity.txt
creating: ocss7-4.1.1/bin/
inflating: ocss7-4.1.1/bin/generate-report.sh
inflating: ocss7-4.1.1/bin/sgc
inflating: ocss7-4.1.1/bin/sgcd
inflating: ocss7-4.1.1/bin/sgckeygen
inflating: ocss7-4.1.1/sgc.jar
creating: ocss7-4.1.1/lib/
inflating: ocss7-4.1.1/lib/failureaccess-1.0.1.jar
inflating: ocss7-4.1.1/lib/guava-30.1.1-jre.jar
inflating: ocss7-4.1.1/lib/hazelcast-3.7.jar
inflating: ocss7-4.1.1/lib/istack-commons-runtime-4.0.1.jar
inflating: ocss7-4.1.1/lib/jakarta.activation-2.0.1.jar
inflating: ocss7-4.1.1/lib/jakarta.xml.bind-api-3.0.1.jar
inflating: ocss7-4.1.1/lib/jaxb-core-3.0.2.jar
inflating: ocss7-4.1.1/lib/jaxb-runtime-3.0.2.jar
inflating: ocss7-4.1.1/lib/jsr305-3.0.2.jar
inflating: ocss7-4.1.1/lib/log4j-api-2.17.1.jar
inflating: ocss7-4.1.1/lib/log4j-core-2.17.1.jar
inflating: ocss7-4.1.1/lib/log4j-slf4j-impl-2.17.1.jar
inflating: ocss7-4.1.1/lib/netty-buffer-4.1.70.Final.jar
inflating: ocss7-4.1.1/lib/netty-codec-4.1.70.Final.jar
inflating: ocss7-4.1.1/lib/netty-codec-http-4.1.70.Final.jar
inflating: ocss7-4.1.1/lib/netty-common-4.1.70.Final.jar
inflating: ocss7-4.1.1/lib/netty-handler-4.1.70.Final.jar
inflating: ocss7-4.1.1/lib/netty-resolver-4.1.70.Final.jar
inflating: ocss7-4.1.1/lib/netty-transport-4.1.70.Final.jar
inflating: ocss7-4.1.1/lib/protobuf-java-3.17.3.jar
inflating: ocss7-4.1.1/lib/protobuf-library-3.1.4.jar
inflating: ocss7-4.1.1/lib/slf4j-api-1.7.30.jar
inflating: ocss7-4.1.1/lib/snmp4j-3.5.1.jar
inflating: ocss7-4.1.1/lib/snmp4j-agent-3.5.0.jar
creating: ocss7-4.1.1/lib/upgrade-packs/
inflating: ocss7-4.1.1/lib/upgrade-packs/ocss7-upgrade-pack-4.1.1.jar
creating: ocss7-4.1.1/cli/
inflating: ocss7-4.1.1/cli/sgc-cli.sh
creating: ocss7-4.1.1/cli/conf/
creating: ocss7-4.1.1/cli/lib/
inflating: ocss7-4.1.1/cli/conf/cli.properties
inflating: ocss7-4.1.1/cli/conf/log4j2.xml
inflating: ocss7-4.1.1/cli/lib/commons-cli-1.4.jar
inflating: ocss7-4.1.1/cli/lib/commons-lang3-3.12.0.jar
inflating: ocss7-4.1.1/cli/lib/jline-3.21.0.jar
inflating: ocss7-4.1.1/cli/lib/log4j-api-2.17.1.jar
inflating: ocss7-4.1.1/cli/lib/log4j-core-2.17.1.jar
inflating: ocss7-4.1.1/cli/lib/log4j-slf4j-impl-2.17.1.jar
inflating: ocss7-4.1.1/cli/lib/ocss7-cli.jar
inflating: ocss7-4.1.1/cli/lib/ocss7-remote-4.1.1.jar
inflating: ocss7-4.1.1/cli/lib/ocss7-version-4.1.1.jar
inflating: ocss7-4.1.1/cli/lib/slf4j-api-1.7.30.jar
inflating: ocss7-4.1.1/cli/lib/velocity-engine-core-2.3.jar
inflating: ocss7-4.1.1/cli/sgc-cli.bat
creating: ocss7-4.1.1/doc/mibs/
inflating: ocss7-4.1.1/doc/mibs/COMPUTARIS-MIB.txt
inflating: ocss7-4.1.1/doc/mibs/CTS-SGC-MIB.txt
inflating: ocss7-4.1.1/doc/mibs/OPENCLOUD-OCSS7-MIB.txt
inflating: ocss7-4.1.1/config/hazelcast.xml.sample

3. Create the root installation directory for the PC2 cluster and PC2-1 node:

mkdir -p PC2/PC2-1

4. Unpack the SGC archive file in the PC2/PC2-1 directory:

unzip ocss7-package-VERSION.zip

(replacing VERSION with the version of your OCSS7 package). This creates the distribution directory, ocss7-VERSION.

We now have two SGC nodes with no configuration. The next step is to set up their cluster configuration.

SGC cluster membership configuration

We will now configure cluster membership for our two SGC nodes (one per cluster):

-

the node name is specified by the ss7.instance parameter, and

-

the cluster name is specified by the hazelcast.group parameter.

Later on, during SS7 configuration, the ss7.instance value is used to specify which node in the cluster certain configuration elements (such as SCTP endpoints) are associated with.

1. Give node PC1-1 its identity

Edit the node's configuration file so that it contains the following settings:

# SGC instance node name
ss7.instance=PC1-1
# Path to the Hazelcast config file
hazelcast.config.file=config/hazelcast.xml
# Default Hazelcast group name
hazelcast.group=PC1
#path where sgc data file should be stored
sgc.data.dir=var

2. Give node PC2-1 its identity

Edit the node's configuration file so that it contains the following settings:

# SGC instance node name
ss7.instance=PC2-1
# Path to the Hazelcast config file
hazelcast.config.file=config/hazelcast.xml
# Default Hazelcast group name
hazelcast.group=PC2
#path where sgc data file should be stored
sgc.data.dir=var

Note: For clusters with multiple nodes the
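If you are preparing several nodes, you can generate the identity settings above instead of typing them by hand. The hypothetical Python sketch below renders the four properties shown in the steps for a given point code and node number. It also includes a deliberately minimal key=value reader to check the result. That reader does not implement the full Java properties syntax (no escapes or line continuations), and writing the text into the node's configuration file is left to you.

```python
def node_properties(point_code: int, node_number: int = 1) -> str:
    """Render the identity settings from the steps above for one node.

    hazelcast.config.file and sgc.data.dir are copied unchanged from the example.
    """
    cluster = f"PC{point_code}"
    node = f"{cluster}-{node_number}"
    lines = [
        "# SGC instance node name",
        f"ss7.instance={node}",
        "# Path to the Hazelcast config file",
        "hazelcast.config.file=config/hazelcast.xml",
        "# Default Hazelcast group name",
        f"hazelcast.group={cluster}",
        "#path where sgc data file should be stored",
        "sgc.data.dir=var",
    ]
    return "\n".join(lines) + "\n"


def parse_properties(text: str) -> dict:
    """Minimal key=value reader: skips blank and comment lines; no escapes or continuations."""
    props = {}
    for raw in text.splitlines():
        line = raw.strip()
        if not line or line.startswith(("#", "!")):
            continue
        key, _, value = line.partition("=")
        props[key.strip()] = value.strip()
    return props


if __name__ == "__main__":
    text = node_properties(2)
    print(text, end="")
    print(parse_properties(text)["hazelcast.group"])
```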

Starting the clusters

We will now start the two SGC clusters.

1. Check JAVA_HOME

Make sure your JAVA_HOME environment variable is set to the location of your Java JDK installation:

$ echo $JAVA_HOME
/opt/jdk-11
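You can also script the JAVA_HOME check before starting each node. This Python sketch is a convenience of our own, not part of OCSS7. It only confirms that JAVA_HOME is set and contains an executable bin/java, and it does not check the Java version.

```python
import os
from pathlib import Path
from typing import Mapping, Optional


def java_home_problem(env: Optional[Mapping[str, str]] = None) -> Optional[str]:
    """Describe what is wrong with JAVA_HOME, or return None if it looks usable.

    'Usable' only means JAVA_HOME is set and contains an executable bin/java;
    the Java version is not checked.
    """
    env = os.environ if env is None else env
    value = env.get("JAVA_HOME")
    if not value:
        return "JAVA_HOME is not set"
    java = Path(value) / "bin" / "java"
    if not java.is_file():
        return f"{java} is missing"
    if not os.access(java, os.X_OK):
        return f"{java} is not executable"
    return None


if __name__ == "__main__":
    problem = java_home_problem()
    print(problem or f"JAVA_HOME looks usable: {os.environ['JAVA_HOME']}")
```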

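2. Change the management port for node PC1-1

Edit the node's configuration file to set the management (JMX) port for node PC1-1 to 10111, the value used in the rest of this walk-through.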

3. Start node PC1-1

./PC1/PC1-1/ocss7-4.1.1/bin/sgc start

If all is well, you should see:

SGC starting - daemonizing ...
SGC started successfully

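4. Change the management port for node PC2-1

Edit the node's configuration file to set the management (JMX) port for node PC2-1 to 10121, the value used in the rest of this walk-through.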

5. Start node PC2-1

./PC2/PC2-1/ocss7-4.1.1/bin/sgc start

If all is well, you should see:

SGC starting - daemonizing ...
SGC started successfully

If the SGC start command reported any errors, double-check your JAVA_HOME environment variable and make sure that nothing else has already bound the management ports 10111 and 10121. If these ports are already in use on your system, you can simply change them to something else and make a note of the values for later use.
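To check the management ports before starting the nodes, you can try to bind them. This Python sketch assumes the management (JMX) ports are TCP ports on 127.0.0.1, which matches the JMX_HOST shown in the console output for this walk-through. A "free" result is only a snapshot, because another process may claim the port afterwards.

```python
import socket


def port_is_free(port: int, host: str = "127.0.0.1") -> bool:
    """Return True if a TCP socket could be bound to host:port right now.

    The test socket is closed immediately, so the answer can go stale.
    """
    with socket.socket(socket.AF_INET, socket.SOCK_STREAM) as sock:
        try:
            sock.bind((host, port))
        except OSError:
            return False
    return True


if __name__ == "__main__":
    # Management ports used by PC1-1 and PC2-1 in this walk-through.
    for port in (10111, 10121):
        print(port, "free" if port_is_free(port) else "IN USE")
```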

Connect the management console

We now have two running OCSS7 clusters with blank configuration. The configuration we have done so far was done on a per-node basis using configuration files, but this does no more than give a node the minimal configuration it needs to boot and become a cluster member. The rest of our SGC configuration will now be done using the Command-Line Management Console. Configuration done in this manner becomes cluster-wide configuration which is automatically propagated to and saved by every other cluster node, although for our single-node clusters that detail will not be particularly relevant.

We recommend starting one management console per node for this walk-through. However, if your system is low on RAM, you may wish to start and stop the consoles as required.

1. Start the management console for PC1-1

./PC1/PC1-1/ocss7-4.1.1/cli/sgc-cli.sh

Example:

$ ./PC1/PC1-1/ocss7-4.1.1/cli/sgc-cli.sh
Preparing to start SGC CLI ...
Checking environment variables
[JAVA_HOME]=[/opt/jdk-11]
[CLI_HOME]=[/home/ocss7/quick-start/PC1/PC1-1/ocss7-4.1.1/cli]
Environment is OK!
Determining SGC home and JMX configuration
[SGC_HOME]=/home/ocss7/quick-start/PC1/PC1-1/ocss7-4.1.1
[JMX_HOST]=127.0.0.1
[JMX_PORT]=10111
Done
+---------------------------Environment--------------------------------+
CLI_HOME: /home/ocss7/quick-start/PC1/PC1-1/ocss7-4.1.1/cli
JAVA: /opt/jdk-11
JAVA_OPTS: -Dlog4j.configuration=file:/home/ocss7/quick-start/PC1/PC1-1/ocss7-4.1.1/cli/conf/log4j.xml -Dsgc.home=/home/ocss7/quick-start/PC1/PC1-1/ocss7-4.1.1/cli
+----------------------------------------------------------------------+
127.0.0.1:10111 PC1-1>

Here we can see the management console’s prompt, which identifies the node to which it is connected by host and port.

2. Start the management console for PC2-1

./PC2/PC2-1/ocss7-4.1.1/cli/sgc-cli.sh

Example:

$ ./PC2/PC2-1/ocss7-4.1.1/cli/sgc-cli.sh
Preparing to start SGC CLI ...
Checking environment variables
[JAVA_HOME]=[/opt/jdk-11/]
[CLI_HOME]=[/home/ocss7/quick-start/PC2/PC2-1/ocss7-4.1.1/cli]
Environment is OK!
Determining SGC home and JMX configuration
[SGC_HOME]=/home/ocss7/quick-start/PC2/PC2-1/ocss7-4.1.1
[JMX_HOST]=127.0.0.1
[JMX_PORT]=10121
Done
+---------------------------Environment--------------------------------+
CLI_HOME: /home/ocss7/quick-start/PC2/PC2-1/ocss7-4.1.1/cli
JAVA: /opt/jdk-11/
JAVA_OPTS: -Dlog4j.configuration=file:/home/ocss7/quick-start/PC2/PC2-1/ocss7-4.1.1/cli/conf/log4j.xml -Dsgc.home=/home/ocss7/quick-start/PC2/PC2-1/ocss7-4.1.1/cli
+----------------------------------------------------------------------+
127.0.0.1:10121 PC2-1>

Note: The management console supports tab completion and suggestions. If you press Tab while in the console, it completes the command, parameter, or value as far as it can. If it cannot complete the command, parameter, or value entirely because there are multiple possible completions, it displays the available choices.

Note: You can exit the management console either by pressing Ctrl-D or by entering the quit command.

General configuration

General configuration covers the settings that are fundamental to the cluster and the nodes within it. For our purposes, this means:

-

setting the local Point Codes for the clusters,

-

setting the basic communication attributes of each node.

The basic communication attributes of each node are used to control:

-

payload message transfer between SGCs within the cluster; and

-

communication with client TCAP stacks running in Rhino or the Scenario Simulator.

Note: The distinction between clusters and nodes is about to become apparent. Each cluster has exactly one local Point Code for which it provides services, and this is set once for the entire cluster. In contrast, each node must be defined and given its own basic communication configuration.

1a. Set the Point Code for PC1-1’s cluster to 1

Within the management console for PC1-1, run:

modify-parameters: sp=1

Example:

127.0.0.1:10111 PC1-1> modify-parameters: sp=1
OK parameters updated.

1b. Set the Point Code for PC2-1’s cluster to 2

Within the management console for PC2-1, run:

modify-parameters: sp=2

Example:

127.0.0.1:10121 PC2-1> modify-parameters: sp=2
OK parameters updated.

2a. Configure node PC1-1’s basic communication attributes

Within the management console for PC1-1, run:

create-node: oname=PC1-1, switch-local-address=127.0.0.1, switch-port=11011, stack-data-port=12011, stack-http-port=13011, enabled=true

Example:

127.0.0.1:10111 PC1-1> create-node: oname=PC1-1, switch-local-address=127.0.0.1, switch-port=11011, stack-data-port=12011, stack-http-port=13011, enabled=true
OK node created.

This command configures network communication for:

2b. Configure node PC2-1’s basic communication attributes

Within the management console for PC2-1, run:

create-node: oname=PC2-1, switch-local-address=127.0.0.1, switch-port=11021, stack-data-port=12021, stack-http-port=13021, enabled=true

Example:

127.0.0.1:10121 PC2-1> create-node: oname=PC2-1, switch-local-address=127.0.0.1, switch-port=11021, stack-data-port=12021, stack-http-port=13021, enabled=true
OK node created.

Of the attributes set above, only switch-local-address and stack-data-port are needed for later configuration. We will use them in the Scenario Simulator configuration section.
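Every SGC CLI command in this walk-through has the form `command: key=value, key=value, ...`. The hypothetical Python helper below formats commands in that form, which can be handy when you generate configuration for many nodes. It only produces text to paste into the management console and does not talk to the SGC. Because Python keyword arguments cannot contain hyphens, parameter names are written with underscores and converted to the hyphenated CLI names.

```python
def sgc_command(command: str, /, **params) -> str:
    """Format an SGC CLI command as 'command: key=value, key=value'.

    Underscores in parameter names become hyphens (switch_port -> switch-port),
    and booleans are written as true/false.
    """
    def fmt(value):
        if isinstance(value, bool):
            return "true" if value else "false"
        return str(value)

    args = ", ".join(f"{key.replace('_', '-')}={fmt(value)}" for key, value in params.items())
    return f"{command}: {args}"


if __name__ == "__main__":
    # Reproduces the create-node command from step 2a.
    print(sgc_command("create-node", oname="PC1-1", switch_local_address="127.0.0.1",
                      switch_port=11011, stack_data_port=12011, stack_http_port=13011,
                      enabled=True))
```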

Note: The create-node command is presented above as a way to configure each node's basic communication attributes. It also creates a node configuration object, which can be enabled or disabled and whose current state can be viewed with the display-node command. It is presented this way because some per-node configuration must always be provided. If that were not necessary, the SGC could simply detect and add cluster nodes automatically as they came online.

M3UA configuration

We will now begin configuring the M3UA layer of our network. There are a number of ways this can be done, but for the purposes of this walk-through we will use:

-

a single Application Server (AS) between the two instances,

-

the cluster for Point Code 1 as a client (in IPSP mode),

-

the cluster for Point Code 2 as a server (in IPSP mode), and

-

one SCTP association between the two nodes.

At a high level the procedure we’re about to follow will:

-

define the Application Server (AS) on each cluster,

-

define routes to our destination Point Codes through the defined AS,

-

define the SCTP connection on each node, and

-

associate the SCTP connection with the Application Server.

All the steps below are in two parts. The part "a" commands must be run in the management console connected to node PC1-1, and the part "b" commands must be run in the management console connected to node PC2-1. If this becomes confusing, check the examples, which identify the correct management console by the port number in the prompt.

Note: Those familiar with M3UA will note that single exchange is used. The SGC does not support double exchange.

1a. Define the Application Server for PC1-1

Create the AS with:

create-as: oname=PC2, traffic-maintenance-role=ACTIVE, rc=2, enabled=true

Example:

127.0.0.1:10111 PC1-1> create-as: oname=PC2, traffic-maintenance-role=ACTIVE, rc=2, enabled=true
OK as created.

1b. Define the Application Server for PC2-1

On PC2-1, note that traffic-maintenance-role is PASSIVE:

create-as: oname=PC2, traffic-maintenance-role=PASSIVE, rc=2, enabled=true

Example:

127.0.0.1:10121 PC2-1> create-as: oname=PC2, traffic-maintenance-role=PASSIVE, rc=2, enabled=true
OK as created.

2a. Define the local SCTP association’s endpoint for PC1-1

create-local-endpoint: oname=PC1-1-PC2-1, node=PC1-1, port=21121

Example:

127.0.0.1:10111 PC1-1> create-local-endpoint: oname=PC1-1-PC2-1, node=PC1-1, port=21121
OK local-endpoint created.

This defines a local endpoint which will be bound to SCTP port 21121.

2b. Define the local SCTP association’s endpoint for PC2-1

create-local-endpoint: oname=PC2-1, node=PC2-1, port=22100

This defines a local endpoint which will be bound to SCTP port 22100.

Example:

127.0.0.1:10121 PC2-1> create-local-endpoint: oname=PC2-1, node=PC2-1, port=22100
OK local-endpoint created.

3a. Define the local SCTP endpoint IP addresses for PC1-1

We will now define the IP address to be used by our SCTP association:

create-local-endpoint-ip: oname=PC1-1-PC2-1, ip=127.0.0.1, local-endpoint-name=PC1-1-PC2-1

Example:

127.0.0.1:10111 PC1-1> create-local-endpoint-ip: oname=PC1-1-PC2-1, ip=127.0.0.1, local-endpoint-name=PC1-1-PC2-1
OK local-endpoint-ip created.

As you can see above, a

3b. Define the local SCTP endpoint IP addresses for PC2-1

Similar to 3a, above:

create-local-endpoint-ip: oname=PC2-1, ip=127.0.0.1, local-endpoint-name=PC2-1

Example:

127.0.0.1:10121 PC2-1> create-local-endpoint-ip: oname=PC2-1, ip=127.0.0.1, local-endpoint-name=PC2-1
OK local-endpoint-ip created.

4a. Enable the local endpoint for PC1-1

The local endpoint was created in its default disabled state, so we must now enable it:

enable-local-endpoint: oname=PC1-1-PC2-1

Example:

127.0.0.1:10111 PC1-1> enable-local-endpoint: oname=PC1-1-PC2-1
OK local-endpoint enabled.

4b. Enable the local endpoint for PC2-1

enable-local-endpoint: oname=PC2-1

Example:

127.0.0.1:10121 PC2-1> enable-local-endpoint: oname=PC2-1
OK local-endpoint enabled.

5a. Define the client connection for PC1-1 to PC2-1

We will now define the SCTP association used by PC1-1, as well as some M3UA settings for the connection:

create-connection: oname=PC1-1-PC2-1, port=22100, local-endpoint-name=PC1-1-PC2-1, conn-type=CLIENT, state-maintenance-role=ACTIVE, is-ipsp=true, enabled=true

Example:

127.0.0.1:10111 PC1-1> create-connection: oname=PC1-1-PC2-1, port=22100, local-endpoint-name=PC1-1-PC2-1, conn-type=CLIENT, state-maintenance-role=ACTIVE, is-ipsp=true, enabled=true
OK connection created.

The

5b. Define the server connection for PC2-1 from PC1-1

Similar to the above, this defines the connection on PC2-1, which acts as the server and accepts the association initiated by PC1-1:

create-connection: oname=PC1-1-PC2-1, port=21121, local-endpoint-name=PC2-1, conn-type=SERVER, state-maintenance-role=PASSIVE, is-ipsp=true, enabled=true

Example:

127.0.0.1:10121 PC2-1> create-connection: oname=PC1-1-PC2-1, port=21121, local-endpoint-name=PC2-1, conn-type=SERVER, state-maintenance-role=PASSIVE, is-ipsp=true, enabled=true
OK connection created.

The port in this command is the remote port from which the connection will be initiated. It must match the configuration on node PC1-1, or the connection will not be accepted.

6a. Define the connection IP addresses for PC1-1 to PC2-1

Just as we had to define local endpoint IP addresses earlier, we must now define the remote IP addresses to which the node should connect:

create-conn-ip: oname=PC1-1-PC2-1, ip=127.0.0.1, conn-name=PC1-1-PC2-1

Example:

127.0.0.1:10111 PC1-1> create-conn-ip: oname=PC1-1-PC2-1, ip=127.0.0.1, conn-name=PC1-1-PC2-1
OK conn-ip created.

Again, this extra step is needed because SCTP supports multi-homing.

6b. Define the connection IP addresses for PC2-1 from PC1-1

This is the complement of step 6a: PC2-1 needs to know which IP addresses to expect a connection from:

create-conn-ip: oname=PC1-1-PC2-1, ip=127.0.0.1, conn-name=PC1-1-PC2-1

Example:

127.0.0.1:10121 PC2-1> create-conn-ip: oname=PC1-1-PC2-1, ip=127.0.0.1, conn-name=PC1-1-PC2-1
OK conn-ip created.

The IP address here must match the
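Steps 2 to 6 configure the two ends of one SCTP association, and several values on PC1-1 must agree with values on PC2-1. The Python sketch below cross-checks the walk-through's values. The port rules come from the steps above. The IP rule (at least one address in common on each side) is a simplifying assumption of ours, because this guide does not spell out the SGC's matching rules for multi-homed endpoints. The `AssociationSide` class is hypothetical.

```python
from dataclasses import dataclass, field
from typing import List


@dataclass
class AssociationSide:
    """One node's half of the SCTP association (values from steps 2 to 6)."""
    local_port: int                                       # create-local-endpoint port=
    remote_port: int                                      # create-connection port=
    local_ips: List[str] = field(default_factory=list)    # create-local-endpoint-ip ip=
    remote_ips: List[str] = field(default_factory=list)   # create-conn-ip ip=


def mismatches(client: AssociationSide, server: AssociationSide) -> List[str]:
    """Return a description of each cross-node setting that does not agree."""
    problems = []
    # The client's connection port is the port the server's local endpoint is bound to.
    if client.remote_port != server.local_port:
        problems.append(f"client connects to port {client.remote_port}, "
                        f"server endpoint uses {server.local_port}")
    # The server's connection port is the remote port the association comes from.
    if server.remote_port != client.local_port:
        problems.append(f"server expects source port {server.remote_port}, "
                        f"client endpoint uses {client.local_port}")
    # Assumption: one address in common on each side is treated as sufficient.
    if not set(client.remote_ips) & set(server.local_ips):
        problems.append("no client connection IP matches a server endpoint IP")
    if not set(server.remote_ips) & set(client.local_ips):
        problems.append("no server connection IP matches a client endpoint IP")
    return problems


if __name__ == "__main__":
    pc1_1 = AssociationSide(local_port=21121, remote_port=22100,
                            local_ips=["127.0.0.1"], remote_ips=["127.0.0.1"])
    pc2_1 = AssociationSide(local_port=22100, remote_port=21121,
                            local_ips=["127.0.0.1"], remote_ips=["127.0.0.1"])
    print(mismatches(pc1_1, pc2_1) or "ports and addresses agree")
```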

7a. Connect the AS to the connection on PC1-1

We must now tell the SGC that our AS should use the connection we have defined:

create-as-connection: oname=PC1-1-PC2-1, as-name=PC2, conn-name=PC1-1-PC2-1

Example:

127.0.0.1:10111 PC1-1> create-as-connection: oname=PC1-1-PC2-1, as-name=PC2, conn-name=PC1-1-PC2-1
OK as-connection created.

This

7b. Connect the AS to the connection on PC2-1

create-as-connection: oname=PC1-1-PC2-1, as-name=PC2, conn-name=PC1-1-PC2-1

Example:

127.0.0.1:10121 PC2-1> create-as-connection: oname=PC1-1-PC2-1, as-name=PC2, conn-name=PC1-1-PC2-1
OK as-connection created.

8a. Define the route on PC1-1 to Point Code 2

As the final step, we must define which Destination Point Codes can be reached via our Application Server. Define a Destination Point Code for PC=2 and a route to it via our AS with the following commands:

create-dpc: oname=PC2, dpc=2
create-route: oname=PC2, as-name=PC2, dpc-name=PC2

Example:

127.0.0.1:10111 PC1-1> create-dpc: oname=PC2, dpc=2
OK dpc created.
127.0.0.1:10111 PC1-1> create-route: oname=PC2, as-name=PC2, dpc-name=PC2
OK route created.

8b. Define the route on PC2-1 to Point Code 1

Define a Destination Point Code for PC=1 and a route to it via our AS with the following commands:

create-dpc: oname=PC1, dpc=1
create-route: oname=PC1, as-name=PC2, dpc-name=PC1

Example:

127.0.0.1:10121 PC2-1> create-dpc: oname=PC1, dpc=1
OK dpc created.
127.0.0.1:10121 PC2-1> create-route: oname=PC1, as-name=PC2, dpc-name=PC1
OK route created.

General and M3UA configuration is now complete. In the next section we will check that everything is working correctly.
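The M3UA objects created above refer to each other by name. A route ties a DPC to an AS, and an as-connection ties the AS to a connection. The Python sketch below models PC1-1's objects as plain dictionaries and follows those references to find which connection serves a Destination Point Code. It models the configuration relationships only, not the SGC's internal routing, and the dictionary layout is hypothetical.

```python
# Hypothetical model of the objects created on PC1-1 in steps 1a to 8a.
PC1_1_CONFIG = {
    "as": {"PC2": {"rc": 2, "traffic-maintenance-role": "ACTIVE"}},
    "dpc": {"PC2": 2},
    "route": {"PC2": {"as-name": "PC2", "dpc-name": "PC2"}},
    "connection": {"PC1-1-PC2-1": {"port": 22100, "conn-type": "CLIENT"}},
    "as-connection": {"PC1-1-PC2-1": {"as-name": "PC2", "conn-name": "PC1-1-PC2-1"}},
}


def connections_for_dpc(config: dict, dpc: int) -> list:
    """Follow dpc -> route -> AS -> as-connection -> connection; return connection names."""
    dpc_names = {name for name, value in config["dpc"].items() if value == dpc}
    as_names = {
        route["as-name"]
        for route in config["route"].values()
        if route["dpc-name"] in dpc_names and route["as-name"] in config["as"]
    }
    return sorted(
        link["conn-name"]
        for link in config["as-connection"].values()
        if link["as-name"] in as_names and link["conn-name"] in config["connection"]
    )


if __name__ == "__main__":
    print(connections_for_dpc(PC1_1_CONFIG, 2))  # DPC 2 has a route via AS PC2
    print(connections_for_dpc(PC1_1_CONFIG, 3))  # no route configured for DPC 3
```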

M3UA state inspection

You should now have two SGCs which are connected to each other at the M3UA layer. Before we move on to the upper layers of configuration we should check that everything is working as expected up to this point. If you are confident of your setup and in a hurry you can skip this section.

It is not normally necessary to check state this exhaustively. We do so here, step by step, to familiarize you with the SGC state inspection facilities and to help with troubleshooting.

Note: Most of the commands shown below display both the definition and the state of the configuration objects they examine, and are intended for those modifying, or considering modifying, the SGC configuration. If you are interested only in state rather than configuration, a related family of commands starting with display-info- shows extended state information without any configuration details.

1. Check the display-active-alarm command for problems

The

PC1-1:

127.0.0.1:10111 PC1-1> display-active-alarm:
Found 0 objects.

PC2-1:

127.0.0.1:10121 PC2-1> display-active-alarm:
Found 0 objects.

If, instead, you see one or more alarms, don’t worry. We’ll step through the diagnostics one by one.

2. Check the node state

If something is wrong with the node state or configuration, then nothing will work. Run display-node on both nodes. Both nodes should say that the

3. Check the local endpoint state

The local endpoint must be enabled and active before the connection between the nodes will work. Run display-local-endpoint on both nodes. Both nodes should say that the

4. Check the connection state

The next thing to check, working up the stack, is the SCTP association. Run display-connection on both nodes. Both nodes should say that the

It is often helpful to consult either the active alarms list or the logs when diagnosing connection issues, but that is outside the scope of this walk-through.

5. Check the AS state

The AS should be active on both nodes. Run display-as on both nodes to check. The state should be listed as

6. Check the SCCP state

SCCP is the next layer up. We have not yet configured it, but it should already be able to activate and communicate with its peer. Run this command on both nodes to check:

display-info-remotessninfo

This should show the following output on both nodes:

127.0.0.1:10111 PC1-1> display-info-remotessninfo
Found 2 object(s):
+----------+----------+---------------+
|dpc       |ssn       |status         |
+----------+----------+---------------+
|1         |1         |ALLOWED        |
+----------+----------+---------------+
|2         |1         |ALLOWED        |
+----------+----------+---------------+

This output shows that the SCCP layers on each node are communicating with each other.

If the status shown above is
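If you want to script this check, the bordered tables printed by the display-info- commands are easy to parse. This Python sketch assumes the exact layout shown above: one header row, `|`-separated cells, and `+---` border lines. Other commands or SGC versions may format their output differently, and the function names are hypothetical.

```python
SAMPLE = """\
127.0.0.1:10111 PC1-1> display-info-remotessninfo
Found 2 object(s):
+----------+----------+---------------+
|dpc       |ssn       |status         |
+----------+----------+---------------+
|1         |1         |ALLOWED        |
+----------+----------+---------------+
|2         |1         |ALLOWED        |
+----------+----------+---------------+
"""


def parse_cli_table(output: str) -> list:
    """Turn a '|'-delimited CLI table into a list of dicts keyed by the header row."""
    rows = [
        [cell.strip() for cell in line.strip().strip("|").split("|")]
        for line in output.splitlines()
        if line.strip().startswith("|")  # skips prompts, 'Found ...' and '+---' borders
    ]
    if not rows:
        return []
    header, *data = rows
    return [dict(zip(header, row)) for row in data]


def all_allowed(output: str) -> bool:
    """True only if at least one remote SSN is listed and every status is ALLOWED."""
    entries = parse_cli_table(output)
    return bool(entries) and all(e.get("status") == "ALLOWED" for e in entries)


if __name__ == "__main__":
    print(parse_cli_table(SAMPLE))
    print(all_allowed(SAMPLE))
```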

SCCP configuration

In The Plan we can see that the two Scenario Simulators expect to refer to each other by their global titles as follows:

-

1234: PC=1,SSN=101

-

4321: PC=2,SSN=102

Several inbound and outbound global title translation (GTT) rules are required to allow this to happen, which we will create now.

Also, while not technically necessary, we will configure Concerned Point Codes for each of the two nodes, so that they will inform each other about changes to the state of interesting SSNs.

All the steps below are in two parts: the part "a" commands must be run on the management console connected to node PC1-1, and the part "b" commands must be run on the management console connected to node PC2-1. If this becomes confusing, check the examples given, which indicate the correct management console by the port number in the prompt.

1a |

Outbound GTT setup on PC1-1

Run the following commands to set up outbound global title translation on PC1-1:

create-outbound-gt: oname=4321, addrinfo=4321
create-outbound-gtt: oname=4321, gt=4321, dpc=2, priority=5

Example:

127.0.0.1:10111 PC1-1> create-outbound-gt: oname=4321, addrinfo=4321
OK outbound-gt created.
127.0.0.1:10111 PC1-1> create-outbound-gtt: oname=4321, gt=4321, dpc=2, priority=5
OK outbound-gtt created.

This defines a Global Title and then creates a translation rule which will cause messages with that GT in the Called Party Address to be routed to our peer at PC=2.

1b |

Outbound GTT setup on PC2-1

Run the following commands to set up outbound global title translation on PC2-1:

create-outbound-gt: oname=1234, addrinfo=1234
create-outbound-gtt: oname=1234, gt=1234, dpc=1, priority=5

Example:

127.0.0.1:10121 PC1-1> create-outbound-gt: oname=1234, addrinfo=1234
OK outbound-gt created.
127.0.0.1:10121 PC1-1> create-outbound-gtt: oname=1234, gt=1234, dpc=1, priority=5
OK outbound-gtt created.

This defines a Global Title and then creates a translation rule which will cause messages with that GT in the Called Party Address to be routed to our peer at PC=1.

2a |

Inbound GTT setup on PC1-1

Run the following to set up inbound GTT on PC1-1:

create-inbound-gtt: oname=1234, addrinfo=1234, ssn=101
create-outbound-gt: oname=1234, addrinfo=1234
create-outbound-gtt: oname=1234, gt=1234, dpc=1, priority=5

Example:

127.0.0.1:10111 PC1-1> create-inbound-gtt: oname=1234, addrinfo=1234, ssn=101
OK inbound-gtt created.
127.0.0.1:10111 PC1-1> create-outbound-gt: oname=1234, addrinfo=1234
OK outbound-gt created.
127.0.0.1:10111 PC1-1> create-outbound-gtt: oname=1234, gt=1234, dpc=1, priority=5
OK outbound-gtt created.

The first command creates an inbound GTT rule for the Global Title on which we expect to accept traffic. The second and third commands may look surprising, as they create an outbound GTT rule. This is the correct configuration for our network: SCCP service messages (UDTS and XUDTS) may be generated locally in response to traffic we are attempting to send, and these service messages are routed as outbound messages.

2b |

Inbound GTT setup on PC2-1

Run the following to set up inbound GTT on PC2-1:

create-inbound-gtt: oname=4321, addrinfo=4321, ssn=102
create-outbound-gt: oname=4321, addrinfo=4321
create-outbound-gtt: oname=4321, gt=4321, dpc=2, priority=5

Example:

GC[127.0.0.1:10121]> create-inbound-gtt: oname=4321, addrinfo=4321, ssn=102
OK inbound-gtt created.
127.0.0.1:10121 PC1-1> create-outbound-gt: oname=4321, addrinfo=4321
OK outbound-gt created.
127.0.0.1:10121 PC1-1> create-outbound-gtt: oname=4321, gt=4321, dpc=2, priority=5
OK outbound-gtt created.

3a |

Create the Concerned Point Code on PC1-1

Run the following to configure PC1-1 to announce SSN changes for SSN=101 to the PC2 cluster:

create-cpc: oname=PC2-101, dpc=2, ssn=101

Example:

127.0.0.1:10111 PC1-1> create-cpc: oname=PC2-101, dpc=2, ssn=101
OK cpc created.

3b |

Create the Concerned Point Code on PC2-1

Run the following to configure PC2-1 to announce SSN changes for SSN=102 to the PC1 cluster:

create-cpc: oname=PC1-102, dpc=1, ssn=102

Example:

127.0.0.1:10121 PC1-1> create-cpc: oname=PC1-102, dpc=1, ssn=102
OK cpc created.

This completes our SCCP configuration, which we will check in the next section.
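The six commands for each node follow a single pattern, so they can be generated from the values in The Plan. This is a minimal sketch: sccp_node_commands is a hypothetical helper that only prints the commands for pasting into the management console (it does not contact the SGC), and the fixed priority of 5 and the PC<dpc>-<ssn> CPC naming simply mirror the examples above.

```shell
#!/usr/bin/env bash
# Hypothetical helper: print the SCCP configuration commands used in steps
# 1a-3b for one node. Paste the output into that node's management console.
#   $1 local GT digits   $2 local point code   $3 local SSN
#   $4 remote GT digits  $5 remote point code
sccp_node_commands() {
  local lgt=$1 lpc=$2 lssn=$3 rgt=$4 rpc=$5
  # Step 1: outbound GTT towards the peer's Global Title
  printf 'create-outbound-gt: oname=%s, addrinfo=%s\n' "$rgt" "$rgt"
  printf 'create-outbound-gtt: oname=%s, gt=%s, dpc=%s, priority=5\n' "$rgt" "$rgt" "$rpc"
  # Step 2: inbound GTT for our own GT, plus the outbound rule that routes
  # locally generated UDTS/XUDTS service messages
  printf 'create-inbound-gtt: oname=%s, addrinfo=%s, ssn=%s\n' "$lgt" "$lgt" "$lssn"
  printf 'create-outbound-gt: oname=%s, addrinfo=%s\n' "$lgt" "$lgt"
  printf 'create-outbound-gtt: oname=%s, gt=%s, dpc=%s, priority=5\n' "$lgt" "$lgt" "$lpc"
  # Step 3: concerned point code, so the peer hears about our SSN state
  printf 'create-cpc: oname=PC%s-%s, dpc=%s, ssn=%s\n' "$rpc" "$lssn" "$rpc" "$lssn"
}
```

For PC1-1 this would be called as sccp_node_commands 1234 1 101 4321 2, and for PC2-1 as sccp_node_commands 4321 2 102 1234 1.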

SCCP state inspection

We now have two fully configured SCCP layers. We will now check their state to make sure they will work as expected.

1 |

Check the outbound GTT state on PC1-1

The following command will show the current state of configured outbound GTT rules:

display-info-ogtinfo: column=addrInfo, column=connId, column=rc, column=dpc

Example on PC1-1:

127.0.0.1:10111 PC1-1> display-info-ogtinfo: column=addrInfo, column=connId, column=rc, column=dpc
Found 2 object(s):
+---------------+---------------+----------+----------+
|addrInfo       |connId         |rc        |dpc       |
+---------------+---------------+----------+----------+
|1234           |               |-1        |1         |
+---------------+---------------+----------+----------+
|4321           |PC1-1-PC2-1    |2         |2         |
+---------------+---------------+----------+----------+

For GT 1234 we can see that:

This GT will be routed to the local SGC.

For GT 4321 we can see that:

This GT will be routed to PC2-1 using the specified connection and Routing Context.

Example on PC2-1:

127.0.0.1:10121 PC1-1> display-info-ogtinfo: column=addrInfo, column=connId, column=rc, column=dpc
Found 2 object(s):
+---------------+---------------+----------+----------+
|addrInfo       |connId         |rc        |dpc       |
+---------------+---------------+----------+----------+
|1234           |PC1-1-PC2-1    |2         |1         |
+---------------+---------------+----------+----------+
|4321           |               |-1        |2         |
+---------------+---------------+----------+----------+
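The same table can be summarized mechanically. This is a minimal sketch: summarize_ogtinfo is a hypothetical helper that assumes the four columns requested above (addrInfo, connId, rc, dpc) in that order, and, following the examples, treats a row with rc -1 and an empty connId as a GT routed to the local SGC.

```shell
#!/usr/bin/env bash
# Hypothetical helper: reads display-info-ogtinfo output (columns addrInfo,
# connId, rc, dpc) on stdin and prints one routing summary line per GT.
summarize_ogtinfo() {
  awk -F'|' '
    NF >= 5 {
      gt = $2; conn = $3; rc = $4; dpc = $5
      gsub(/[ \t]/, "", gt); gsub(/[ \t]/, "", conn)
      gsub(/[ \t]/, "", rc); gsub(/[ \t]/, "", dpc)
      if (gt == "addrInfo" || gt == "") next       # skip the header row
      if (rc == "-1" && conn == "")
        printf "%s -> local SGC (dpc %s)\n", gt, dpc
      else
        printf "%s -> dpc %s via %s, rc %s\n", gt, dpc, conn, rc
    }
  '
}
```

On PC1-1 this reports GT 1234 as local and GT 4321 as routed to dpc 2 over PC1-1-PC2-1 with Routing Context 2.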


2 |

Check the local SSN state

The command: display-info-localssninfo will list the state of all SSNs which are either:

Example on PC1-1:

GC[127.0.0.1:10111]> display-info-localssninfo: column=ssn, column=status
Found 2 object(s):
+----------+---------------+
|ssn       |status         |
+----------+---------------+
|1         |ALLOWED        |
+----------+---------------+
|101       |PROHIBITED     |
+----------+---------------+

Example on PC2-1:

127.0.0.1:10121 PC1-1> display-info-localssninfo: column=ssn, column=status
Found 2 object(s):
+----------+---------------+
|ssn       |status         |
+----------+---------------+
|1         |ALLOWED        |
+----------+---------------+
|102       |PROHIBITED     |
+----------+---------------+

Scenario Simulator installation

This quick start walk-through will use the OC Scenario Simulator to test the network, rather than Rhino with CGIN, for simplicity.

For this quick start we assume that your Scenario Simulator package is shipped either with an IN Scenario Pack which does not support OCSS7 (which is true for Scenario Simulator 2.2.0.x) or with an obsolete version of the IN Scenario Pack. If you know that your Scenario Simulator contains a suitable IN Scenario Pack, you may skip the rest of this section after completing step 2.

1 |

Unpack the Scenario Simulator archive file

unzip scenario-simulator-package-VERSION.zip

(replacing

This creates the distribution directory,

Example:

$ unzip scenario-simulator-package-2.3.0.6.zip
Archive: scenario-simulator-package-2.3.0.6.zip
creating: scenario-simulator-2.3.0.6/
creating: scenario-simulator-2.3.0.6/licenses/
inflating: scenario-simulator-2.3.0.6/licenses/LICENSE-XPathOverSchema.txt
inflating: scenario-simulator-2.3.0.6/licenses/LICENSE-antlr.txt
[...]

2 |

Change directory into the Scenario Simulator directory

cd scenario-simulator-VERSION

(replacing

Example:

$ cd scenario-simulator-2.3.0.6/

3 |

Install the new IN Scenario Pack

We want to replace the old IN Scenario Pack with the new one, which can be done with the following commands. Please ensure that you are in the Scenario Simulator’s installation directory before running these commands.

rm -r in-examples/ protocols/in-scenario-pack-*
unzip -o ../in-scenario-pack-VERSION.zip

(replacing

Example:

$ rm -r in-examples/ protocols/in-scenario-pack-*
$ unzip -o ../in-scenario-pack-2.0.0.0.zip
Archive: ../in-scenario-pack-2.0.0.0.zip
inflating: protocols/in-scenario-pack-1.5.3.jar
creating: in-examples/
creating: in-examples/2sims/
creating: in-examples/2sims/config/
creating: in-examples/2sims/config/loopback/
creating: in-examples/2sims/config/mach7/
creating: in-examples/2sims/config/ocss7/
creating: in-examples/2sims/config/signalware/
creating: in-examples/2sims/scenarios/
creating: in-examples/3sims/
creating: in-examples/3sims/config/
creating: in-examples/3sims/config/loopback/
creating: in-examples/3sims/config/mach7/
creating: in-examples/3sims/config/ocss7/
creating: in-examples/3sims/config/signalware/
creating: in-examples/3sims/scenarios/
inflating: CHANGELOGS/CHANGELOG-in.txt
inflating: README/README-in.txt
inflating: in-examples/2sims/config/loopback/cgin-tcapsim-endpoint1.properties
inflating: in-examples/2sims/config/loopback/cgin-tcapsim-endpoint2.properties
inflating: in-examples/2sims/config/loopback/setup-sim1.commands
inflating: in-examples/2sims/config/loopback/setup-sim2.commands
inflating: in-examples/2sims/config/loopback/tcapsim-gt-table.txt
inflating: in-examples/2sims/config/mach7/mach7-endpoint1.properties
inflating: in-examples/2sims/config/mach7/mach7-endpoint2.properties
inflating: in-examples/2sims/config/mach7/setup-mach7-endpoint1.commands
inflating: in-examples/2sims/config/mach7/setup-mach7-endpoint2.commands
inflating: in-examples/2sims/config/ocss7/ocss7-endpoint1.properties
inflating: in-examples/2sims/config/ocss7/ocss7-endpoint2.properties
inflating: in-examples/2sims/config/ocss7/setup-sim-endpoint1.commands
inflating: in-examples/2sims/config/ocss7/setup-sim-endpoint2.commands
inflating: in-examples/2sims/config/setup-examples-sim1.commands
inflating: in-examples/2sims/config/setup-examples-sim2.commands
inflating: in-examples/2sims/config/signalware/setup-signalware-endpoint1.commands
inflating: in-examples/2sims/config/signalware/setup-signalware-endpoint2.commands
inflating: in-examples/2sims/config/signalware/signalware-endpoint1.properties
inflating: in-examples/2sims/config/signalware/signalware-endpoint2.properties
inflating: in-examples/2sims/scenarios/CAPv3-Demo-ContinueRequest.scen
inflating: in-examples/2sims/scenarios/CAPv3-Demo-ReleaseCallRequest.scen
inflating: in-examples/2sims/scenarios/INAP-SSP-SCP.scen
inflating: in-examples/3sims/config/loopback/cgin-tcapsim-endpoint1.properties
inflating: in-examples/3sims/config/loopback/cgin-tcapsim-endpoint2.properties
inflating: in-examples/3sims/config/loopback/cgin-tcapsim-endpoint3.properties
inflating: in-examples/3sims/config/loopback/setup-sim1.commands
inflating: in-examples/3sims/config/loopback/setup-sim2.commands
inflating: in-examples/3sims/config/loopback/setup-sim3.commands
inflating: in-examples/3sims/config/loopback/tcapsim-gt-table.txt
inflating: in-examples/3sims/config/mach7/mach7-endpoint1.properties
inflating: in-examples/3sims/config/mach7/mach7-endpoint2.properties
inflating: in-examples/3sims/config/mach7/mach7-endpoint3.properties
inflating: in-examples/3sims/config/mach7/setup-mach7-endpoint1.commands
inflating: in-examples/3sims/config/mach7/setup-mach7-endpoint2.commands
inflating: in-examples/3sims/config/mach7/setup-mach7-endpoint3.commands
inflating: in-examples/3sims/config/ocss7/ocss7-endpoint1.properties
inflating: in-examples/3sims/config/ocss7/ocss7-endpoint2.properties
inflating: in-examples/3sims/config/ocss7/ocss7-endpoint3.properties
inflating: in-examples/3sims/config/ocss7/setup-sim-endpoint1.commands
inflating: in-examples/3sims/config/ocss7/setup-sim-endpoint2.commands
inflating: in-examples/3sims/config/ocss7/setup-sim-endpoint3.commands
inflating: in-examples/3sims/config/setup-examples-sim1.commands
inflating: in-examples/3sims/config/setup-examples-sim2.commands
inflating: in-examples/3sims/config/setup-examples-sim3.commands
inflating: in-examples/3sims/config/signalware/setup-signalware-endpoint1.commands
inflating: in-examples/3sims/config/signalware/setup-signalware-endpoint2.commands
inflating: in-examples/3sims/config/signalware/setup-signalware-endpoint3.commands
inflating: in-examples/3sims/config/signalware/signalware-endpoint1.properties
inflating: in-examples/3sims/config/signalware/signalware-endpoint2.properties
inflating: in-examples/3sims/config/signalware/signalware-endpoint3.properties
inflating: in-examples/3sims/scenarios/CAPv2-Relay.scen
inflating: in-examples/3sims/scenarios/INAP-SSP-SCP-HLR.scen
inflating: in-examples/3sims/scenarios/MAP-MT-SMS-DeliveryAbsentSubscriber.scen
inflating: in-examples/3sims/scenarios/MAP-MT-SMS-DeliveryPresentSubscriber.scen
inflating: in-examples/README-in-examples.txt
inflating: licenses/LICENSE-netty.txt
inflating: licenses/LICENSE-slf4j.txt
inflating: licenses/README-LICENSES-in-scenario-pack.txt

Scenario Simulator configuration

We will now configure two Scenario Simulator instances and connect them to the cluster. This work should be done in the Scenario Simulator installation directory, which is where the steps from the previous section left us.

The Scenario Simulator and CGIN use identical configuration properties and values when using OCSS7; the only difference between the two is the procedure used for setup and configuration.

1 |

Set the OCSS7 connection properties for Simulator 1

Edit the file

local-sccp-address = type=C7,ri=gt,ssn=101,digits=1234,national=true

and

ocss7.sgcs = 127.0.0.1:12011

The port in the

2 |

Set the OCSS7 connection properties for Simulator 2

Edit the file

local-sccp-address = type=C7,ri=gt,ssn=102,digits=4321,national=true

and

ocss7.sgcs = 127.0.0.1:12021

The port in the
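The property edits in steps 1 and 2 can also be scripted. The file name is not shown above; the startup output in the test section shows the simulators loading in-examples/2sims/config/ocss7/ocss7-endpoint1.properties and ocss7-endpoint2.properties, so those are the likely targets, but treat that as an assumption and check your installation. set_property is a hypothetical helper, not part of the Scenario Simulator.

```shell
#!/usr/bin/env bash
# Hypothetical helper: set "key = value" in a Java-style properties file.
# The first uncommented line defining the key is replaced, later duplicates
# are dropped, and the entry is appended if the key is not present at all.
# Example (assumed file name, Simulator 1):
#   f=in-examples/2sims/config/ocss7/ocss7-endpoint1.properties
#   set_property "$f" local-sccp-address 'type=C7,ri=gt,ssn=101,digits=1234,national=true'
#   set_property "$f" ocss7.sgcs 127.0.0.1:12011
set_property() {
  local file=$1 key=$2 value=$3 tmp
  tmp=$(mktemp) || return 1
  awk -v k="$key" -v v="$value" '
    {
      line = $0
      sub(/^[ \t]+/, "", line)                 # ignore leading whitespace
      # exact key match: key followed by optional blanks and then = or :
      if (index(line, k) == 1 && substr(line, length(k) + 1) ~ /^[ \t]*[=:]/) {
        if (!done) print k " = " v
        done = 1
        next
      }
      print
    }
    END { if (!done) print k " = " v }
  ' "$file" > "$tmp" && cat "$tmp" > "$file"   # cat keeps the file's mode
  local status=$?
  rm -f "$tmp"
  return "$status"
}
```

Writing through cat rather than mv leaves the original file's permissions untouched.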

3 |

Set the Scenario Simulator endpoint addresses

Edit the following files:

and replace the two lines beginning:

set-endpoint-address endpoint1
set-endpoint-address endpoint2

with

set-endpoint-address endpoint1 type=c7,ri=gt,pc=1,ssn=101,digits=1234,national=true
set-endpoint-address endpoint2 type=c7,ri=gt,pc=2,ssn=102,digits=4321,national=true
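This edit can be done with sed. The list of files is not shown above; the startup output in the test section shows in-examples/2sims/config/ocss7/setup-sim-endpoint1.commands (and its endpoint2 counterpart) issuing set-endpoint-address commands, so those files are assumed here. set_endpoint_addresses is a hypothetical helper and relies on GNU sed for in-place editing.

```shell
#!/usr/bin/env bash
# Hypothetical helper: rewrite the two set-endpoint-address lines in a
# Scenario Simulator commands file with the addresses from The Plan.
# Requires GNU sed (-i edits the file in place).
# Example (assumed file names):
#   for f in in-examples/2sims/config/ocss7/setup-sim-endpoint1.commands \
#            in-examples/2sims/config/ocss7/setup-sim-endpoint2.commands; do
#     set_endpoint_addresses "$f"
#   done
set_endpoint_addresses() {
  sed -i \
    -e 's/^set-endpoint-address endpoint1 .*$/set-endpoint-address endpoint1 type=c7,ri=gt,pc=1,ssn=101,digits=1234,national=true/' \
    -e 's/^set-endpoint-address endpoint2 .*$/set-endpoint-address endpoint2 type=c7,ri=gt,pc=2,ssn=102,digits=4321,national=true/' \
    "$1"
}
```

Lines other than the two set-endpoint-address commands are left unchanged.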

The Scenario Simulators are now fully configured and ready to test our network.

Test the network

We will now test the network using the Metaswitch Scenario Simulator and one of the example IN scenarios included with it.

1 |

Start the scenario simulators

We need two Scenario Simulator instances for this test: one to initiate our test traffic and one to respond. Start them with these two commands:

./scenario-simulator.sh -f in-examples/2sims/config/ocss7/setup-sim-endpoint1.commands -f in-examples/2sims/config/setup-examples-sim1.commands

and

./scenario-simulator.sh -f in-examples/2sims/config/ocss7/setup-sim-endpoint2.commands -f in-examples/2sims/config/setup-examples-sim2.commands

Example for Simulator 1:

$ ./scenario-simulator.sh -f in-examples/2sims/config/ocss7/setup-sim-endpoint1.commands -f in-examples/2sims/config/setup-examples-sim1.commands
Starting JVM...
Processing commands from file at in-examples/2sims/config/ocss7/setup-sim-endpoint1.commands
Processing command: set-endpoint-address endpoint1 type=C7,ri=gt,digits=1234
Processing command: set-endpoint-address endpoint2 type=C7,ri=gt,digits=4321
Processing command: create-local-endpoint endpoint1 cgin -propsfile in-examples/2sims/config/ocss7/ocss7-endpoint1.properties
Initializing local endpoint "endpoint1" ...
Local endpoint initialized.
Finished reading commands from file
Processing commands from file at in-examples/2sims/config/setup-examples-sim1.commands
Processing command: bind-role SSP-Loadgen endpoint1
Processing command: bind-role SCP-Rhino endpoint2
Processing command: wait-until-operational 60000
Simulator is operational
Processing command: load-scenario in-examples/2sims/scenarios/INAP-SSP-SCP.scen
Playing role "SSP-Loadgen" in initiating scenario "INAP-SSP-SCP" with dialogs [SSP-SCP]
Processing command: load-scenario in-examples/2sims/scenarios/CAPv3-Demo-ContinueRequest.scen
Playing role "SSP-Loadgen" in initiating scenario "CAPv3-Demo-ContinueRequest" with dialogs [SSP-SCP]
Processing command: load-scenario in-examples/2sims/scenarios/CAPv3-Demo-ReleaseCallRequest.scen
Playing role "SSP-Loadgen" in initiating scenario "CAPv3-Demo-ReleaseCallRequest" with dialogs [SSP-SCP]
Finished reading commands from file
Ready to start

Please type commands... (type "help" <ENTER> for command help)
>

Example for Simulator 2:

$ ./scenario-simulator.sh -f in-examples/2sims/config/ocss7/setup-sim-endpoint2.commands -f in-examples/2sims/config/setup-examples-sim2.commands
Starting JVM...
Processing commands from file at in-examples/2sims/config/ocss7/setup-sim-endpoint2.commands
Processing command: set-endpoint-address endpoint1 type=C7,ri=gt,digits=1234
Processing command: set-endpoint-address endpoint2 type=C7,ri=gt,digits=4321
Processing command: create-local-endpoint endpoint2 cgin -propsfile in-examples/2sims/config/ocss7/ocss7-endpoint2.properties
Initializing local endpoint "endpoint2" ...
Local endpoint initialized.
Finished reading commands from file
Processing commands from file at in-examples/2sims/config/setup-examples-sim2.commands
Processing command: bind-role SSP-Loadgen endpoint1
Processing command: bind-role SCP-Rhino endpoint2
Processing command: wait-until-operational 60000
Simulator is operational
Processing command: load-scenario in-examples/2sims/scenarios/INAP-SSP-SCP.scen
Playing role "SCP-Rhino" in receiving scenario "INAP-SSP-SCP" with dialogs [SSP-SCP]
Processing command: load-scenario in-examples/2sims/scenarios/CAPv3-Demo-ContinueRequest.scen
Playing role "SCP-Rhino" in receiving scenario "CAPv3-Demo-ContinueRequest" with dialogs [SSP-SCP]
Processing command: load-scenario in-examples/2sims/scenarios/CAPv3-Demo-ReleaseCallRequest.scen
Playing role "SCP-Rhino" in receiving scenario "CAPv3-Demo-ReleaseCallRequest" with dialogs [SSP-SCP]
Finished reading commands from file
Ready to start

Please type commands... (type "help" <ENTER> for command help)
>

2 |

Check the remote SSN information

Before running a test session, let’s pause to check the display-info-remotessninfo command, which should now show the following on both nodes:

127.0.0.1:10111 PC1-1> display-info-remotessninfo
Found 4 object(s):
+----------+----------+---------------+
|dpc       |ssn       |status         |
+----------+----------+---------------+
|1         |1         |ALLOWED        |
+----------+----------+---------------+
|1         |101       |ALLOWED        |
+----------+----------+---------------+
|2         |1         |ALLOWED        |
+----------+----------+---------------+
|2         |102       |ALLOWED        |
+----------+----------+---------------+

From this we can see that both SGCs have registered the connected simulators and informed the Concerned Point Codes about the state change for the SSN used by each simulator.

3 |

Run a test session

On Simulator 1 run:

run-session CAPv3-Demo-ContinueRequest

This will run the test scenario, which is a basic CAPv3 IDP / CON scenario.

Example:

> run-session CAPv3-Demo-ContinueRequest
Send --> OpenRequest to endpoint2
Send --> InitialDP (Request) to endpoint2
Send --> Delimiter to endpoint2
Recv <-- OpenAccept from endpoint2
Recv <-- Continue (Request) from endpoint2
Recv <-- Close from endpoint2
Outcome of "CAPv3-Demo-ContinueRequest" session: Matched scenario definition "CAPv3-Demo-ContinueRequest"

Installing the SGC

This section of the manual covers the installation, basic configuration, and control of the SGC component of OCSS7.

The Rhino-side component of OCSS7, the TCAP stack, is automatically installed with CGIN and has no special installation procedure or requirements beyond those of Rhino and CGIN. Information on configuring the TCAP stack component can be found in TCAP Stack configuration in the CGIN manual.

Checking prerequisites

Before installing OCSS7, make sure you have the required:

-

operating system, with

lksctp-tools installed, and

Before attempting a production installation please also see Network Architecture Planning.

Configuring network features


Before installing OCSS7, please configure the following network features:

| Feature | What to configure |

|---|---|

IP address |

Make sure the system has an IPv4 or IPv6 address and is visible on the network. |

Host names |

Make sure that the system can resolve |

Firewall |

Ensure that any firewall installed is configured to permit OCSS7 related traffic. The rules required will depend on the Hazelcast network configuration chosen. See the OCSS7 manual section Hazelcast cluster configuration and the Hazelcast 3.7 Reference Manual section Setting Up Clusters for more details on Hazelcast network configurations. In its default configuration the SGC uses multicast UDP to discover other cluster members:

Additionally, Hazelcast uses TCP to form connections between cluster members. By default it listens on all available network interfaces for incoming TCP connections. This uses ports in the range

TCP is also used for inter-node communication (comm switch) and SGC to TCAP stack communication. The ports and addresses for these are user configurable and described in General Configuration. SCTP is used by M3UA associations. See M3UA Configuration. |

IPv6 considerations

When using IPv6 addressing, remember to configure the PREFER_IPV4 property in the SGC_HOME/config/sgcenv file. For details, please see Configuring SGC_HOME/config/sgcenv.

User process tuning

Ensure that the user that OCSS7 will be running under has a soft limit of no less than 4096 user processes.


The number of permitted user processes may be determined at runtime using the ulimit command; for example

ulimit -Su

This value may be changed by editing

It may also be necessary to increase the hard limit:

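A startup or provisioning script can check the soft limit before launching the SGC. This is a minimal sketch: nproc_limit_ok is a hypothetical helper that compares a limit (by default the current value of ulimit -Su) against the 4096 recommended above, treating "unlimited" as sufficient.

```shell
#!/usr/bin/env bash
# Hypothetical helper: succeed if a soft user-process limit, as printed by
# `ulimit -Su`, is at least the required minimum (default 4096).
#   $1 limit to check (default: current soft limit)   $2 minimum (default 4096)
# Example, run as the user that will own the SGC process:
#   nproc_limit_ok || echo "soft nproc limit $(ulimit -Su) is below 4096"
nproc_limit_ok() {
  local limit=${1:-$(ulimit -Su)} required=${2:-4096}
  [ "$limit" = "unlimited" ] && return 0
  case $limit in
    ''|*[!0-9]*) return 1 ;;     # not a plain number: treat as failure
  esac
  [ "$limit" -ge "$required" ]
}
```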

SCTP tuning

For optimal performance, tune these kernel parameters:

| Parameter | Recommended value | What it specifies |
|---|---|---|
| | | Default receive buffer size (in bytes) |
| | | Default send buffer size (in bytes) |
| | | Maximum receive buffer size (in bytes). This value limits the |
| | | Maximum send buffer size (in bytes). This value limits the |
| | | Minimum retransmission timeout (in ms). This should be greater than the |
| | | Delayed acknowledgement (SACK) timeout (in ms). Should be lower than the retransmission timeout of the remote SCTP endpoints. |
| | | SCTP heartbeat interval (in ms) |

Kernel parameters can be changed
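Before changing anything, it helps to record the current values. The sketch below reads them from /proc/sys. The parameter names are an assumption: they are the standard Linux sysctls that match the descriptions in the table (net.core.rmem_default, net.core.wmem_default, net.core.rmem_max, net.core.wmem_max, net.sctp.rto_min, net.sctp.sack_timeout, net.sctp.hb_interval), and the net.sctp entries only exist once the sctp kernel module is loaded. The recommended values are not reproduced here.

```shell
#!/usr/bin/env bash
# Assumption: these standard Linux sysctls correspond to the rows of the
# table above (buffer sizes in bytes, SCTP timers in ms).
SCTP_TUNING_PARAMS="net.core.rmem_default net.core.wmem_default
net.core.rmem_max net.core.wmem_max
net.sctp.rto_min net.sctp.sack_timeout net.sctp.hb_interval"

# Print "name = value" for each parameter. Values are read from /proc/sys,
# or from another directory tree passed as $1 (handy for testing).
show_sctp_tuning() {
  local root=${1:-/proc/sys} name path
  for name in $SCTP_TUNING_PARAMS; do
    path="$root/$(printf '%s' "$name" | tr . /)"   # net.sctp.rto_min -> net/sctp/rto_min
    if [ -r "$path" ]; then
      printf '%s = %s\n' "$name" "$(cat "$path")"
    else
      printf '%s = (not available)\n' "$name"
    fi
  done
}
```

Running show_sctp_tuning with no argument reports the live kernel values, one parameter per line.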

SGC Installation

To install OCSS7, unpack and configure the first node, then create any additional nodes.

Unpack and configure

SGC_HOME

The following instructions use

To begin the SGC installation and create the first node:

1 |

Unpack the SGC archive file

Run:

unzip ocss7-package-VERSION.zip

(replacing

This creates the distribution directory,

2 |

Make sure that the

3 |

Configure basic cluster / node information

If your installation will use more than a single node in a SGC cluster, then:

If you are planning to use more than one SGC cluster in the same local network then:


Creating additional nodes

After installing the first SGC node in a cluster, you can add more nodes by either:

-

copying the installation directory of an existing node, and changing the ss7.instance property in SGC_HOME/config/SGC.properties to a value unique among all the other nodes in the cluster,

or

-

repeating the installation steps for another node, and then:

-

setting the ss7.instance property in SGC_HOME/config/SGC.properties to a value unique among all other nodes in the cluster,

-

setting the hazelcast.group property in SGC_HOME/config/SGC.properties to the value chosen as the cluster name, and

-

repeating any other installation customization steps.
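The first option can be scripted. This is a minimal sketch: clone_sgc_node is a hypothetical helper that copies an existing node's installation directory and rewrites ss7.instance in the copy's config/SGC.properties. It assumes the property is written as ss7.instance=... (optionally with blanks around =), relies on GNU cp and sed, and copies everything else, including any existing log files, unchanged.

```shell
#!/usr/bin/env bash
# Hypothetical helper: create a new SGC node from an existing one.
#   $1 existing node directory (SGC_HOME of the source node)
#   $2 new node directory (must not exist yet)
#   $3 new ss7.instance value, unique within the cluster (no "/" characters)
clone_sgc_node() {
  local src=$1 dst=$2 instance=$3
  local props="$dst/config/SGC.properties"
  if [ -e "$dst" ]; then
    echo "refusing to overwrite existing $dst" >&2
    return 1
  fi
  cp -a "$src" "$dst" || return 1
  if [ ! -f "$props" ]; then
    echo "missing $props" >&2
    return 1
  fi
  # Replace only the value of ss7.instance, keeping the rest of the file.
  sed -i -E "s/^([[:space:]]*ss7\.instance[[:space:]]*=).*/\1${instance}/" "$props"
  # Fail if the property was not present (nothing was rewritten).
  grep -Eq "^[[:space:]]*ss7\.instance[[:space:]]*=${instance}\$" "$props"
}
```

For example, clone_sgc_node /opt/sgc/PC1-1 /opt/sgc/PC1-2 PC1-2 would prepare a second node directory; the paths and instance names here are illustrative only.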

Layout of the SGC installation

A typical SGC installation contains these subdirectories:

| Directory | Contents |
|---|---|
| | (SGC installation directory) |
| | SGC management scripts |
| | command line interface installation, including start scripts, configuration, and logs |
| | configuration files which may be edited by the user as required |
| | supplementary documentation included as a convenience, such as SNMP MIB files |
| | Java libraries used by the SGC |
| | third-party software licenses |
| | log output from the SGC |
| | persisted cluster configuration ( |

Running the SGC

SGC operations

JAVA_HOME

The SGC script expects the

The SGC is started and stopped using the SGC_HOME/bin/sgc script.

The sgc script runs SGC under a watchdog: if the SGC process exits for an unrecognized reason, it is automatically restarted. Output from the SGC and from the watchdog script is redirected into a startup log file. The startup log files are in the SGC_HOME/logs directory and are named startup.<startup-time>. If startup fails for any reason, details about the failure should be available in the startup log file.

The sgc script is configured in SGC_HOME/config/sgcenv. The sgcenv file contains JVM parameters which cannot be provided in the SGC.properties file.

The sgc script can be run with the following arguments:

| Command argument | Optional arguments | Description |
|---|---|---|
| | | Starts the SGC using the configuration from |
| | | Stops the SGC. Without the |
| | | Equivalent of |
| | | Runs the SGC in test mode. In test mode, SGC runs in the foreground and logging is configured in |
| | | Runs the SGC in foreground mode. SGC is not daemonized. |
| | | Prints the status of SGC and returns one of these LSB-compatible exit codes: |

For example:

| Start SGC | |
|---|---|
| Stop SGC | |
| Check SGC status | |
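Because the status command returns LSB-compatible exit codes, monitoring scripts can branch on the code rather than parse text. The specific codes the sgc script returns are not reproduced above, so the mapping below follows the general LSB convention for the status action (0 running, 1 dead with a pid file, 2 dead with a lock file, 3 not running, 4 unknown). The function name is hypothetical and the invocation "$SGC_HOME/bin/sgc" status is an assumption based on the command descriptions.

```shell
#!/usr/bin/env bash
# Translate an LSB "status" exit code into text (general LSB convention;
# the sgc script may use only a subset of these codes).
lsb_status_text() {
  case $1 in
    0) echo "running" ;;
    1) echo "dead, pid file exists" ;;
    2) echo "dead, lock file exists" ;;
    3) echo "not running" ;;
    4) echo "status unknown" ;;
    *) echo "unrecognized status code $1" ;;
  esac
}
# Example (assumed invocation):
#   "$SGC_HOME/bin/sgc" status >/dev/null 2>&1
#   echo "SGC: $(lsb_status_text $?)"
```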

Configuring SGC_HOME/config/sgcenv

The SGC_HOME/config/sgcenv file contains configuration parameters for the sgc script. The following settings are supported:

| Variable name | Description | Valid values | Default |

|---|---|---|---|

|

Location of the JVM home directory. |

||

|

Host that SGC should bind to in order to listen for incoming JMX connections. |

IPv4 or IPv6 address |

|

|

Port where SGC binds for incoming JMX connections. It is not recommended to use a port in the ephemeral range, as these ports are used for short-lived TCP connections and may result in the SGC failing to start if the port is in use by another application. The ephemeral port range may be queried with |

|

|

|

Whether or not the JMX connection should be secured with SSL/TLS. For details, please see Securing the SGC JMX management connection with SSL/TLS. |

|

|

|

Whether or not the SGC should require a trusted client certificate for an SSL/TLS-secured JMX connection. For details, please see Securing the SGC JMX management connection with SSL/TLS. |

||

|

Path to the configuration file with properties used to secure the JMX management connection. For details, please see Securing the SGC JMX management connection with SSL/TLS. |

||

|

Password used during generation of the key store and trust store used to secure the JMX management connection. For details, please see Securing the SGC JMX management connection with SSL/TLS. |

|

|

|

Maximum size of the JVM heap space. For details, please see Configuring the Java Heap. |

||

|

Initial size of the JVM heap space. |

||

|

The size (minimum and maximum) of the JVM new generation. |

|

|

|

Full override of the default garbage collection settings. |

||

|

Additional JVM parameters. Modifications should add to the existing |

||

|

The log4j2 configuration file to be used in normal mode (start/restart/foreground). |

||

|

The log4j2 configuration file to be used in test mode. |

||

|

Location of the SGC properties file. |

||

|

Whether or not the watchdog is enabled. Disabling the watchdog may be required if the SGC is run under the control of another HA system. |

|

|

|

Enables additional script information. |

|

|

|

Prefers using IPv4 protocol. Set value to |

|

|

|

On NUMA architecture machines, this parameter allows selecting specific CPU and memory bindings for SGC. |

||

|

On non-NUMA architecture machines, this parameter may be used to set SGC affinity to specific CPUs. |

JMX Connector configuration variables
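The advice on choosing a JMX port can be checked mechanically. On Linux the ephemeral range is published as two numbers (lowest and highest port) in /proc/sys/net/ipv4/ip_local_port_range. port_is_ephemeral is a hypothetical helper that reads that file, or another file with the same format, and reports whether a candidate port falls inside the range.

```shell
#!/usr/bin/env bash
# Hypothetical helper: succeed (exit 0) if the port lies inside the kernel's
# ephemeral port range, i.e. it is a poor choice for the JMX port.
# Exit status 2 means the range file could not be read.
#   $1 port   $2 range file (default: /proc/sys/net/ipv4/ip_local_port_range)
port_is_ephemeral() {
  local port=$1 file=${2:-/proc/sys/net/ipv4/ip_local_port_range} low high
  read -r low high < "$file" || return 2
  [ "$port" -ge "$low" ] && [ "$port" -le "$high" ]
}
# Example: port_is_ephemeral 10111 && echo "10111 is in the ephemeral range"
```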

Configuring the Java Heap

The Java Virtual Machine’s MAX_HEAP_SIZE must be appropriately configured for the SGC. If insufficient heap is configured, then the result may be:

-

Frequent and/or prolonged garbage collections, which may have a negative impact on the SGC’s performance.

-

SGC restarts caused by the JVM throwing OutOfMemoryError.

The main factors affecting the selection of an appropriate value are:

-

The base SGC requires a certain amount of heap.

-

The size of the configuration MML.

-

The configured maximum number of concurrent TCAP transactions (sgc.tcap.maxTransactions).

MAX_HEAP_SIZE is no longer dependent on the number of connected TCAP peers or migrated prefixes.

Recommendations

| Factor | Recommendation |
|---|---|
| Base SGC | 1024MB. This value allows for an SGC configured for 1 million The amount required is platform and JVM dependent. An estimation of the base SGC requirements may be obtained by loading a minimal MML configuration into a test SGC, and then using the |
| Configuration MML size | Variable. Each As each configuration differs substantially, it is not possible to provide generic guidelines. An estimation of a configuration’s minimum requirements may be obtained by loading the full MML configuration into a test SGC, and then using the |
| Maximum concurrent TCAP transactions | 1 million transactions requires no additional heap allowance, as this is included in the base SGC figure above. 10 million transactions requires approximately 250MB additional heap. 100 million transactions requires approximately 2.5GB additional heap. The maximum number of concurrent TCAP transactions is configured in |

Installing SGC as a service

To install SGC as a service, perform the following operations as user root:

1 |

Copy the sgcd script to /etc/init.d:

# cp $SGC_HOME/bin/sgcd /etc/init.d

2 |

Grant execute permissions to /etc/init.d/sgcd:

# chmod a+x /etc/init.d/sgcd

3 |

Create the file

SGC=/opt/sgc/PC1-1/PC1/current/bin/sgc
SGCUSER=sgc

4 |

Activate the service using the standard Red Hat command:

# chkconfig --add sgcd

Network Architecture Planning

Ensure you are familiar with the OCSS7 architecture before going further. In particular, ensure you have read the following:

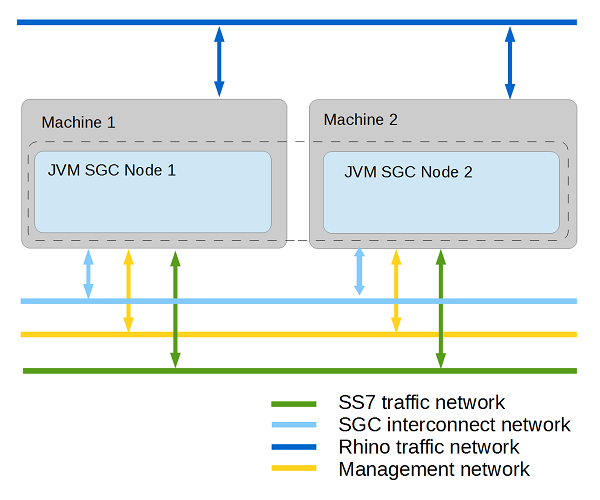

Network planning

When planning an OCSS7 deployment, Metaswitch recommends preparing IP subnets that logically separate different kinds of traffic:

| Subnet | Description |
|---|---|
| SS7 network | dedicated to incoming/outgoing SIGTRAN traffic; should provide access to the operator’s SS7 network |
| SGC interconnect network | internal SGC cluster network with failover support (provided by an interface bonding mechanism); used by Hazelcast and the communication switch |
| Rhino traffic network | used for traffic exchanged between SGC and Rhino nodes |
| Management network | dedicated to management tools and interfaces (JMX, HTTP) |

SGC Stack network communication overview

The SS7 SGC uses multiple logical communication channels that can be separated into two broad categories:

-

SGC directly managed connections — connections established directly by SGC subsystems, configured as part of the SGC cluster-managed configuration

-

Hazelcast managed connections — connections established by Hazelcast, configured as part of static SGC instance configuration.

SGC directly managed connections

The following table describes network connections managed directly by the SGC configuration.

| Protocol | Subsystem | Subnet | Defined by | Usage | |

|---|---|---|---|---|---|

|

TCP |

Rhino traffic network |

|

Used in the first phase of communication establishment between the TCAP Stack (CGIN RA) and the SGC cluster. The communication channel is established during startup of the TCAP Stack (CGIN RA activation), and closed after a single HTTP request / response. |

||

|

|

Used in the second phase of communication establishment between the TCAP Stack (CGIN RA) and the SGC cluster. The communication channel is established and kept open until either the SGC Node or the TCAP Stack (CGIN RA) is shut down (deactivated). This connection is used to exchange TCAP messages between the SGC Node and the TCAP Stack using a custom protocol. The level of expected traffic is directly related to the number of expected SCCP messages originating from and destined for the SSN represented by the connected TCAP Stack.

|

||||

|

SGC interconnect network |

|

Used by the communication switch (inter-node message transfer module) to exchange message traffic between nodes of the SGC cluster. The communication channel is established between nodes of the SGC cluster during startup, and kept open until the node is shut down. During startup, the node establishes connections to all other nodes that are already part of the SGC cluster. The level of expected traffic depends on the deployment model, and can vary anywhere between none and all traffic destined and originated by the SGC cluster.

|

|||

|

SCTP |

M3UA |

SS7 Network |

|

Used by SGC nodes to exchange M3UA traffic with Signalling Gateways and/or Application Servers. The communication channel lifecycle depends directly on the SGC cluster configuration; that is, the enabled attribute of the connection configuration object and the state of the remote system with which SGC is to communicate. The level of traffic should be assessed based on business requirements. |

|

|

JMX over TCP |

Configuration |

Management network |

Used for managing the SGC cluster. Established by the management client Command-Line Management Console, for the duration of the management session. The level of traffic is negligible. |

Hazelcast managed connections

Hazelcast uses a two-phase cluster-join procedure:

-

Discover other nodes that are part of the same cluster.

-

Establish one-to-one communication with each node found.

Depending on the configuration, the first step of the cluster-join procedure can be based either on UDP multicast or direct TCP connections. In the latter case, the Hazelcast configuration must contain the IP address of at least one other node in the cluster. Connections established in the second phase always use direct TCP connections established between all the nodes in the Hazelcast cluster.
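To give a rough sense of scale for the second phase, the following toy model enumerates the direct connections in a full mesh of SGC nodes. It is plain Java, not the Hazelcast API. It assumes one TCP connection per pair of nodes, so n nodes need n(n-1)/2 connections. The node names are illustrative.

```java
import java.util.ArrayList;
import java.util.List;

/**
 * Toy model (not the Hazelcast API) of the second cluster-join phase:
 * every pair of known nodes is connected directly.
 * Assumption: one TCP connection per pair of nodes.
 */
public class FullMeshModel {

    /** Returns every unordered pair of nodes, i.e. the direct connections of a full mesh. */
    static List<String[]> meshConnections(List<String> nodes) {
        List<String[]> pairs = new ArrayList<>();
        for (int i = 0; i < nodes.size(); i++) {
            for (int j = i + 1; j < nodes.size(); j++) {
                pairs.add(new String[] {nodes.get(i), nodes.get(j)});
            }
        }
        return pairs;
    }

    public static void main(String[] args) {
        List<String> nodes = List.of("sgc-1", "sgc-2", "sgc-3", "sgc-4"); // illustrative names
        List<String[]> pairs = meshConnections(nodes);
        for (String[] p : pairs) {
            System.out.println(p[0] + " <-> " + p[1]);
        }
        // n nodes need n * (n - 1) / 2 connections: 4 nodes -> 6
        System.out.println(pairs.size() + " connections");
    }
}
```

Adding one node to a cluster of n nodes therefore adds n new connections, all carried by the SGC interconnect network.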

Traffic exchanged over the SGC interconnect network by Hazelcast connections mainly relates to:

-

SGC runtime state changes

-

SGC configuration state changes

-

Hazelcast heartbeat messages.

During normal SGC cluster operation, the amount of traffic is negligible and consists mainly of messages distributing SGC statistics updates.

Inter-node message transfer

The communication switch (inter-node message transfer module) is responsible for transferring data traffic messages between nodes of the SGC cluster. After the initial handshake message exchange, the communication switch does not originate any network communication by itself. It is driven by requests of the TCAP or M3UA layers.

Usage of the communication switch involves additional message-processing overhead, consisting of:

-

CPU processing time to encode and later decode the message — this overhead is negligible

-

network latency to transfer the message between nodes of the SGC cluster — overhead depends on the type and layout of the physical network between communicating SGC nodes.

This overhead is unnecessary during normal SGC cluster operation, and can be avoided through careful deployment-model planning.

The following sections outline the two scenarios that involve the communication switch, Outgoing message inter-node transfer and Incoming message inter-node transfer, followed by tips for Avoiding communication switch overhead.

Outgoing message inter-node transfer

A message originated by the TCAP Stack (CGIN RA) is sent over the TCP-based data-transfer connection to an SGC node (node A). It is processed within that node up to the point where the bytes are to be written to the SCTP connection through which the required DPC is reachable. If that SCTP connection is established on a different SGC node (node B), the communication switch is used: the outgoing message is transferred from node A to node B, and node B then writes it to its locally established SCTP connection.
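The decision described above can be modelled in a few lines. This is a minimal sketch under stated assumptions: the class, the record and the map of DPC reachability per node are hypothetical and do not represent the SGC's internal API. When several remote nodes can reach the DPC, the sketch simply picks one, because the real choice is not described here. It requires Java 16 or later for records.

```java
import java.util.Map;
import java.util.Set;

/**
 * Hypothetical model of where an outgoing message is written: locally if this
 * node has an SCTP connection reaching the DPC, otherwise forwarded over the
 * communication switch to a node that has one.
 */
public class OutgoingRouting {

    /** Node that writes to SCTP, and whether the communication switch is involved. */
    record Route(String nodeWithSctp, boolean viaSwitch) {}

    /**
     * @param localNode  node that received the message from the TCAP stack (node A)
     * @param dpcToNodes for each DPC, the SGC nodes with an SCTP connection reaching it
     * @param dpc        destination point code of the message
     */
    static Route route(String localNode, Map<Integer, Set<String>> dpcToNodes, int dpc) {
        Set<String> nodes = dpcToNodes.getOrDefault(dpc, Set.of());
        if (nodes.isEmpty()) {
            throw new IllegalStateException("DPC " + dpc + " is not reachable from the cluster");
        }
        if (nodes.contains(localNode)) {
            return new Route(localNode, false);   // written to the local SCTP connection
        }
        // Any node that reaches the DPC; the SGC's actual choice is not modelled.
        String remote = nodes.iterator().next();
        return new Route(remote, true);           // node A -> switch -> node B -> SCTP
    }

    public static void main(String[] args) {
        Map<Integer, Set<String>> reach = Map.of(
                1001, Set.of("A", "B"),
                2002, Set.of("B"));
        System.out.println(route("A", reach, 1001)); // local SCTP connection, no switch
        System.out.println(route("A", reach, 2002)); // forwarded to node B via the switch
    }
}
```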

Incoming message inter-node transfer

A message received over an M3UA connection with a remote Signalling Gateway or other Application Server is processed within the SGC node where the connection is established (node A). If the processed message is a TCAP message addressed to an SSN available within the SGC cluster, the processing node is responsible for selecting a TCAP Stack (CGIN RA) corresponding to that SSN. The selection process gives preference to TCAP Stacks (CGIN RAs) that are directly connected to the SGC node processing the incoming message. If no suitable locally connected TCAP Stack (CGIN RA) is available, a TCAP Stack connected to another SGC node (node B) in the SGC cluster is selected. After selection, the incoming TCAP message is either sent directly to the TCAP Stack (if it is locally connected), or first transferred through the communication switch to the appropriate SGC node (from node A to node B) and then sent by the receiving node (node B) to the TCAP Stack.

| |

TCAP Stack (CGIN RA) selection

TCAP Stack selection is invoked for messages that start a new transaction ( TCAP Stack selection is described by the following algorithm:

|
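The local-first preference described for incoming messages can be sketched as follows. This is not the SGC's full selection algorithm. It models only the rule stated above: a stack connected to the processing node is preferred, and otherwise a stack on another node is used. Load balancing and other criteria are not modelled. The names and the SSN values are illustrative. It requires Java 16 or later.

```java
import java.util.List;
import java.util.Optional;

/**
 * Hypothetical sketch of local-first TCAP stack (CGIN RA) selection for an
 * incoming TCAP message addressed to a given SSN.
 */
public class TcapStackSelection {

    /** A connected TCAP stack: the SSN it serves and the SGC node it is connected to. */
    record TcapStack(String id, int ssn, String sgcNode) {}

    static Optional<TcapStack> select(String processingNode, int ssn, List<TcapStack> stacks) {
        Optional<TcapStack> local = stacks.stream()
                .filter(s -> s.ssn() == ssn && s.sgcNode().equals(processingNode))
                .findFirst();
        if (local.isPresent()) {
            return local;                      // delivered directly, no switch hop
        }
        return stacks.stream()                 // delivered via the communication switch
                .filter(s -> s.ssn() == ssn)
                .findFirst();
    }

    public static void main(String[] args) {
        List<TcapStack> stacks = List.of(
                new TcapStack("cgin-1", 146, "A"),
                new TcapStack("cgin-2", 146, "B"),
                new TcapStack("cgin-3", 147, "B"));
        System.out.println(select("A", 146, stacks)); // cgin-1, local to node A
        System.out.println(select("A", 147, stacks)); // cgin-3, connected to node B
        System.out.println(select("A", 8, stacks));   // empty: SSN not served
    }
}
```

The second call shows the case that the avoidance rules below are meant to eliminate: SSN 147 has no stack on node A, so every new transaction for it crosses the communication switch.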

Avoiding communication switch overhead

A review of the preceding communication-switch usage scenarios suggests a set of rules for deployment, to help avoid communication-switch overhead during normal SGC cluster operation.

| Scenario | Avoidance Rule | Configuration Recommendation |
|---|---|---|
| Incoming message inter-node transfer | If an SSN is available within the SGC cluster, at least one TCAP Stack serving that SSN must be connected to each SGC node in the cluster. | The number of TCAP Stacks (CGIN RAs) serving a particular SSN should be at least the number of SGC nodes in the cluster. |
| Outgoing message inter-node transfer | If the SGC Stack is to communicate with a remote PC (another node in the SS7 network), that PC must be reachable through an M3UA connection established locally on each node in the SGC cluster. | When configuring remote PC availability within the SGC cluster, make the PC reachable through at least one connection on each SGC node. |
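Both rules are "every SGC node must have it locally" checks, so a deployment plan can be validated mechanically. The following is a hypothetical planning helper, not part of the SGC. It assumes the plan is available as two maps: the SSNs served by TCAP Stacks on each node, and the remote PCs reachable through local M3UA connections on each node. It reports every place where traffic would have to cross the communication switch.

```java
import java.util.ArrayList;
import java.util.List;
import java.util.Map;
import java.util.Set;
import java.util.TreeSet;

/**
 * Hypothetical helper that checks a deployment plan against the two
 * communication-switch avoidance rules. Input maps are assumptions.
 */
public class SwitchOverheadCheck {

    static List<String> check(Set<String> nodes,
                              Map<String, Set<Integer>> ssnsByNode,
                              Map<String, Set<Integer>> remotePcsByNode) {
        List<String> problems = new ArrayList<>();
        Set<Integer> allSsns = union(ssnsByNode);      // SSNs available in the cluster
        Set<Integer> allPcs = union(remotePcsByNode);  // remote PCs the cluster talks to
        for (String node : new TreeSet<>(nodes)) {
            for (int ssn : allSsns) {
                if (!ssnsByNode.getOrDefault(node, Set.of()).contains(ssn)) {
                    problems.add("node " + node + " has no local TCAP stack for SSN " + ssn);
                }
            }
            for (int pc : allPcs) {
                if (!remotePcsByNode.getOrDefault(node, Set.of()).contains(pc)) {
                    problems.add("node " + node + " has no local M3UA connection to PC " + pc);
                }
            }
        }
        return problems;
    }

    private static Set<Integer> union(Map<String, Set<Integer>> byNode) {
        Set<Integer> all = new TreeSet<>();
        byNode.values().forEach(all::addAll);
        return all;
    }

    public static void main(String[] args) {
        Set<String> nodes = Set.of("A", "B");
        Map<String, Set<Integer>> ssns = Map.of("A", Set.of(146), "B", Set.of(146));
        Map<String, Set<Integer>> pcs = Map.of("A", Set.of(1001, 2002), "B", Set.of(1001));
        // Reports that node B cannot reach PC 2002 locally
        check(nodes, ssns, pcs).forEach(System.out::println);
    }
}
```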

SGC cluster membership and split-brain scenario

The SS7 SGC Stack is a distributed system, designed to run on multiple computers connected by an IP network. The set of connected computers running SGC is known as a cluster. The SS7 SGC Stack cluster is managed as a single system image. SGC Stack clustering uses an n-way, active-cluster architecture, where all the nodes are fully active (as opposed to an active-standby design, which employs a live but inactive node that takes over if needed).

SGC cluster membership state is determined by Hazelcast, based on the network reachability of nodes in the cluster. Nodes can become isolated from each other if a networking failure causes network segmentation. This carries the risk of a "split brain" scenario, where nodes on each side of the segmentation act independently, each assuming that the nodes in the other segment have failed. Avoiding a split-brain scenario depends on the availability of a redundant network connection. For this reason, network interface bonding MUST be employed to serve connections established by Hazelcast.

Usage of a communication switch subsystem within the SGC cluster depends on the cluster membership state, which is managed by Hazelcast. Network connectivity as seen by the communication switch subsystem MUST be consistent with the cluster membership state managed by Hazelcast. To fulfil this requirement, the communication switch subsystem MUST be configured to use the same redundant network connection as Hazelcast.

| |

Network connection redundancy delivery method

Neither Hazelcast nor the communication switch currently supports network interface failover. OS-level network interface bonding must therefore be used to provide a single logical network interface that delivers redundant network connectivity. |

| |

Network Path Redundancy

The entire network path between nodes in the cluster must be redundant (including routers and switches). |

Recommended physical deployment model

To take full advantage of the fault-tolerant and high-availability modes supported by the OCSS7 stack, Metaswitch recommends using at least two dedicated machines with multicore CPUs and two or more network interface cards.

Each SGC node should be deployed on its own dedicated machine. However, hardware resources can also be shared with Rhino Application Server nodes.

| |

The OCSS7 stack also supports less complex deployment modes that can still satisfy high-availability requirements. |

| |

To avoid single points of failure at the network and hardware levels, provide redundant connections for each kind of traffic. SCTP, which carries the SS7 traffic, itself provides a mechanism for IP multi-homing. For other kinds of traffic, an interface-bonding mechanism should be provided. Below is an example assignment of different kinds of traffic among the network interface cards of one physical machine. |

| | Network Interface Card 1 | Network Interface Card 2 |
|---|---|---|
| port 1 | SS7 IP addr 1 | SS7 IP addr 2 |
| port 2 | SGC Interconnect IP addr (bonded) | SGC Interconnect IP addr (bonded) |
| port 3 | Rhino IP addr | |
| port 4 | Management IP addr | |

| |

While not required, bonding Management and Rhino traffic connections can provide better reliability. |

Securing the SGC JMX management connection with SSL/TLS

Default configuration of the JMX management connection

The default JMX configuration allows unsecured JMX management connections from the local machine only. That is, by default the SGC SS7 stack listens for management connections on the local loopback interface. This allows any JMX management client running on the same machine as the SGC stack instance to connect to and manage that instance with no additional configuration.

Securing the JMX management connection with SSL/TLS

| |

SGC_HOME

SGC_HOME in the following instructions represents the path to the SGC Stack installation directory. |

SGC stack secure configuration

The SGC SS7 stack can be configured to secure JMX management connections using the SSL/TLS protocol. The default installation package provides a helper shell script (SGC_HOME/bin/sgckeygen) that generates:

-

SGC_HOME/config/sgc-server.keystore: a PKCS #12 repository of security certificates containing two entries, an SGC JMX server private key and a trust entry for the SGC JMX client certificate

-

SGC_HOME/config/sgc-client.keystore: a PKCS #12 repository of security certificates containing two entries, an SGC JMX client private key and a trust entry for the SGC JMX server certificate

-

SGC_HOME/config/netssl.properties: a Java properties file containing the configuration the SGC Stack uses during start-up (properties in this file point to the generated sgc-server.keystore)

-

SGC_HOME/config/sgc-trust.cert: the SGC JMX server X.509 certificate in DER format, which can be imported into any pre-existing KeyStore to establish a trust relationship.
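The last file is intended for import into an existing KeyStore. With a standard JDK this is usually done with `keytool -importcert`. The sketch below shows the equivalent using the `java.security` APIs. The target store path (`client-truststore.p12`), its password (`changeit`) and the alias (`sgc-jmx-server`) are placeholders, not values produced by `sgckeygen`.

```java
import java.io.FileInputStream;
import java.io.FileOutputStream;
import java.io.IOException;
import java.io.InputStream;
import java.io.OutputStream;
import java.security.GeneralSecurityException;
import java.security.KeyStore;
import java.security.cert.Certificate;
import java.security.cert.CertificateFactory;

/**
 * Sketch: add the DER-encoded SGC JMX server certificate (sgc-trust.cert) as a
 * trusted entry in an existing PKCS #12 KeyStore. Paths, alias and password
 * are placeholders.
 */
public class TrustSgcCert {

    static void addTrustedCert(KeyStore ks, String alias, InputStream der)
            throws GeneralSecurityException {
        // The X.509 CertificateFactory accepts binary DER (and Base64) input.
        Certificate cert = CertificateFactory.getInstance("X.509").generateCertificate(der);
        ks.setCertificateEntry(alias, cert);
    }

    public static void main(String[] args) throws IOException, GeneralSecurityException {
        String sgcHome = System.getenv().getOrDefault("SGC_HOME", ".");
        String storePath = args.length > 0 ? args[0] : "client-truststore.p12"; // placeholder
        char[] password = "changeit".toCharArray();                            // placeholder

        KeyStore ks = KeyStore.getInstance("PKCS12");
        try (InputStream in = new FileInputStream(storePath)) {
            ks.load(in, password);                     // the pre-existing KeyStore
        }
        try (InputStream der = new FileInputStream(sgcHome + "/config/sgc-trust.cert")) {
            addTrustedCert(ks, "sgc-jmx-server", der); // placeholder alias
        }
        try (OutputStream out = new FileOutputStream(storePath)) {
            ks.store(out, password);                   // persist the new trust entry
        }
    }
}
```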

To enable a secure JMX management connection:

-

Generate appropriate server and client private keys and certificates: run the SGC_HOME/bin/sgckeygen script.

-

Change the SGC stack configuration to enable the secure connection: edit the configuration file SGC_HOME/config/sgcenv, changing the JMX_SECURE variable value to true.

| |

By default, the SGC stack is configured to require client authentication with a trusted client certificate. The simplest approach is to use the generated SGC_HOME/config/sgc-client.keystore as part of the JMX management client configuration. |
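For a custom Java JMX client (rather than the Command-Line Management Console), the generated `sgc-client.keystore` can be supplied through the standard JSSE system properties. The same file serves as both key store and trust store, because it holds the client key and the trust entry for the server certificate. This is a sketch under assumptions. The keystore password, the service URL (host `127.0.0.1`, port `10111`, path `/jmxrmi`) and whether the SGC's RMI registry is itself SSL-protected are all placeholders. Check the actual values in SGC_HOME/config/sgcenv.

```java
import java.io.IOException;
import java.util.HashMap;
import java.util.Map;
import javax.management.MBeanServerConnection;
import javax.management.remote.JMXConnector;
import javax.management.remote.JMXConnectorFactory;
import javax.management.remote.JMXServiceURL;
import javax.rmi.ssl.SslRMIClientSocketFactory;

/**
 * Sketch of a JMX client authenticating with sgc-client.keystore.
 * Password, URL and the SSL-registry flag are assumptions.
 */
public class SecureSgcJmxClient {

    /** Points the JSSE defaults at one PKCS #12 file for both key and trust material. */
    static void useClientKeystore(String keystorePath, String password) {
        System.setProperty("javax.net.ssl.keyStore", keystorePath);
        System.setProperty("javax.net.ssl.keyStoreType", "PKCS12");
        System.setProperty("javax.net.ssl.keyStorePassword", password);
        System.setProperty("javax.net.ssl.trustStore", keystorePath);
        System.setProperty("javax.net.ssl.trustStoreType", "PKCS12");
        System.setProperty("javax.net.ssl.trustStorePassword", password);
    }

    /** Connector environment; the socket factory entry only matters if the RMI registry uses SSL. */
    static Map<String, Object> connectorEnv(boolean sslRegistry) {
        Map<String, Object> env = new HashMap<>();
        if (sslRegistry) {
            env.put("com.sun.jndi.rmi.factory.socket", new SslRMIClientSocketFactory());
        }
        return env;
    }

    public static void main(String[] args) throws IOException {
        String sgcHome = System.getenv().getOrDefault("SGC_HOME", ".");
        // Must run before any SSL use, so the default SSLContext picks these up.
        useClientKeystore(sgcHome + "/config/sgc-client.keystore", "changeit"); // placeholder
        JMXServiceURL url = new JMXServiceURL(
                "service:jmx:rmi:///jndi/rmi://127.0.0.1:10111/jmxrmi");      // placeholder
        try (JMXConnector connector = JMXConnectorFactory.connect(url, connectorEnv(true))) {
            MBeanServerConnection mbsc = connector.getMBeanServerConnection();
            System.out.println("Connected; MBean count: " + mbsc.getMBeanCount());
        }
    }
}
```

If the connection fails during the TLS handshake, the most likely causes are a wrong keystore password or a keystore that does not match the server's generated keys.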