This book contains performance benchmarks using OpenCloud’s OCSS7.

It contains these sections:

- Benchmark Scenarios: descriptions of each of the benchmark scenarios, and notes on the benchmark methodology used
- Hardware and Software: details of the hardware, software, and configuration used for the benchmarks
- Benchmark Results: summaries of the benchmarks and links to detailed metrics

Other documentation for OCSS7 can be found on the OCSS7 product page.

Benchmark Scenarios

This page describes the scenarios and methodology used when running the benchmarks.

Benchmarks are run using two scenarios. Each scenario is run twice: once with OCSS7 acting as the initiator, and once with OCSS7 acting as the responder.

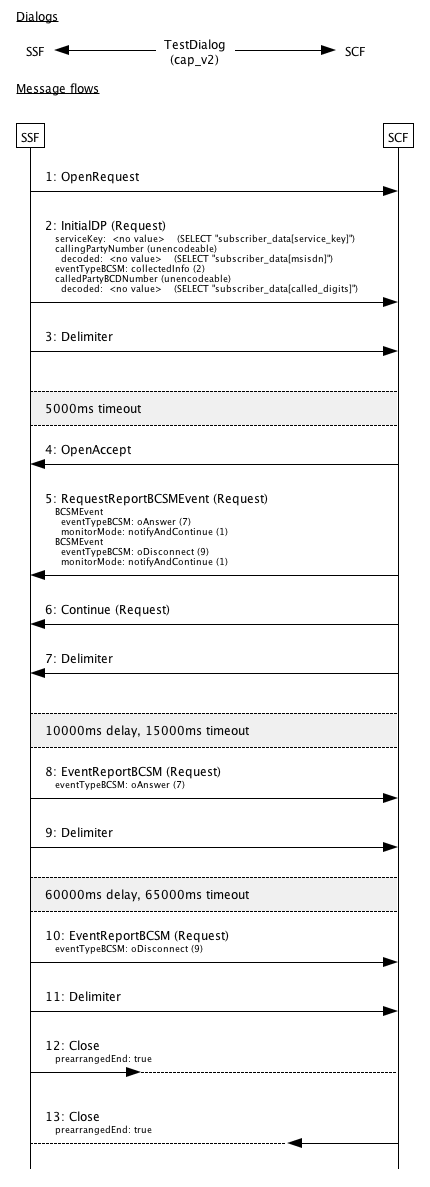

CAP call monitoring scenario

These scenarios consist of dialogs following this message flow:

- The initiator sends a TC-BEGIN initiating a CAP v2 dialog, containing a CAP InitialDP operation invoke
- The responder sends a TC-CONTINUE containing two operation invokes: CAP RequestReportBCSM(oAnswer, oDisconnect) and CAP Continue
- The initiator delays for 10 seconds
- The initiator sends a TC-CONTINUE containing a CAP EventReportBCSM(oAnswer) operation invoke
- The initiator delays for 60 seconds
- The initiator sends a TC-CONTINUE containing a CAP EventReportBCSM(oDisconnect) operation invoke
- The dialog is ended by prearranged end on both the initiator and the responder
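The flow above can be tallied to relate calls per second to TCAP messages per second and to the number of dialogs open at any moment. This is a minimal sketch under stated assumptions: every dialog follows the flow exactly, only the four TCAP messages listed are counted (prearranged end sends no TC-END), and network and processing latency are ignored. `CAP_FLOW` and the helper names are hypothetical.

```python
# Hypothetical model of the CAP call monitoring flow:
# (sender, TCAP primitive, scripted delay in seconds before this message is sent)
CAP_FLOW = [
    ("initiator", "TC-BEGIN", 0),      # CAP InitialDP
    ("responder", "TC-CONTINUE", 0),   # RequestReportBCSM + Continue
    ("initiator", "TC-CONTINUE", 10),  # EventReportBCSM(oAnswer)
    ("initiator", "TC-CONTINUE", 60),  # EventReportBCSM(oDisconnect)
]
# Prearranged end: no TC-END is exchanged, so the dialog ends after the last message.


def messages_per_dialog(flow):
    return len(flow)


def dialog_hold_time_s(flow):
    # Sum of the scripted delays only; latency is assumed to be negligible.
    return sum(delay for _, _, delay in flow)


def concurrent_dialogs(calls_per_second, hold_time_s):
    # Little's law: mean dialogs in progress = arrival rate * mean time in system.
    return calls_per_second * hold_time_s


rate = 6000
print(rate * messages_per_dialog(CAP_FLOW))                     # 24000
print(dialog_hold_time_s(CAP_FLOW))                             # 70
print(concurrent_dialogs(rate, dialog_hold_time_s(CAP_FLOW)))   # 420000
```

The 24000 messages per second figure agrees with the rate quoted for this scenario in the results; the concurrency figure is an arithmetic estimate from the scripted delays, not a measured value.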

OCSS7 as the Initiator

In this configuration, the test system generates load and initiates the dialog. The external support system is the responder.

Response time is measured on the test system by the scenario simulator infrastructure, from immediately before submission of the TC-BEGIN to the TCAP stack, to immediately after receipt of the TC-CONTINUE containing the CAP Continue invoke from the TCAP stack.

OCSS7 as the Responder

In this configuration, the support system generates load and initiates the dialog. The test system responds to the dialog.

Response time is measured on the support system by the scenario simulator infrastructure, from immediately before submission of the TC-BEGIN to the TCAP stack, to immediately after receipt of the TC-CONTINUE containing the CAP Continue invoke from the TCAP stack.
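In both configurations a response time is one timestamp taken just before the TC-BEGIN is handed to the TCAP stack and another taken just after the awaited response comes back from it. The sketch below shows that bookkeeping with a monotonic clock, keyed by a dialog identifier. `ResponseTimer` and its method names are hypothetical stand-ins for whatever hooks the scenario simulator infrastructure actually provides.

```python
import time


class ResponseTimer:
    """Hypothetical per-dialog response-time bookkeeping (not the simulator's API)."""

    def __init__(self):
        self._started = {}    # dialog id -> start timestamp in nanoseconds
        self.samples_ms = []  # completed response times in milliseconds

    def before_submit_begin(self, dialog_id):
        # Taken immediately before the TC-BEGIN is submitted to the TCAP stack.
        self._started[dialog_id] = time.monotonic_ns()

    def after_receive_response(self, dialog_id):
        # Taken immediately after the awaited response (the TC-CONTINUE carrying
        # CAP Continue, or the TC-END in the MAP scenario) is received from the stack.
        start = self._started.pop(dialog_id, None)
        if start is None:
            return None  # unknown dialog, or its response was already timed
        elapsed_ms = (time.monotonic_ns() - start) / 1e6
        self.samples_ms.append(elapsed_ms)
        return elapsed_ms


timer = ResponseTimer()
timer.before_submit_begin(1)
time.sleep(0.005)  # stand-in for the round trip through both TCAP stacks
print(timer.after_receive_response(1))
```

A monotonic clock is used because wall-clock time can be stepped (for example by NTP), which would corrupt individual samples.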

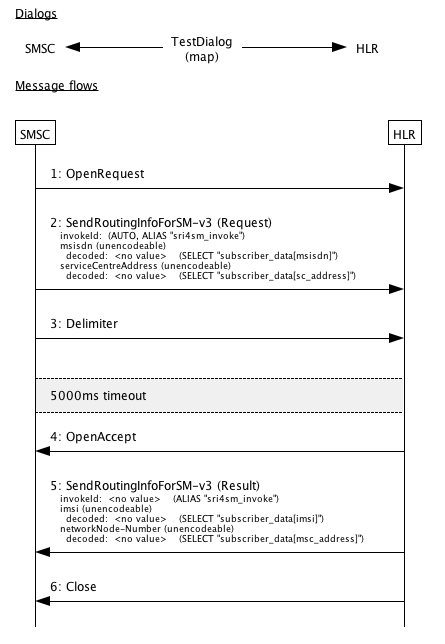

MAP SRI for SM scenario

These scenarios consist of dialogs following this message flow:

- The initiator sends a TC-BEGIN initiating a MAP dialog, containing a SEND-ROUTING-INFO-FOR-SM invoke
- The responder immediately responds with a TC-END containing a SEND-ROUTING-INFO-FOR-SM result
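Because each MAP dialog is a single request and response, the message rate is twice the dialog rate, split evenly between the two directions. A minimal check, assuming every dialog completes exactly as described; `MAP_FLOW` and `message_rates` are hypothetical names:

```python
# Hypothetical model of the MAP SRI for SM flow: (sender, TCAP primitive)
MAP_FLOW = [
    ("initiator", "TC-BEGIN"),  # SEND-ROUTING-INFO-FOR-SM invoke
    ("responder", "TC-END"),    # SEND-ROUTING-INFO-FOR-SM result
]


def message_rates(flow, calls_per_second):
    """Messages per second sent by each side, plus the total, at a given dialog rate."""
    rates = {}
    for sender, _ in flow:
        rates[sender] = rates.get(sender, 0) + calls_per_second
    rates["total"] = calls_per_second * len(flow)
    return rates


print(message_rates(MAP_FLOW, 20000))
# {'initiator': 20000, 'responder': 20000, 'total': 40000}
```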

OCSS7 as the Initiator

In this configuration, the test system generates load and initiates the dialog. The external support system is the responder.

Response time is measured on the test system by the scenario simulator infrastructure, from immediately before submission of the TC-BEGIN to the TCAP stack, to immediately after receipt of the TC-END from the TCAP stack.

OCSS7 as the Responder

In this configuration, the support system generates load and initiates the dialog. The test system responds to the dialog, sending a TC-END.

Response time is measured on the support system by the scenario simulator infrastructure, from immediately before submission of the TC-BEGIN to the TCAP stack, to immediately after receipt of the TC-END from the TCAP stack.

Test setup

Each test run consists of a 10-minute ramp-up period, during which load is increased from zero to the target rate; then a 30-minute measurement period at peak load; then a 2-minute drain period, during which no new dialogs are initiated.
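The three phases can be written as a target-rate function of elapsed time. This sketch assumes a linear ramp, which the text does not specify (it says only that load is increased from zero to the target rate); `target_rate` and the phase constants are hypothetical names.

```python
RAMP_S = 10 * 60     # ramp-up period
MEASURE_S = 30 * 60  # measurement period at peak load
DRAIN_S = 2 * 60     # drain period: no new dialogs


def target_rate(t_s, peak_cps):
    """New-dialog rate (calls per second) at elapsed time t_s; linear ramp assumed."""
    if t_s < 0:
        return 0.0
    if t_s < RAMP_S:
        return peak_cps * t_s / RAMP_S
    if t_s < RAMP_S + MEASURE_S:
        return float(peak_cps)
    return 0.0  # drain period and beyond: existing dialogs finish, none are started


print(target_rate(300, 6000))   # 3000.0 (halfway through ramp-up)
print(target_rate(1200, 6000))  # 6000.0 (measurement period)
print(target_rate(2500, 6000))  # 0.0 (drain period)
```

A full run therefore lasts RAMP_S + MEASURE_S + DRAIN_S = 2520 seconds (42 minutes).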

The ramp-up period is included because the Oracle JVM provides a Just In Time (JIT) compiler. The JIT compiler compiles Java bytecode to machine code, and recompiles code on the fly to take advantage of optimizations that are not otherwise possible. This dynamic compilation and optimization process takes some time to complete, and during its early stages the node cannot process full load. Similarly, JVM garbage collection does not reach full efficiency until several major garbage collection cycles have completed.

Only latency measurements during the measurement period are used; latency measurements during the ramp-up period are ignored.

Load is not stopped between the end of the ramp-up period and the start of the measurement period.
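Because only the measurement period counts, latency samples can be filtered by time. This sketch assumes each sample is a pair of (seconds since the run started, latency in milliseconds) and that a dialog belongs to the period in which its TC-BEGIN was sent; the text does not say which timestamp decides membership. The function name is hypothetical.

```python
RAMP_S = 10 * 60     # ramp-up period, excluded
MEASURE_S = 30 * 60  # measurement period, included


def measured_latencies(samples):
    """Keep latencies of dialogs started inside the measurement period."""
    start, end = RAMP_S, RAMP_S + MEASURE_S
    return [latency_ms for t_s, latency_ms in samples if start <= t_s < end]


samples = [(120.0, 9.1), (601.5, 4.2), (1500.0, 3.8), (2410.0, 5.0)]
print(measured_latencies(samples))  # [4.2, 3.8]
```

Since load is not stopped at the boundary, samples just after the 600-second mark come from a node that has already been running at peak rate, which is why no gap is needed between the two periods.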

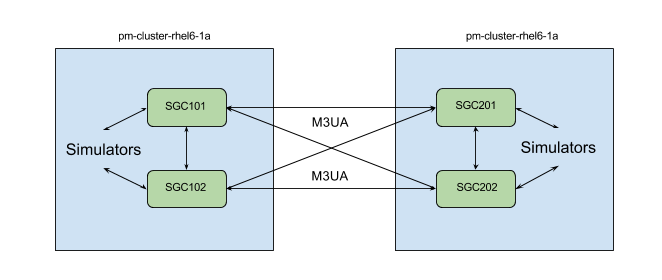

Hardware and Software

This page describes the hardware and software used when running the benchmarks.

Hardware

| Server Name | Specification | OS |
|---|---|---|
| pm-cluster-rhel6-1a | HP DL380 G7, 2x Intel Xeon X5660 2.8 GHz, 12 cores, 36 GB memory | RHEL 6.8 |
| pm-cluster-rhel6-1b | HP DL380 G7, 2x Intel Xeon X5660 2.8 GHz, 12 cores, 36 GB memory | RHEL 6.8 |

Software

| Vendor | Software | Version |
|---|---|---|
| Oracle | JDK (64 bit) | 1.8.0_60 |
| OpenCloud | | 2.0.0.0 |
| OpenCloud | | 2.3.0.8 |
| OpenCloud | | 1.5.4.0 |

Configuration

| Parameter | Value |
|---|---|
| Heap size | 2048M |
| GC Type | CMS |
| com.cts.ss7.commsp.server.sendQueueCapacity | 1024 |
| sgc.ind.pool_size | 20000 |
| sgc.req.pool_size | 20000 |
| sgc.worker.threads | 32 |
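On JDK 1.8 the heap size and GC type rows correspond to standard HotSpot options. The sketch below builds them as a command-line list. Setting the initial heap equal to the maximum is an assumption (the table gives only one heap size), and the OCSS7-specific properties are deliberately left out because this page does not say how they are supplied. `jvm_options` is a hypothetical helper.

```python
def jvm_options(heap_mb=2048, fixed_heap=True):
    """HotSpot (JDK 8) options for the heap size and GC type in the table."""
    opts = [f"-Xmx{heap_mb}m"]  # maximum heap: 2048M
    if fixed_heap:
        # Assumption: initial heap equal to maximum, so the heap is not resized mid-run.
        opts.append(f"-Xms{heap_mb}m")
    opts.append("-XX:+UseConcMarkSweepGC")  # CMS collector
    return opts


print(" ".join(jvm_options()))
# -Xmx2048m -Xms2048m -XX:+UseConcMarkSweepGC
```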

Kernel parameters (sysctl):

| Parameter | Value |
|---|---|
| net.sctp.rto_min | 80 ms |
| net.sctp.sack_timeout | 70 ms |
| net.core.rmem_max | 2000000 bytes |
| net.core.wmem_max | 2000000 bytes |
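Each kernel parameter maps to a file under /proc/sys, with the dots in its name replaced by slashes. A small read-only check that a host matches the benchmark values might look like the sketch below. The net.sctp entries exist only while the SCTP kernel module is loaded, and the proc root is a parameter so the function can be exercised without a real host. `sysctl_mismatches` is a hypothetical helper.

```python
from pathlib import Path

BENCHMARK_SYSCTLS = {
    "net.sctp.rto_min": "80",
    "net.sctp.sack_timeout": "70",
    "net.core.rmem_max": "2000000",
    "net.core.wmem_max": "2000000",
}


def sysctl_mismatches(expected, proc_sys=Path("/proc/sys")):
    """Return {name: actual value} for each parameter that differs or is unreadable."""
    mismatches = {}
    for name, want in expected.items():
        path = proc_sys / name.replace(".", "/")
        try:
            actual = path.read_text().strip()
        except OSError:
            actual = None  # e.g. SCTP module not loaded, or not running on Linux
        if actual != want:
            mismatches[name] = actual
    return mismatches


if __name__ == "__main__":
    print(sysctl_mismatches(BENCHMARK_SYSCTLS) or "all parameters match")
```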

Benchmark Results

A summary of the benchmark results follows. Detailed results for each benchmark are given in the sections below.

| Benchmark | Rate | CPU Usage |
|---|---|---|
| CAP Call Monitoring scenario | 6000 calls per second (24000 messages per second) | |
| MAP SRI for SM scenario | 20000 calls per second (40000 messages per second) | |

CAP Call Monitoring scenario

This page summarises the results for benchmarks executed with the CAP Call Monitoring scenario. Detailed metrics follow the summary tables.

The benchmarks are run in two configurations:

- OCSS7 as the Initiator
- OCSS7 as the Responder

The CAP call monitoring scenario description has more information on how these configurations are defined.

Identical call rates were used for both the Initiator and Responder benchmarks.

Benchmarks

Rate: 6000 calls per second (24000 messages per second)

MAP SRI for SM scenario

This page summarises the results for benchmarks executed with the MAP SRI for SM scenario. Detailed metrics follow the summary tables.

The benchmarks are run in two configurations:

- OCSS7 as the Initiator
- OCSS7 as the Responder

The MAP SRI for SM scenario description has more information on how these configurations are defined.

Identical call rates were used for both the Initiator and Responder benchmarks.

Benchmarks

Rate: 20000 calls per second (40000 messages per second)

Maximum theoretical CPU usage is 1200%.
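The 1200% ceiling is consistent with CPU usage being summed across 12 CPUs, each fully busy CPU counting as 100%, matching the 12 cores of the test hosts. To express a reading as a fraction of total machine capacity, divide by that ceiling. A minimal helper, assuming readings use those same summed-percent units; `cpu_fraction` is a hypothetical name and the value below is illustrative, not a benchmark result:

```python
def cpu_fraction(cpu_percent, cpus=12):
    """Convert summed per-CPU usage (100% per busy CPU) into a fraction of total capacity."""
    ceiling = cpus * 100.0  # 1200% for 12 CPUs
    if not 0 <= cpu_percent <= ceiling:
        raise ValueError(f"usage must be between 0 and {ceiling}%")
    return cpu_percent / ceiling


print(cpu_fraction(300))  # 0.25
```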