With each major release, OpenCloud provides a standard set of benchmark data, designed to be genuine and reliable. We measure platform performance in realistic telecommunications scenarios, so that solutions architects can gauge the likely performance of the applications they develop when hosted on OpenCloud Rhino.

Scenarios, configuration, and results

For details, please see:

- descriptions of the real-world test scenarios
- benchmark environment and configuration for CGIN and Rhino
- benchmark results.

PROPRIETARY AND CONFIDENTIAL

Rhino benchmark information on these pages is proprietary and confidential. Do not reproduce or present in any fashion without express written consent from OpenCloud. (See also the Rhino documentation Copyright and Disclaimers page.)

Benchmark Scenarios

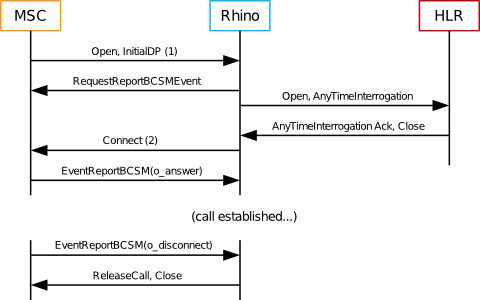

The CGIN benchmarks test CAPv3 within a VPN (Virtual Private Network) IN application.

About the test scenario

We run two benchmarks.

-

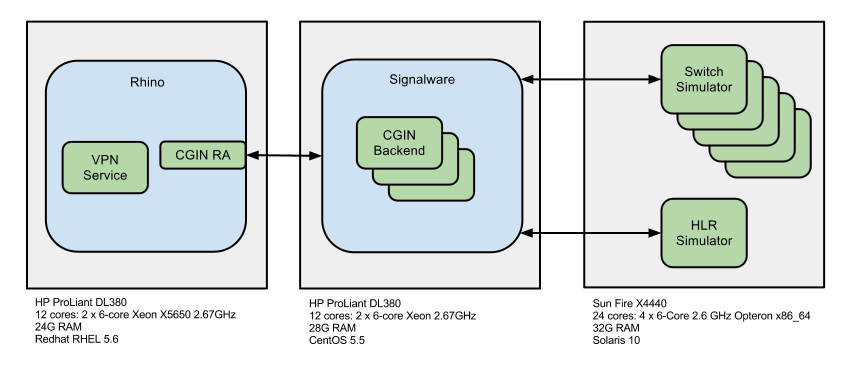

The single-node benchmark runs the VPN application using a single instance of CGIN at 5,000 calls per second.

-

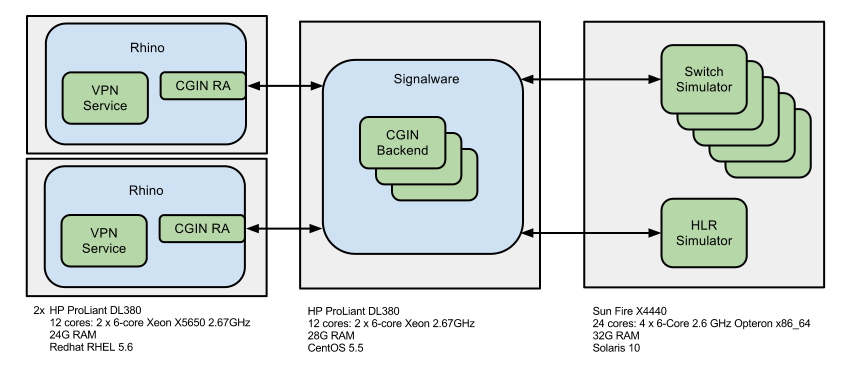

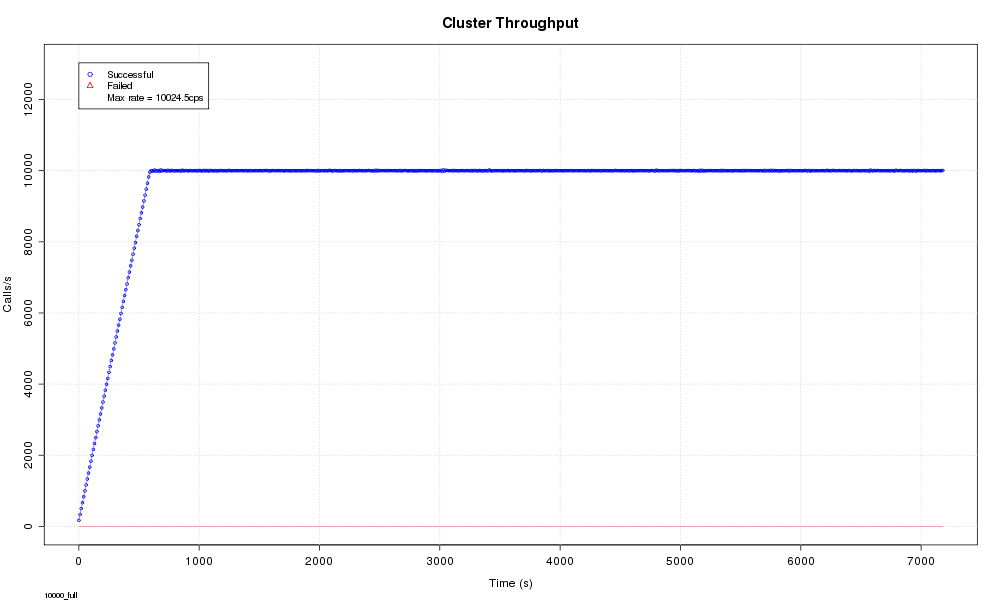

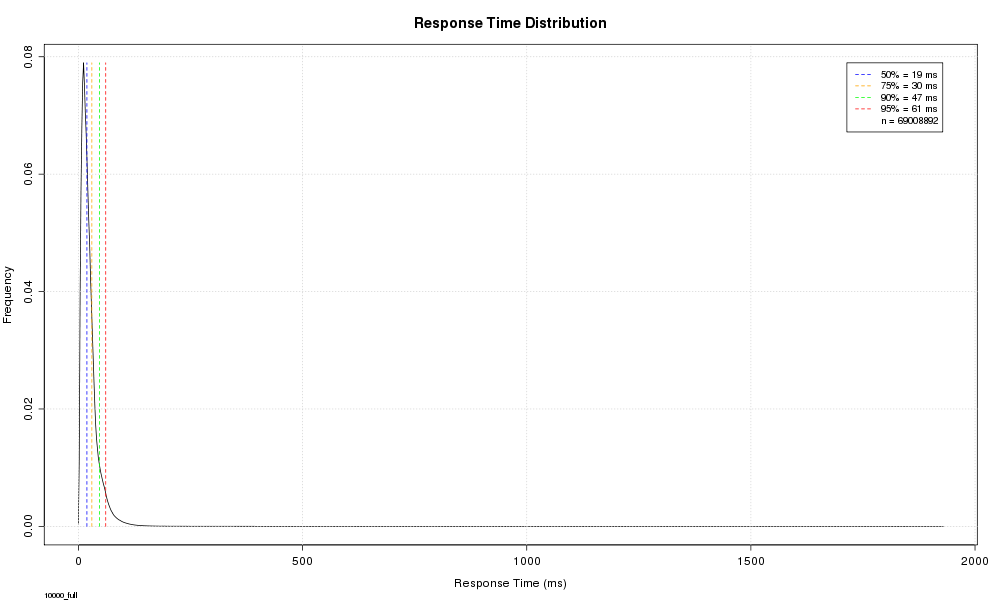

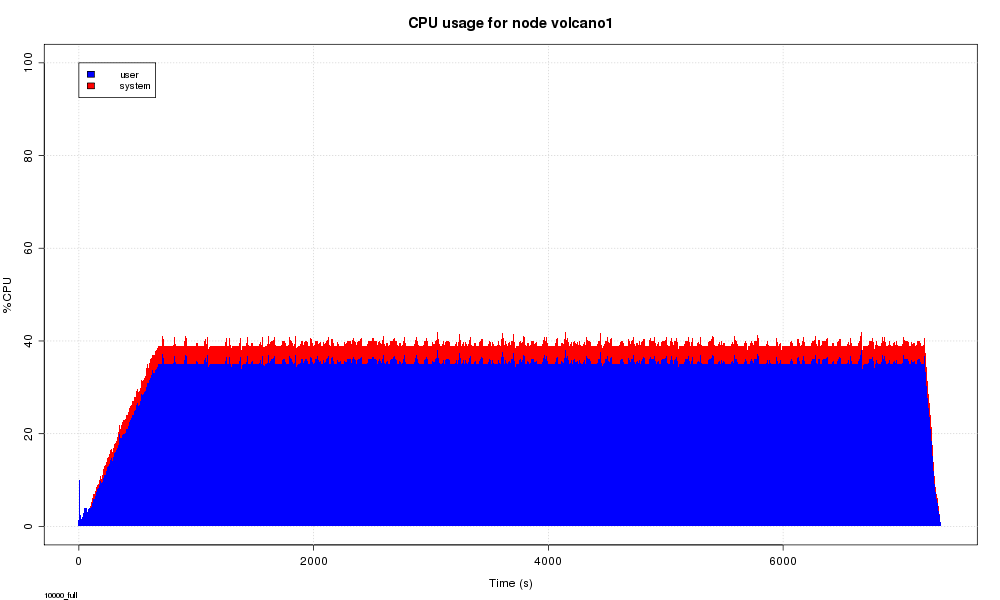

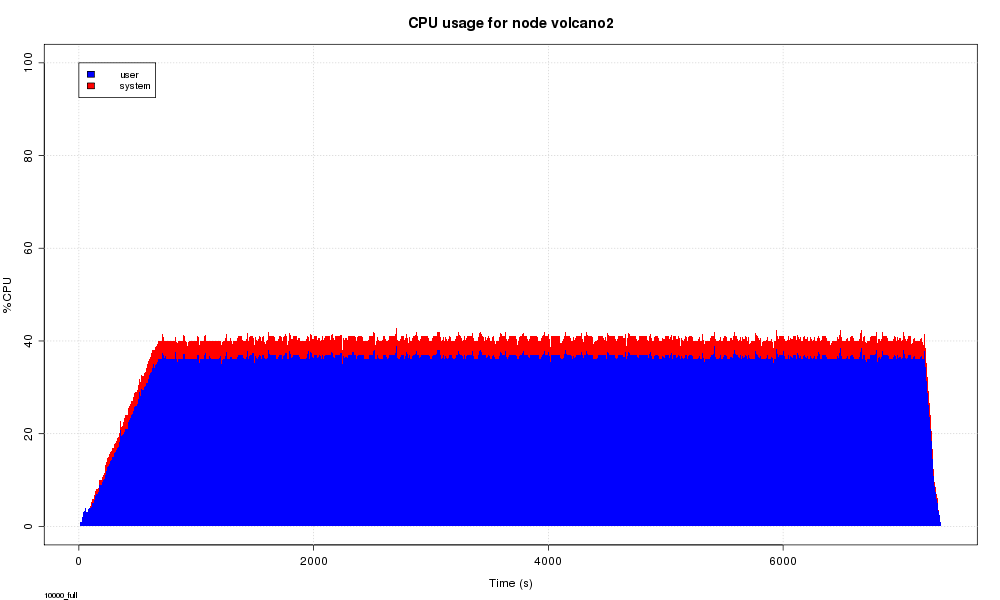

The two-node benchmark runs the VPN application using two instances of CGIN clustered over two servers, at 10,000 calls per second. Each node handles 5,000 calls.

The two-node configuration benchmarks scalability within a cluster. Differences in latency from the single-node test may be due to chatter and shared resources between nodes, which occur with a clustered install even when using high availability.

For each test, we:

-

used a call duration of 60s

-

generated load for two hours, with a ten minute ramp up period at the start

-

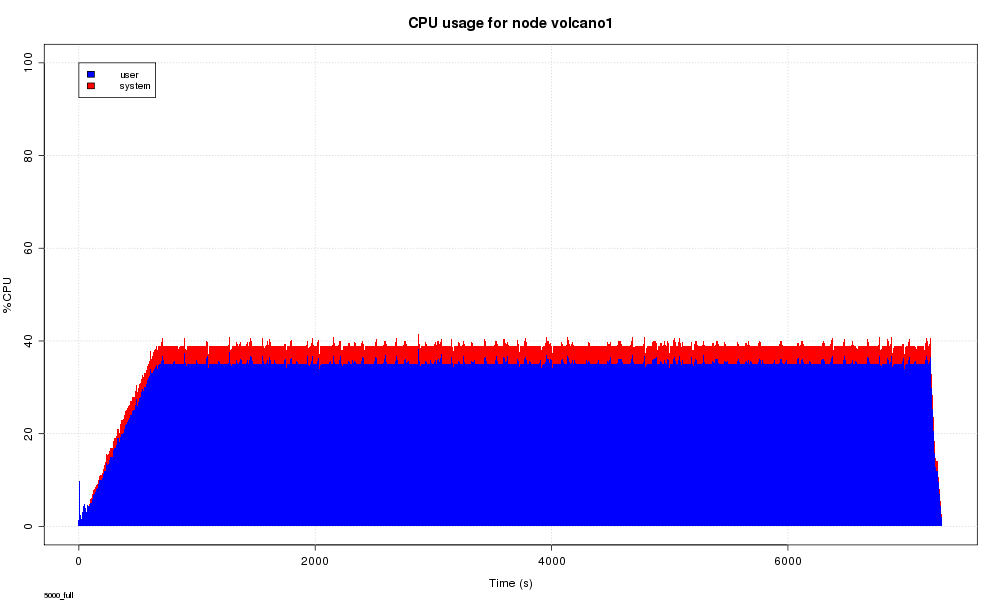

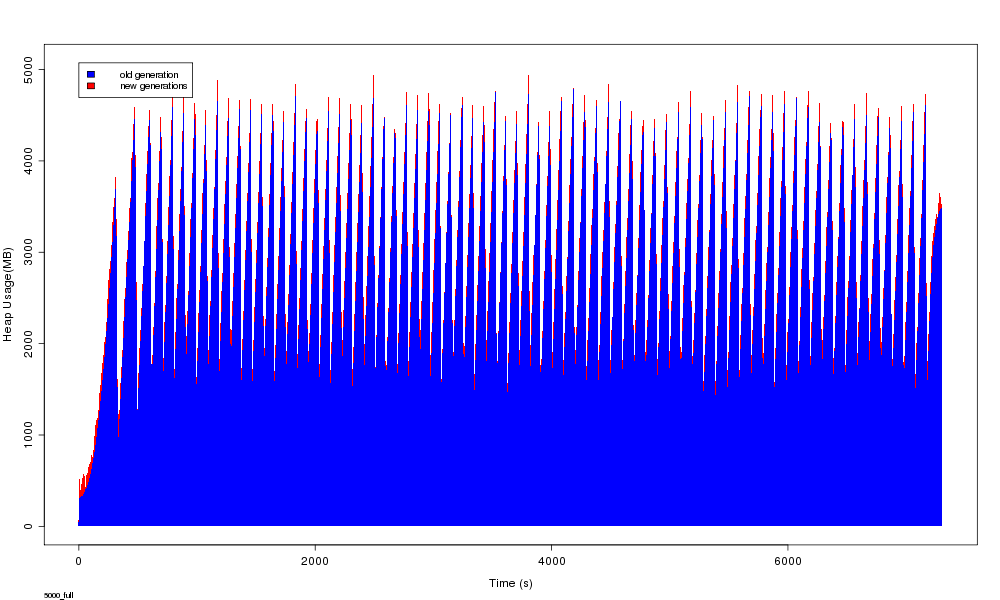

observed the CPU utilisation of the VPN application, the call setup latency, and the heap usage.
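The load figures above imply a steady-state number of calls in progress, which is useful context when reading the heap usage results. By Little's law, calls in progress = arrival rate × call duration. The following is a minimal sketch of that arithmetic, assuming a constant arrival rate after ramp-up and that every call lasts exactly 60 seconds; the function name `concurrent_calls` is ours and purely illustrative.

```shell
#!/bin/sh
# Little's law: calls in progress = arrival rate (calls/s) x call duration (s).
# Assumes a constant arrival rate and a fixed call duration.
concurrent_calls() {
    rate_cps=$1
    duration_s=$2
    echo $(( rate_cps * duration_s ))
}

# Per-node figures from the scenario: 5,000 calls per second, 60-second calls.
per_node=$(concurrent_calls 5000 60)
echo "Calls in progress per node: $per_node"
echo "Calls in progress across two nodes: $(( per_node * 2 ))"
```

Under these assumptions each node holds about 300,000 calls in progress once the ramp-up period has finished.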

The VPN Application

The VPN application provides number-translation and call-barring services for groups of subscribers. It supports CAPv3 for call-control and MAPv3 for location information.

| If the A party… | then VPN responds with: |
|---|---|
| …has called a B party in their own VPN using short code or public number | |
| …and B party are not in the same VPN | |
| …dials a short code that doesn’t exist | |

Benchmark Environment and Configuration

Below are the hardware, software, and Rhino configuration used for the CGIN benchmarks.

Software

The CGIN benchmark tests used the following software.

| Software | Version |
|---|---|
| Java | JDK 1.6.0_18 |
| Rhino | Rhino 2.3.0.0 |
| CGIN | 1.5.0.8 |
| CGIN VPN | 1.5.0.8 |
| CGIN Signalware Back End | Ulticom Signalware SP6.V |

Rhino configuration

For the CGIN benchmark tests, we made the following changes to the Rhino 2.3 default configuration.

| Parameter | Value | Note |
|---|---|---|
| TCAP stack | Signalware 9.0SP6V | |
| JVM Architecture | 64bit | Enables larger heaps |
| Heap size | 8192M | |
| New Gen size | 256M | Increased from default of 128M to achieve higher throughput without increasing latency |
| Staging queue size | 5000 | Increased from default of 3000 to allow up to 1s worth of burst traffic |
| Staging threads | 60 | Increased from default of 30 for reduced latency at high throughput |
| CGIN RA | 10 | Increased from default of 4 for reduced latency at high throughput |
| CGIN RA | 5000 | Increased from default of 250 to allow load spikes without processing falling back to incoming thread |
| CGIN RA | 5000 | Increased from default of 256 as required to match tcap-fibers.queue-size |
| Local Memory DB size | 200M | Increased from default of 100M to allow more in-flight calls |

You can also review the Rhino configuration files we used during the test runs.
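The JVM-related rows of the table correspond to variables in `config_variables`, which the `read-config-variables` script reproduced later on this page expands into JVM flags. As a rough illustration, the sketch below applies the same flag templates to the benchmark values. It covers only the architecture, heap, and new-generation flags, not the full option set, and the variable `JVM_FLAGS` is our own name for the result.

```shell
#!/bin/sh
# Values taken from the benchmark's config_variables file.
JVM_ARCH=64
HEAP_SIZE=8192m
NEW_SIZE=256m
MAX_NEW_SIZE=256m

# Same flag templates as read-config-variables: a fixed-size heap
# (-Xms equal to -Xmx) and an explicitly sized new generation.
# JVM_FLAGS is an illustrative name, not a variable used by Rhino.
JVM_FLAGS="-d${JVM_ARCH} -Xms${HEAP_SIZE} -Xmx${HEAP_SIZE} -XX:NewSize=${NEW_SIZE} -XX:MaxNewSize=${MAX_NEW_SIZE}"
echo "$JVM_FLAGS"
```

Setting the initial and maximum sizes to the same value, as the script does, means the heap and new generation are not resized while the benchmark runs.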

Rhino Configuration

The following configuration files were in place during the CGIN benchmark tests:

config_variables

#Tue Jun 26 09:28:32 NZST 2012

SAVANNA_MCAST_END=224.0.253.8

RHINO_SERVER_STORE_PASS=changeit

SNAPSHOT_BASEPORT=42000

MAX_NEW_SIZE=256m

RHINO_SSL_PORT=1203

RHINO_CLIENT_STORE_PASS=changeit

RHINO_PASSWORD=password

JAVA_HOME=/opt/hudson/tools/jdk1.6.0_18

LOCALIPS="127.0.0.1 [0\:0\:0\:0\:0\:0\:0\:1%1] [fe80\:0\:0\:0\:225\:22ff\:fe61\:f9ad%2] 192.168.0.97 192.168.122.1"

MANAGEMENT_DATABASE_HOST=volcano1

MANAGEMENT_DATABASE_PASSWORD=rhino

JVM_ARCH=64

RHINO_CLIENT_KEY_PASS=changeit

SAVANNA_MCAST_START=224.0.253.1

RHINO_BASE=/home/pburrowes/remagent_253/rhino

SAVANNA_CLUSTER_ADDR=224.0.253.1

RHINO_SERVER_KEY_PASS=changeit

RHINO_WATCHDOG_THREADS_THRESHOLD=50

NEW_SIZE=256m

MANAGEMENT_DATABASE_NAME=cgin_perf

RMI_MBEAN_REGISTRY_PORT=1199

MANAGEMENT_DATABASE_USER=postgres

HEAP_SIZE=8192m

RHINO_WATCHDOG_DUMPTHREADS=/home/pburrowes/remagent_253/rhino/node-102/dumpthreads.sh

JMX_SERVICE_PORT=1202

RHINO_USERNAME=admin

RHINO_CLUSTERING=/home/pburrowes/remagent_253/rhino/node-102/clustering.sh

NODE_ID=102

RHINO_WATCHDOG_STUCK_INTERVAL=45000

SAVANNA_CLUSTER_ID=253

RHINO_WORK_DIR=/home/pburrowes/remagent_253/rhino/node-102/work

RHINO_HOME=/home/pburrowes/remagent_253/rhino/node-102

FILE_URL=file\:

MANAGEMENT_DATABASE_PORT=5432

rhino-config.xml

<?xml version="1.0" encoding="UTF-8"?>

<!DOCTYPE rhino-config PUBLIC "-//Open Cloud Ltd.//DTD Rhino Config 2.3.1//EN" "http://www.opencloud.com/dtd/rhino-config_2_3_1.dtd">

<rhino-config>

<file-locations>

<temp-dir>${RHINO_WORK_DIR}/tmp</temp-dir>

<deployment-dir save="False">${RHINO_WORK_DIR}/deployments</deployment-dir>

<state-dir>${RHINO_WORK_DIR}/state</state-dir>

<per-machine-mlet-conf>file:${RHINO_CONFIG_DIR}/permachine-mlet.conf</per-machine-mlet-conf>

<per-node-mlet-conf>file:${RHINO_CONFIG_DIR}/pernode-mlet.conf</per-node-mlet-conf>

<savanna-base-url>file:${RHINO_CONFIG_DIR}/savanna/cluster.properties</savanna-base-url>

<security-policy-file>${RHINO_CONFIG_DIR}/rhino.policy</security-policy-file>

</file-locations>

<cluster-group>rhino-cluster</cluster-group>

<admin-group>rhino-admin</admin-group>

<!-- State exchange configuration. This may be different on each node if desired. -->

<state-exchange>

<!-- TCP port range to accept state-exchange connections on. Ports are tried in order until one is successfully bound, so this range can safely be the same on all nodes (even when there are multiple nodes per physical machine). -->

<min-port>6000</min-port>

<max-port>6099</max-port>

<!-- Maximum bandwidth per node for state exchange during initial cluster boot. This defaults to 8MB/s to avoid completely saturating a 100Mbit connection. If you are using 1000Mbit, consider increasing this. -->

<boot-rate-limit>8000000</boot-rate-limit>

<!-- Maximum bandwidth per node for state exchange when the cluster is active. This defaults to 4MB/s to avoid interfering with the extracting node's normal operation, but can be increased.-->

<active-rate-limit>4000000</active-rate-limit>

<!-- Optional address to bind to. If omitted, determined automatically by Savanna -->

<!-- <override-address>1.2.3.4</override-address> -->

</state-exchange>

<activity-handler>

<group-name>rhino-ah</group-name>

<message-id>10000</message-id>

<resync-rate>100000</resync-rate>

</activity-handler>

<persistence-resources>

<replicated-sbbs>DomainedMemoryDatabase</replicated-sbbs>

<non-replicated-sbbs>LocalMemoryDatabase</non-replicated-sbbs>

<profiles>ProfileDatabase</profiles>

<resource-adaptors>DomainedMemoryDatabase</resource-adaptors>

<default-shutdown-flush-timeout>60000</default-shutdown-flush-timeout>

</persistence-resources>

<!-- Example replication domain configuration. This example splits the cluster into several 2-node domain pairs for the purposes of service state replication. This example does not cover replication domaining for writeable profiles. -->

<!-- <domain name="domain-1" nodes="101,102"> <memdb-resource>DomainedMemoryDatabase</memdb-resource> <ah-resource>rhino-ah</ah-resource> </domain> <domain name="domain-2" nodes="201,202"> <memdb-resource>DomainedMemoryDatabase</memdb-resource> <ah-resource>rhino-ah</ah-resource> </domain> <domain name="domain-3" nodes="301,302"> <memdb-resource>DomainedMemoryDatabase</memdb-resource> <ah-resource>rhino-ah</ah-resource> </domain> <domain name="domain-4" nodes="401,402"> <memdb-resource>DomainedMemoryDatabase</memdb-resource> <ah-resource>rhino-ah</ah-resource> </domain> -->

<rhino-monitoring>

<group-name>rhino-monitoring</group-name>

<message-id>19999</message-id>

<sample-generations>6</sample-generations>

<sample-generation-rollover>5000</sample-generation-rollover>

<stats-session-timer>20000</stats-session-timer>

<heartbeat-period>5000</heartbeat-period>

<direct-min-port>17400</direct-min-port>

<direct-max-port>17699</direct-max-port>

</rhino-monitoring>

<query-is-alive>

<scan-period>180000</scan-period>

<threshold>150000</threshold>

<maximum-batch-size>128</maximum-batch-size>

</query-is-alive>

<timer-facility>

<timer-resolution>250</timer-resolution>

<timer-common-duration>32000</timer-common-duration>

<timer-max-duration>180000</timer-max-duration>

<timer-default-timeout>1000</timer-default-timeout>

</timer-facility>

<default-component-log4j-include>

<sbb-log4j-include included="False"/>

<ra-log4j-include included="True"/>

<library-log4j-include included="True"/>

</default-component-log4j-include>

<ocbb-resources>

<!-- Note: the names of the following resources must not be changed as the core system depends on their presence: LocalMemoryDatabase ReplicatedMemoryDatabase ManagementDatabase Additionally, the SIP example services depend on the presence of the JDBCResource resource. Other resource names are referenced only by other elements in this configuration file and so may be modified as needed. -->

<memdb-local>

<jndi-name>LocalMemoryDatabase</jndi-name>

<committed-size>200m</committed-size>

</memdb-local>

<memdb>

<jndi-name>ReplicatedMemoryDatabase</jndi-name>

<message-id>10002</message-id>

<group-name>rhino-db</group-name>

<committed-size>100M</committed-size>

<resync-rate>100000</resync-rate>

</memdb>

<memdb>

<jndi-name>DomainedMemoryDatabase</jndi-name>

<message-id>10005</message-id>

<group-name>rhino-db</group-name>

<committed-size>100M</committed-size>

<resync-rate>100000</resync-rate>

</memdb>

<!-- Begin PostgreSQL configuration. To use Oracle Database rather than PostgreSQL, comment out this section and uncomment the Oracle Database section below. -->

<memdb>

<jndi-name>ManagementDatabase</jndi-name>

<message-id>10003</message-id>

<group-name>rhino-management</group-name>

<committed-size>150M</committed-size>

<resync-rate>100000</resync-rate>

<persistence>

<persistence-instance>

<datasource-class>org.postgresql.ds.PGSimpleDataSource</datasource-class>

<dbid>rhino_management</dbid>

<parameter>

<param-name>serverName</param-name>

<param-type>java.lang.String</param-type>

<param-value>${MANAGEMENT_DATABASE_HOST}</param-value>

</parameter>

<parameter>

<param-name>portNumber</param-name>

<param-type>java.lang.Integer</param-type>

<param-value>${MANAGEMENT_DATABASE_PORT}</param-value>

</parameter>

<parameter>

<param-name>databaseName</param-name>

<param-type>java.lang.String</param-type>

<param-value>${MANAGEMENT_DATABASE_NAME}</param-value>

</parameter>

<parameter>

<param-name>user</param-name>

<param-type>java.lang.String</param-type>

<param-value>${MANAGEMENT_DATABASE_USER}</param-value>

</parameter>

<parameter>

<param-name>password</param-name>

<param-type>java.lang.String</param-type>

<param-value>${MANAGEMENT_DATABASE_PASSWORD}</param-value>

</parameter>

<parameter>

<param-name>loginTimeout</param-name>

<param-type>java.lang.Integer</param-type>

<param-value>30</param-value>

</parameter>

<parameter>

<param-name>prepareThreshold</param-name>

<param-type>java.lang.Integer</param-type>

<param-value>1</param-value>

</parameter>

</persistence-instance>

</persistence>

</memdb>

<memdb>

<jndi-name>ProfileDatabase</jndi-name>

<message-id>10004</message-id>

<group-name>rhino-db</group-name>

<committed-size>100M</committed-size>

<resync-rate>100000</resync-rate>

<persistence>

<persistence-instance>

<datasource-class>org.postgresql.ds.PGSimpleDataSource</datasource-class>

<dbid>rhino_profiles</dbid>

<parameter>

<param-name>serverName</param-name>

<param-type>java.lang.String</param-type>

<param-value>${MANAGEMENT_DATABASE_HOST}</param-value>

</parameter>

<parameter>

<param-name>portNumber</param-name>

<param-type>java.lang.Integer</param-type>

<param-value>${MANAGEMENT_DATABASE_PORT}</param-value>

</parameter>

<parameter>

<param-name>databaseName</param-name>

<param-type>java.lang.String</param-type>

<param-value>${MANAGEMENT_DATABASE_NAME}</param-value>

</parameter>

<parameter>

<param-name>user</param-name>

<param-type>java.lang.String</param-type>

<param-value>${MANAGEMENT_DATABASE_USER}</param-value>

</parameter>

<parameter>

<param-name>password</param-name>

<param-type>java.lang.String</param-type>

<param-value>${MANAGEMENT_DATABASE_PASSWORD}</param-value>

</parameter>

<parameter>

<param-name>loginTimeout</param-name>

<param-type>java.lang.Integer</param-type>

<param-value>30</param-value>

</parameter>

<parameter>

<param-name>prepareThreshold</param-name>

<param-type>java.lang.Integer</param-type>

<param-value>1</param-value>

</parameter>

</persistence-instance>

</persistence>

</memdb>

<jdbc>

<jndi-name>JDBCResource</jndi-name>

<datasource-class>org.postgresql.ds.PGSimpleDataSource</datasource-class>

<parameter>

<param-name>serverName</param-name>

<param-type>java.lang.String</param-type>

<param-value>${MANAGEMENT_DATABASE_HOST}</param-value>

</parameter>

<parameter>

<param-name>portNumber</param-name>

<param-type>java.lang.Integer</param-type>

<param-value>${MANAGEMENT_DATABASE_PORT}</param-value>

</parameter>

<parameter>

<param-name>databaseName</param-name>

<param-type>java.lang.String</param-type>

<param-value>${MANAGEMENT_DATABASE_NAME}</param-value>

</parameter>

<parameter>

<param-name>user</param-name>

<param-type>java.lang.String</param-type>

<param-value>${MANAGEMENT_DATABASE_USER}</param-value>

</parameter>

<parameter>

<param-name>password</param-name>

<param-type>java.lang.String</param-type>

<param-value>${MANAGEMENT_DATABASE_PASSWORD}</param-value>

</parameter>

<parameter>

<param-name>loginTimeout</param-name>

<param-type>java.lang.Integer</param-type>

<param-value>30</param-value>

</parameter>

<parameter>

<param-name>prepareThreshold</param-name>

<param-type>java.lang.Integer</param-type>

<param-value>5</param-value>

</parameter>

<connection-pool>

<max-connections>15</max-connections>

<max-pool-size>15</max-pool-size>

<max-idle-time>600</max-idle-time>

<idle-check-interval>30</idle-check-interval>

<connection-pool-timeout>5000</connection-pool-timeout>

</connection-pool>

</jdbc>

<!-- End of PostgreSQL configuration. -->

<!-- Begin Oracle Database configuration. To use Oracle Database rather than PostgreSQL, comment out the PostgreSQL section above and uncomment the section below. -->

<!-- <memdb> <jndi-name>ManagementDatabase</jndi-name> <message-id>10003</message-id> <group-name>rhino-management</group-name> <committed-size>150M</committed-size> <resync-rate>100000</resync-rate> <persistence> <persistence-instance> <datasource-class>oracle.jdbc.pool.OracleDataSource</datasource-class> <dbid>rhino_management</dbid> <parameter> <param-name>URL</param-name> <param-type>java.lang.String</param-type> <param-value>jdbc:oracle:thin:@${MANAGEMENT_DATABASE_HOST}:${MANAGEMENT_DATABASE_PORT}:${MANAGEMENT_DATABASE_NAME}</param-value> </parameter> <parameter> <param-name>user</param-name> <param-type>java.lang.String</param-type> <param-value>${MANAGEMENT_DATABASE_USER}</param-value> </parameter> <parameter> <param-name>password</param-name> <param-type>java.lang.String</param-type> <param-value>${MANAGEMENT_DATABASE_PASSWORD}</param-value> </parameter> <parameter> <param-name>loginTimeout</param-name> <param-type>java.lang.Integer</param-type> <param-value>30</param-value> </parameter> </persistence-instance> </persistence> </memdb> <memdb> <jndi-name>ProfileDatabase</jndi-name> <message-id>10004</message-id> <group-name>rhino-db</group-name> <committed-size>100M</committed-size> <resync-rate>100000</resync-rate> <persistence> <persistence-instance> <datasource-class>oracle.jdbc.pool.OracleDataSource</datasource-class> <dbid>rhino_profiles</dbid> <parameter> <param-name>URL</param-name> <param-type>java.lang.String</param-type> <param-value>jdbc:oracle:thin:@${MANAGEMENT_DATABASE_HOST}:${MANAGEMENT_DATABASE_PORT}:${MANAGEMENT_DATABASE_NAME}</param-value> </parameter> <parameter> <param-name>user</param-name> <param-type>java.lang.String</param-type> <param-value>${MANAGEMENT_DATABASE_USER}</param-value> </parameter> <parameter> <param-name>password</param-name> <param-type>java.lang.String</param-type> <param-value>${MANAGEMENT_DATABASE_PASSWORD}</param-value> </parameter> <parameter> <param-name>loginTimeout</param-name> 
<param-type>java.lang.Integer</param-type> <param-value>30</param-value> </parameter> </persistence-instance> </persistence> </memdb> <jdbc> <jndi-name>JDBCResource</jndi-name> <datasource-class>oracle.jdbc.pool.OracleDataSource</datasource-class> <parameter> <param-name>URL</param-name> <param-type>java.lang.String</param-type> <param-value>jdbc:oracle:thin:@${MANAGEMENT_DATABASE_HOST}:${MANAGEMENT_DATABASE_PORT}:${MANAGEMENT_DATABASE_NAME}</param-value> </parameter> <parameter> <param-name>user</param-name> <param-type>java.lang.String</param-type> <param-value>${MANAGEMENT_DATABASE_USER}</param-value> </parameter> <parameter> <param-name>password</param-name> <param-type>java.lang.String</param-type> <param-value>${MANAGEMENT_DATABASE_PASSWORD}</param-value> </parameter> <parameter> <param-name>loginTimeout</param-name> <param-type>java.lang.Integer</param-type> <param-value>30</param-value> </parameter> <connection-pool> <max-connections>15</max-connections> <max-pool-size>15</max-pool-size> <max-idle-time>600</max-idle-time> <idle-check-interval>30</idle-check-interval> <connection-pool-timeout>5000</connection-pool-timeout> </connection-pool> </jdbc> -->

<!-- End of Oracle Database configuration. -->

</ocbb-resources>

<ejb-resources>

</ejb-resources>

</rhino-config>

read-config-variables

# -*- sh -*-

# Expected and optional variables:

# RHINO_HOME: required - the base directory of the Rhino installation to load configuration variables from

# POLICY_FILE: optional - the unqualified name of the security policy file to use

# OVERRIDE_HEAP_SIZE: optional - the heap size to use

# OVERRIDE_MAX_NEW_SIZE: optional - the max new size to use

# OVERRIDE_NEW_SIZE: optional - the new size to use

if [ -z "$RHINO_HOME" ]; then

echo "RHINO_HOME variable not set" >&2

exit 1

fi

unset CLASSPATH OPTIONS

if [ -z "$VARS" ]; then

VARS=$RHINO_HOME/config/config_variables

fi

if [ ! -f "$VARS" ]; then

echo "$VARS does not exist; check your installation." >&2

exit 1

fi

. $VARS

. $RHINO_BASE/rhino-common

if [ "${OVERRIDE_HEAP_SIZE}" ]; then

HEAP_SIZE=${OVERRIDE_HEAP_SIZE}

fi

if [ "${OVERRIDE_MAX_NEW_SIZE}" ]; then

MAX_NEW_SIZE=${OVERRIDE_MAX_NEW_SIZE}

fi

if [ "${OVERRIDE_NEW_SIZE}" ]; then

NEW_SIZE=${OVERRIDE_NEW_SIZE}

fi

if [ "$VERBOSE" = "1" ]; then

echo "Using RHINO_BASE: ${RHINO_BASE:?not set}"

echo "Using RHINO_HOME: ${RHINO_HOME:?not set}"

echo "Using RHINO_WORK_DIR: ${RHINO_WORK_DIR:?not set}"

echo "Using VARS: ${VARS:?not set}"

echo "Using JAVA_HOME: ${JAVA_HOME:?JAVA_HOME not set}"

echo "Using MANAGEMENT_DATABASE_HOST: ${MANAGEMENT_DATABASE_HOST:?not set}"

echo "Using MANAGEMENT_DATABASE_PORT: ${MANAGEMENT_DATABASE_PORT:?not set}"

echo "Using MANAGEMENT_DATABASE_USER: ${MANAGEMENT_DATABASE_USER:?not set}"

echo "Using MANAGEMENT_DATABASE_NAME: ${MANAGEMENT_DATABASE_NAME:?not set}"

echo "Using HEAP_SIZE: ${HEAP_SIZE:?not set}"

echo "Using MAX_NEW_SIZE: ${MAX_NEW_SIZE:?not set}"

echo "Using NEW_SIZE: ${NEW_SIZE:?not set}"

echo "Using RMI_MBEAN_REGISTRY_PORT: ${RMI_MBEAN_REGISTRY_PORT:?not set}"

fi

if [ -z "${MANAGEMENT_DATABASE_PASSWORD}" ]; then

echo "MANAGEMENT_DATABASE_PASSWORD not set" >&2

exit 1

fi

# Get a working directory for information generated by the script

SCRIPT=`basename $0`

SCRIPT_WORK_DIR=$RHINO_WORK_DIR/$SCRIPT

# Set classpath

LIB=$RHINO_BASE/lib

CLASSPATH="${CLASSPATH:+${CLASSPATH}:}$LIB/RhinoBoot.jar"

RUNTIME_CLASSPATH="$LIB/postgresql.jar:$LIB/ojdbc6.jar"

# Load platform hooks if they are available.

if [ -f "$LIB/platform-hooks.jar" ]; then

CLASSPATH="$CLASSPATH:$LIB/platform-hooks.jar"

fi

if [ "$VERBOSE" = "1" ]; then

echo "Using CLASSPATH: ${CLASSPATH:?not set}"

fi

JVM_VERSION=`check_java_version "${JAVA_HOME}/bin/java"`

LD_LIBRARY_PATH_UNCHANGED=$LD_LIBRARY_PATH

unset OSOPTIONS

if [ "$JVM_ARCH" != "64" -a "$JVM_ARCH" != "32" ]; then

echo "Please specify either 32 or 64 for JVM_ARCH in the ${RHINO_HOME}/config/config_variables file"

exit 1

fi

osname="`uname -s`"

htname="`uname -m`"

case "$osname,$htname" in

Linux,i[3456]86) OS_TYPE="linux" ;;

Linux,x86_64) OS_TYPE="linux" ;;

SunOS,i86pc) OS_TYPE="solaris_i386" ;;

SunOS,sun4*) OS_TYPE="solaris" ;;

Darwin,i386) OS_TYPE="darwin_i386"

JVM_ARCH_OPTION=""

export OSOPTIONS="-Djava.library.path=${RHINO_BASE}/lib/${OS_TYPE}";;

*) echo "Unsupported Architecture $osname"

exit 1;;

esac

if [ "`uname -s`" != "Darwin" ]; then

case "$JVM_ARCH-$JVM_VERSION" in

32-*1.4.*) JVM_ARCH_OPTION="" ;; # 1.4.x does not understand -d32

*) JVM_ARCH_OPTION="-d$JVM_ARCH" ;;

esac

fi

if [ "`uname -s`" != "Darwin" ]; then

LD_LIBRARY_PATH="$LD_LIBRARY_PATH:$RHINO_BASE/lib/$OS_TYPE/$JVM_ARCH-bit"

fi

if [ "`uname -s`" = "HP-UX" ]; then

export SHLIB_PATH

if [ "$VERBOSE" = "1" ]; then

echo "Using SHLIB_PATH: ${SHLIB_PATH:?not set}"

fi

else

export LD_LIBRARY_PATH

if [ "$VERBOSE" = "1" ]; then

echo "Using LD_LIBRARY_PATH: ${LD_LIBRARY_PATH:?not set}"

fi

fi

# Improved OutOfMemoryError handling.

# This will force the Rhino JVM to immediately terminate when it

# encounters an OOME. Only available on 1.6+ JVMs.

OOME_OPTION="-Doome.kill=unsupported"

get_java_version "${JAVA_HOME}/bin/java"

if [ "$java_version_major" -eq "1" ]; then

if [ "$java_version_minor" -gt "5" ]; then

OOME_OPTION=-XX:OnOutOfMemoryError="$RHINO_HOME/stop-rhino.sh --terminate"

fi

fi

# VM garbage collection settings - these are some reasonable defaults

# to minimise GC pause times and keep latency low. Adjust to suit your

# hardware platform.

VERBOSEGC="-verbose:gc -XX:+PrintGCDateStamps -XX:+PrintGCDetails"

#INCREMENTALCMSOPTIONS="\

# -XX:+CMSIncrementalMode \

# -XX:+CMSIncrementalPacing \

# -XX:CMSIncrementalDutyCycleMin=10 \

# -XX:CMSIncrementalDutyCycle=10"

CMSOPTIONS="\

-XX:+UseConcMarkSweepGC \

-XX:CMSInitiatingOccupancyFraction=60 \

${INCREMENTALCMSOPTIONS}"

# When there are frequent JMX connections made and released these

# options should be enabled. Note. The CMSPermGenSweepingEnabled option

# is not needed for Java6.

CMSOPTIONS="$CMSOPTIONS -XX:+CMSClassUnloadingEnabled"

#CMSOPTIONS="$CMSOPTIONS -XX:+CMSPermGenSweepingEnabled"

GCOPTIONS="\

-XX:+UseParNewGC \

-XX:MaxNewSize=${MAX_NEW_SIZE} -XX:NewSize=${NEW_SIZE} \

-XX:MaxPermSize=192m -XX:PermSize=192m \

-Xms${HEAP_SIZE} -Xmx${HEAP_SIZE} \

-XX:SurvivorRatio=128 \

-XX:MaxTenuringThreshold=0 \

-Dsun.rmi.dgc.server.gcInterval=0x7FFFFFFFFFFFFFFE \

-Dsun.rmi.dgc.client.gcInterval=0x7FFFFFFFFFFFFFFE \

-XX:+UseTLAB \

-XX:+DisableExplicitGC \

${VERBOSEGC} \

${CMSOPTIONS}"

# This file is written out by Rhino to indicate that the node should not

# restart automatically.

RHINO_HALT_FILE="${RHINO_WORK_DIR}/halt_file"

# Rhino ActivityHandler GC options

AHGC_OPTIONS="-Drhino.ah.gclog=True"

AHGC_OPTIONS="$AHGC_OPTIONS -Drhino.ah.gcchurn=5000"

# Set options

OPTIONS="$OPTIONS $AHGC_OPTIONS"

OPTIONS="$OPTIONS -Drhino.ah.failover.checksum=True"

OPTIONS="$OPTIONS -Djavax.net.ssl.keyStore=${RHINO_BASE}/rhino-server.keystore -Djavax.net.ssl.keyStorePassword=${RHINO_SERVER_STORE_PASS}"

OPTIONS="$OPTIONS -Djavax.net.ssl.trustStore=${RHINO_BASE}/rhino-server.keystore -Djavax.net.ssl.trustStorePassword=${RHINO_SERVER_KEY_PASS}"

OPTIONS="$OPTIONS -Drhino.runtime.classpath=${RUNTIME_CLASSPATH}"

OPTIONS="$OPTIONS -Drhino.watchdog.dumpthreads=${RHINO_WATCHDOG_DUMPTHREADS}"

OPTIONS="$OPTIONS -Drhino.dir.base=${RHINO_BASE} -Drhino.dir.home=${RHINO_HOME} -Drhino.dir.work=${RHINO_WORK_DIR}"

OPTIONS="$OPTIONS -Drhino.haltfile=${RHINO_HALT_FILE}"

OPTIONS="$OPTIONS -Drhino.snapshot.baseport=${SNAPSHOT_BASEPORT}"

OPTIONS="$OPTIONS -Dstaging.live_threads_fraction=${RHINO_WATCHDOG_THREADS_THRESHOLD}"

OPTIONS="$OPTIONS -Dstaging.stuck_interval=${RHINO_WATCHDOG_STUCK_INTERVAL}"

# Options used in conjunction with make-primary.sh logic.

OPTIONS="$OPTIONS -Drhino.primary.makeprimary=${RHINO_WORK_DIR}/make.primary"

OPTIONS="$OPTIONS -Drhino.primary.createnode=${RHINO_WORK_DIR}/make.primary.firstboot"

OPTIONS="$OPTIONS -Drhino.primary.waiting=${RHINO_WORK_DIR}/make.primary.waiting"

# SNMP4J logging integration

OPTIONS="$OPTIONS -Dsnmp4j.LogFactory=com.opencloud.rhino.snmp.SNMP4JLogFactory"

# Force the JVM to include stack trace information in all exceptions.

# Without this option, server JVM optimisations at runtime may result in

# the loss of stack traces for frequently generated exceptions.

OPTIONS="$OPTIONS -XX:-OmitStackTraceInFastThrow"

# This option will force Rhino to use the specified savanna application

# version instead of the default build specific one.

# OPTIONS="$OPTIONS -Dsavanna.appversion=Override version goes here"

# This option (when set to 'true') prevents unauthenticated management access

# through the platform JMX interface (e.g. via a local instance of jconsole).

OPTIONS="$OPTIONS -Drhino.jmx.requireauth=false"

# This option overrides the default trace level (normally 'info') of all

# tracers with the specified level.

# Valid values are: off, severe, warning, info, config, fine, finer, finest

# OPTIONS="$OPTIONS -Drhino.tracer.defaultlevel=finest"

# This option will enable automatic heap dumping in the event of

# an OutOfMemoryError. Only available in 1.4.2_12+, 1.5.0_07+,

# and 1.6+ Sun JVMs.

# OPTIONS="$OPTIONS -XX:+HeapDumpOnOutOfMemoryError"

# This option takes a comma-separated list of classes implementing the

# PlatformHooks interface. Classes specified here should also be placed in

# a new jar file at 'lib/platform-hooks.jar'. This feature is not

# intended for general use. Please contact OpenCloud for further details.

# OPTIONS="$OPTIONS -Drhino.platform.hooks=<classname>"

if [ -n "$POLICY_FILE" ]

then

OPTIONS="$OPTIONS -Djava.security.manager"

OPTIONS="$OPTIONS -Djava.security.policy==${SCRIPT_WORK_DIR}/config/${POLICY_FILE}"

fi

#OPTIONS="$OPTIONS -Djava.security.debug=access,failure"

OPTIONS="$OPTIONS -Djava.security.auth.login.config=${SCRIPT_WORK_DIR}/config/rhino.jaas"

#OPTIONS="$OPTIONS -Djmx.invoke.getters"

OPTIONS="$OPTIONS -Djava.io.tmpdir=${RHINO_WORK_DIR}/tmp"

OPTIONS="$OPTIONS -ea"

OPTIONS="$OPTIONS $GCOPTIONS"

# Set external command options

OPTIONS="$OPTIONS -Dcom.opencloud.javac.external=${RHINO_HOME}/run-compiler.sh"

OPTIONS="$OPTIONS -Dcom.opencloud.jar.external=${RHINO_HOME}/run-jar.sh"

# Use Rhino's MBeanServer implementation

OPTIONS="$OPTIONS -Djavax.management.builder.initial=com.opencloud.rhino.management.SleeMBeanServerBuilder"

JAVA="${JAVA_HOME}/bin/java $JVM_ARCH_OPTION -server"
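The `OVERRIDE_HEAP_SIZE`, `OVERRIDE_MAX_NEW_SIZE`, and `OVERRIDE_NEW_SIZE` handling near the top of the script can be tried in isolation. The sketch below is not part of Rhino: `pick_size` is a hypothetical helper, and the `4096m` override is an arbitrary illustrative value. It relies on `${override:-default}` expansion, which, like the script's `if [ "${OVERRIDE_HEAP_SIZE}" ]` tests, lets the override win only when it is set and non-empty.

```shell
#!/bin/sh
# Hypothetical helper (not part of Rhino): print the override when it is
# set and non-empty, otherwise print the configured value.
pick_size() {
    override=$1
    configured=$2
    echo "${override:-$configured}"
}

# Values from the benchmark's config_variables file.
HEAP_SIZE=8192m
NEW_SIZE=256m

# Simulate an operator overriding only the heap size (illustrative value).
OVERRIDE_HEAP_SIZE=4096m
OVERRIDE_NEW_SIZE=

HEAP_SIZE=$(pick_size "$OVERRIDE_HEAP_SIZE" "$HEAP_SIZE")
NEW_SIZE=$(pick_size "$OVERRIDE_NEW_SIZE" "$NEW_SIZE")
echo "HEAP_SIZE=$HEAP_SIZE NEW_SIZE=$NEW_SIZE"
```

For the benchmark runs described here, the values in `config_variables` (8192m heap, 256m new generation) are the ones listed in the configuration table.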

Benchmark Results

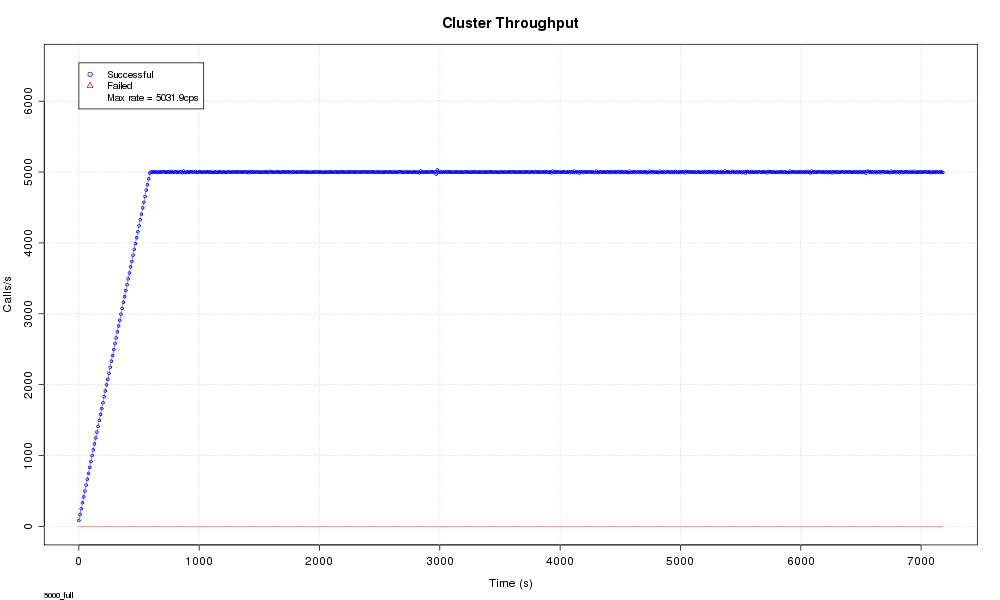

Below are the results for the single-node and two-node benchmarks, followed by some notes on the benchmark statistics.

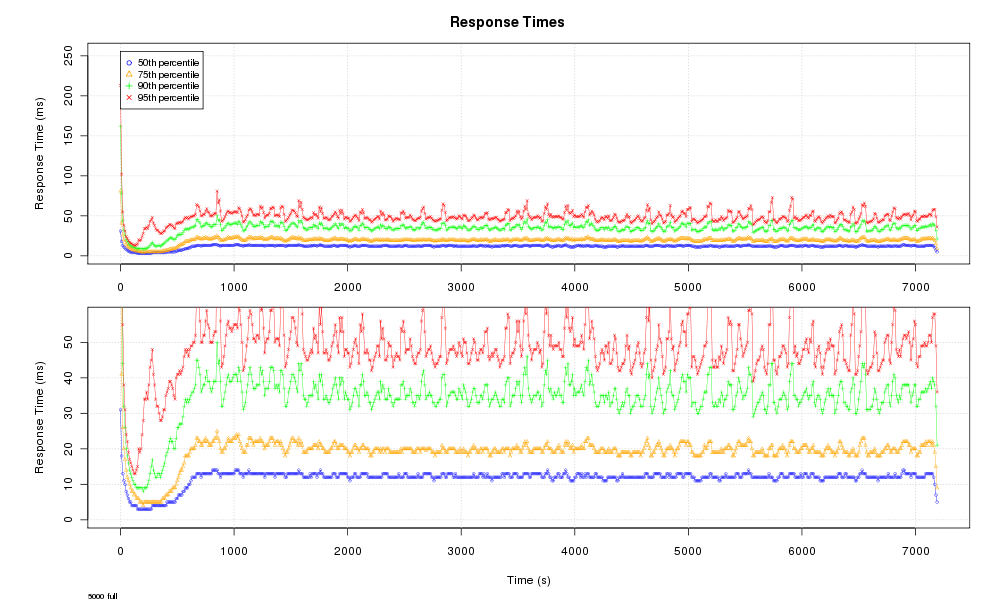

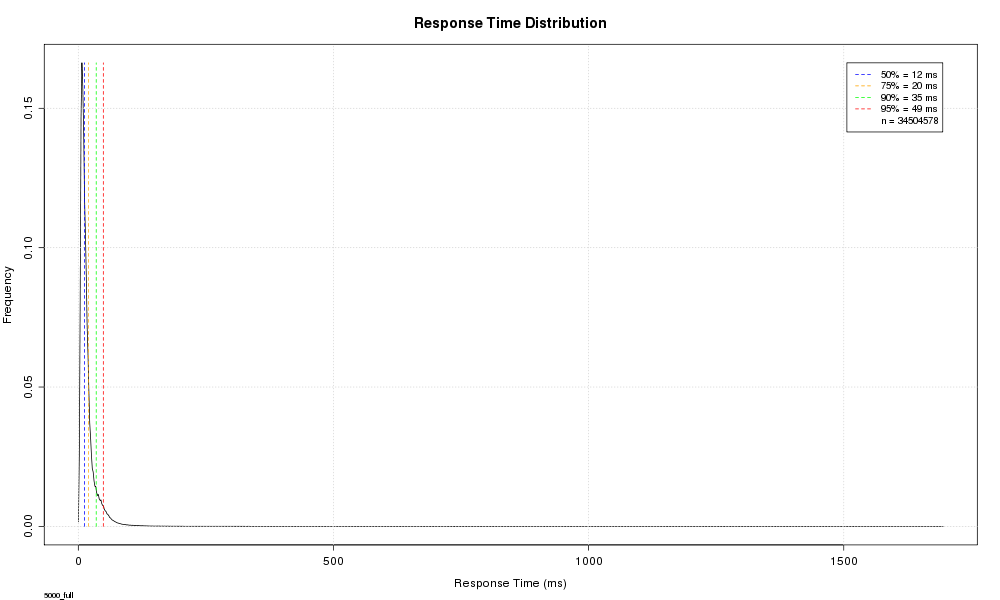

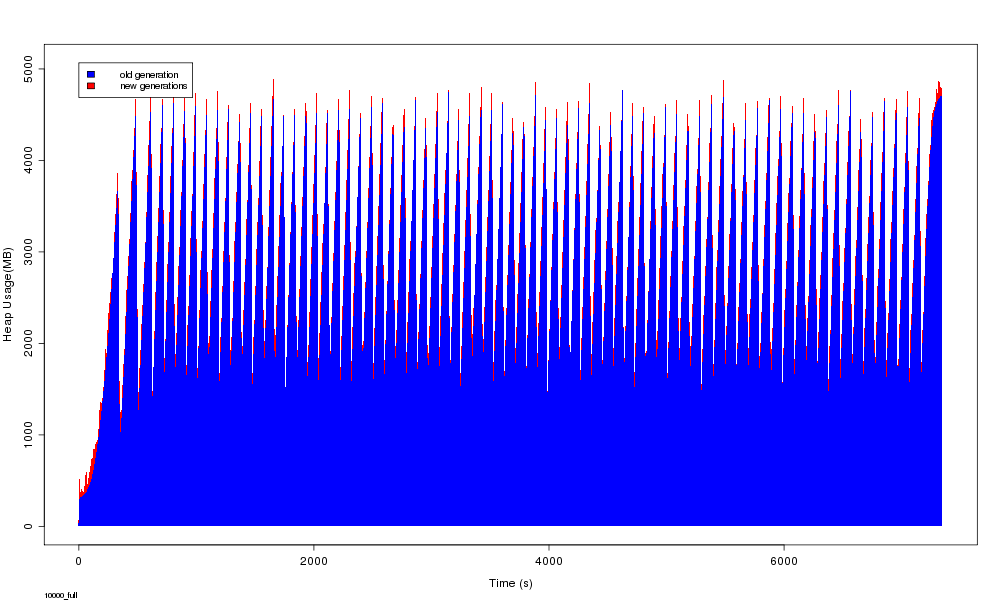

Single-node benchmark

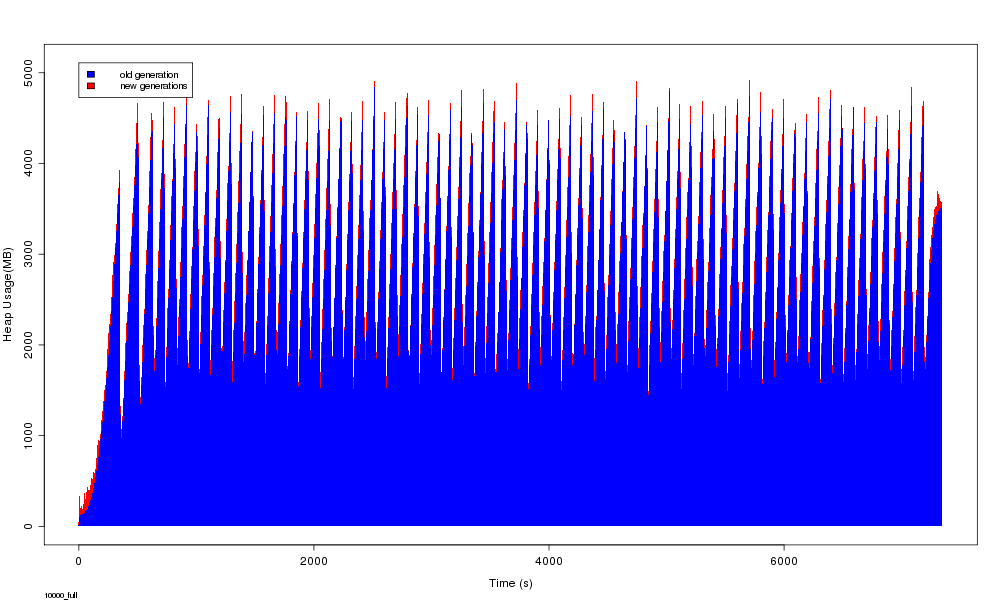

Two-node benchmark

Notes

- All calls are monitored for the entire duration on the originating leg.
- The switch simulator measures call-setup time from when it sends the InitialDP to the application until it receives the corresponding response.
- The response time (or "call-setup" time) is the time between sending the InitialDP (1) and receiving the Connect (2), as measured by the switch.
- The response time includes the time for any MAP query to the HLR that the application may have performed.
- To calculate the number of dialogs (for example, using 5,000 calls per second):
  - All calls involve originating and terminating treatment (in our example, this means 10,000 dialogs).
  - 50% of calls involve a query to the HLR (2,500 dialogs).

  ... for a total of 12,500 dialogs per second.
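The dialog arithmetic in the last note can be written out as a small calculation. This is a sketch only: the function name `dialogs_per_second` and its parameters are ours, and the 10,000 calls per second figure simply applies the same arithmetic to the two-node aggregate rate.

```shell
#!/bin/sh
# Dialogs per second for a given call rate, following the notes above:
#   - every call has an originating and a terminating dialog (2 per call)
#   - a percentage of calls (50 in the benchmark) adds one HLR query dialog
dialogs_per_second() {
    calls_per_second=$1
    hlr_query_percent=$2
    call_dialogs=$(( calls_per_second * 2 ))
    hlr_dialogs=$(( calls_per_second * hlr_query_percent / 100 ))
    echo $(( call_dialogs + hlr_dialogs ))
}

dialogs_per_second 5000 50     # single-node rate
dialogs_per_second 10000 50    # two-node aggregate rate
```

At 5,000 calls per second this gives 10,000 + 2,500 = 12,500 dialogs per second, matching the example in the notes.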